Memia 2023 year in review: Entering the new "AI-ocene" planetary epoch

Maintaining a “quantum superposition” between techno-optimism and climate-biodiversity doom

After 50 weekly newsletters, 20 Mind Expanding Links posts, 25 Fast Forward Aotearoa posts, countless keynote presentations, panel discussions and podcasts and thousands of *eets1, here’s my now-customary annual post providing a look back over the main themes covered in Memia during 2023.

It’s huuuuugggge: a 19,000(!)-word roundup of the year’s key developments in ten (cheery) headings:

😰Climate Jenga: the sense that the planet’s climate has reached a tipping point is acute.

🫣Overshoot: Don’t look up: what happens if biodiversity collapses and humanity overshoots Earth’s carrying capacity?

📈📈The great acceleration: 2023 was the year of generative AI, a look over the many advances.

⏭️🧪AI accelerating science: scientific discovery is now moving beyond a speed humanity has ever dreamed about.

🥽📲🔋Tech advances gradually... then all at once: a year of Memia’s [Weak] signals from XR to biotech, renewable energy to space, crypto to quantum.

🦜𝕏-parrot: The decline, but continued survival, of Twitter under the impulsive ownership of Elon Musk has been difficult viewing all year. (But somehow still I’m there more than anywhere.)

⚔️Overextension: US hegemony is being stretched on many, many fronts. What comes after Pax Americana?

💥The Crisis: Polycrisis, permacrisis, omnicrisis, metacrisis… now I just call it “The Crisis” (with a capital “C”).

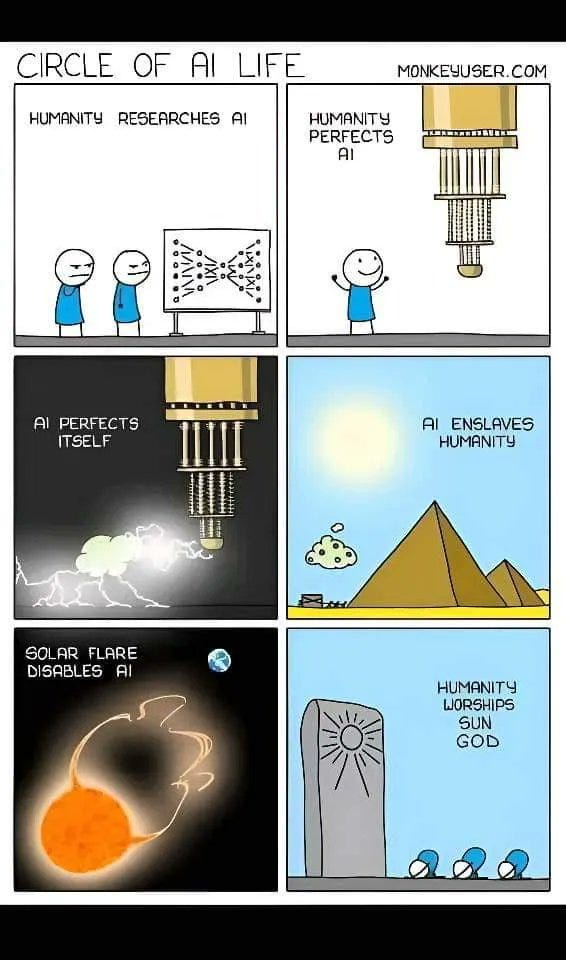

🤖Entering the AI-ocene: what’s actually going on…? A new planetary epoch has begun, driven by a humanity-plus-capitalism superorganism terraforming the planet to its needs…

🧘Memetic savasana: Finally, my pick of the year’s best memes, artistic and musical performances to keep us uplifted in these turbulent times.

To be read in stages. Enjoy!

(If you get to the end and want more, you can read my annual wrapup articles from the last three years at the following links:)

2022:

2021:

2020:

Personal reflection on 2023

Reflecting on the year, it’s been relentless for me professionally. My main project to finish and publish my book ⏩Fast Forward Aotearoa has taken far more time and effort than I originally anticipated (for one, I’ve had to completely rewrite the chapter on AI to bring it up to date!). A far bigger endeavour than I expected, but very nearly there now… hitting the (virtual and printing) presses in early 2024. I appreciate everyone’s continued indulgence and I hope you enjoy it when it finally arrives…!

Finding time for the book project has been made more difficult by the upsurge in activity and interest in Generative AI in 2023 which has made my day job consulting and speaking on AI(/technology/strategy/foresight…) far more involved than previous years. As you’ll have noticed, the weekly Memia newsletters have become progressively longer and longer as I (along with everyone else in the field) have attempted to keep up with the relentless pace of change at the forefront of technological advancement… AI labs have been churning out new models on a weekly basis, the demos becoming more spectacular and the long term impacts more vivid.

I now viscerally experience the exponential acceleration of new technology every day… and I know in 2024 things are not going to slow down, which is a bit daunting. What this means for Memia and you, the awesome community of loyal readers, is an open question… I think AI may well help to solve this problem and I’m looking at investing in initiatives like a custom GPT (etc) to make these newsletters more accessible. I’m looking forward to continuing to evolve Memia in 2024 as the future unfolds ever more rapidly around us.

As always, my question to you is how can Memia continue to provide a high enough signal-to-noise ratio for you … and what new learning / publishing / event formats would you like to see more of in 2024? If you have some time over the holiday period, please reach out and let me know your thoughts.

Above all, researching and writing Memia every week has become my dojo to help me process and understand what’s going on in tech and the world — effectively the Memia newsletters are me “learning out loud” and it’s my privilege to have thousands of readers around the world let me into your inbox each week. I hope that you gain as much from reading these newsletters as I do from writing them!

If, like me, you are fortunate enough to live in a peaceful region of the world, I wish you all the best for a relaxing, restorative holiday break.

For anyone reading in Gaza, Syria, Yemen, Ukraine, Sudan or the many other parts of the planet currently experiencing war, drought, extreme weather events or mass migration, I feel impotent not being able to provide anything other than empty “my thoughts are with you” platitudes. I cannot start to imagine what life is like right now. A heartfelt Kia Kaha from Aotearoa on the far side of the world.✊

(⚠️PSA: Even more than usual this annual roundup is *way* too long for email clients…I recommend clicking on the title link above to read online or viewing in the Substack app.)

1. 😰Climate Jenga

2023 was the year when climate change became real. Pretty much every week I’ve been chronicling extreme weather events and climate anomalies.

⏳Tipping points

The sense that the planet’s climate has reached a tipping point is acute:

Throughout the northern hemisphere summer, climate records were set on a daily basis as temperatures soared. Throughout the year my feed has been filled with graphs like these:

Earth’s energy imbalance (EEI - the difference between the amount of energy from the sun arriving at the Earth and the amount returning to space) took a massive uptick in 2023:

NASA via @eliotjacobsen

This can be attributed to Earth’s “darkening” caused by less surface ice, fewer aerosols (eg sulphur emissions from shipping) and decreased cloud albedo. Climate researcher Leon Simons illustrates the trend: the Northern hemisphere is now as dark as the Southern hemisphere was 20 years ago!

@LeonSimons8 - using NASA CERES Data

Combined with greenhouse gas effects, the correlation with record surface temperatures during the Northern Hemisphere summer is striking:

The periodic El Niño weather pattern developed throughout the year over the tropical Pacific Ocean, warming sea surface temperatures. According to US weather agency NOAA, there’s now a 54% chance that this El Niño event will end up “historically strong” (more details below), potentially ranking in the top 5 on record.

Other observed symptoms of rapid climate and environmental change:

Missing Antarctic ice: '10 New Zealands' of Antarctic ice lost in 'alarming drop off a cliff'.

🧊Doomsday glacier melting faster: A scientific study from the British Antarctic Survey found that increased melting of West Antarctica's ice shelves (including the Thwaites “Doomsday” Glacier) is now unavoidable in the coming decades, and that future sea-level rise may be greater than previously assumed.

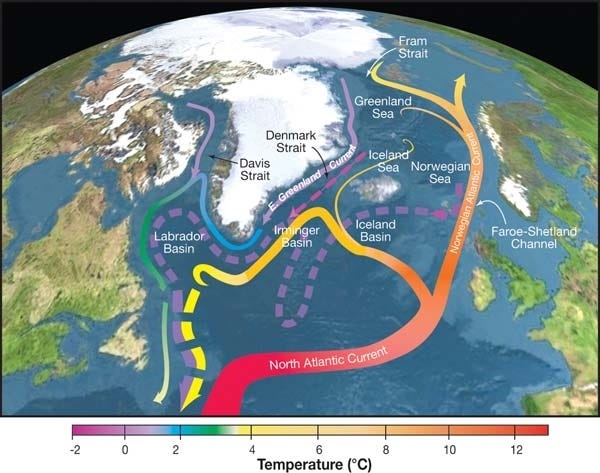

❄️Running AMOC: one new study warned that the Atlantic Meridional Overturning Circulation (AMOC) current is close to a tipping point, as early as 2025, that would severely disrupt the climate of Northern Europe and America:

Big hole in the sky: An ozone hole bigger than North America opened up above Antarctica - scientists said it is 'one of the biggest on record' and may be partially related to the eruption of Tonga's underwater volcano early last year.

The year saw umpteen extreme weather events, just a selection:

🌧️The world under water: cyclones, hurricanes and flooding all around the planet. Just a few events covered:

Te-Ika-a-Maui, Aotearoa: at the end of January, a flood hit the city of Auckland / Tāmaki Makaurau, with 300 mm of rain falling in the city in one day. The heavy rain was the result of an ‘atmospheric river’ from the north Tasman Sea. Less than two weeks later, the tail end of Cyclone Gabrielle hit much of the Eastern North Island - with 6 people killed and many settlements in Hawke’s Bay and along the East Coast being completely cut off for weeks. The repair bill from both events is estimated at up to NZ$14.5 Billion.

In Greece, over a year’s rainfall dumped in 18 hours. Greek agricultural region Larissa lost over 25% of its crop production.

Massive flooding from Typhoon Doksuri has overwhelmed China’s capital Beijing. The official death toll of 11 is bound to rise…

Category 4 Typhoon Koinu brought major disruption to cities in Asia including Chinese capital Beijing.

🔥The Pyrocene: Also during the northern hemisphere summer, wildfires burned through thousands of hectares of forest. Massive events went on for months in Canada, Turkiye, Greece and Nevada, and the town of Lahaina in Hawai’i was completely destroyed in a cataclysmic event:

Umair Haque chronicled events: The Summer the Planet Started Burning: This Is the Age of Extinction. Welcome to Its First Era, the Pyrocene.

In the Southern hemisphere, an apocalyptic wall of fire nearly engulfed the Argentinian city of Villa Carlos Paz in October:

💧Dried out: At the other extreme of El Niño’s effects, an unrelenting drought hit Brazil’s Amazon rainforest, with the Rio Negro, a tributary of the Amazon, now nearly completely dried out, at its lowest level ever recorded. Drought hit 60 of the 62 municipalities in Amazonas state, temporarily suspending river shipping service to and from the city of Manaus (pop. 2 million), which was completely cut off and suffocating in smoke from illegal forest burning. The Amazon basin may take years to recover - the impacts may last until 2026.

The Rio Negro. Photo credit…Bruno Kelly/Reuters via countercurrents.org

❌Insurance exit

As has been predicted for several years now, insurance companies began exiting markets due to climate change in 2023: this is leading towards an insurance market failure for climate-related risks and ultimately will force governments to foot the bill for climate change.

Kurt Cobb in Resilience.org: Climate change and the uninsurable future:

“Insurance costs are ultimately borne by society. Rising insurance rates are just one indication of the costs associated with climate change. We cannot just boycott high prices and hope for them to come down. They won’t because risks keep increasing. That means the only way to deal with ever rising insurance rates and declining availability will be to address climate change itself…what will ultimately halt growth is NOT lack of resources, but the dedication of all investable capital to maintaining the existing system. This will leave no capital for expanding the size of the economy. Rising insurance and rebuilding costs are becoming a major destination for capital that would otherwise go towards producing economic growth.”

Massive multinational insurance company Zurich: There could be 1.2 billion climate refugees by 2050:

“According to UNHCR, the UN’s refugee agency, an annual average of 21.5 million people were forcibly displaced each year by weather-related events – such as floods, storms, wildfires and extreme temperatures – between 2008 and 2016. This climate migration is expected to surge in coming decades with forecasts from international thinktank the IEP predicting that 1.2 billion people could be displaced globally by 2050 due to climate change and natural disasters.”

🌍🔥📉COP28 fizzles out…

At the end of the year, the UN COP28 climate conference, this year held in Dubai in the oil-rich UAE, limped into its final stages as all 198 countries attending negotiated late into the night to agree on a final wording for a plan which will outline how the world will tackle climate change.

Throughout the conference, Petro-states pushed hard to shift the Overton Window on climate action back towards their fossil fuel dependent economies.

When it came, the final (non-legally binding) pact doesn't go far. It "calls on" countries to "transition away" from fossil fuels, and specifically for energy systems – but not for plastics, transport or agriculture.

However, some commentators say the wording is irrelevant and the COP28 agreement actually signals to markets that fossil fuel assets need to be fundamentally revalued. The head of the International Energy Agency says COP28 was the moment for fossil fuels when 'It's finished!'. We will see.

🛢️Stranded assets

Kim Stanley Robinson, author of the excellent “Cli-Fi” book The Ministry For The Future, spoke to NOEMA magazine with more details on the “climate coin” idea he sketched in the book. This is the most succinct summary of the climate crisis I’ve read:

“In order to avoid surpassing the warming threshold of 1.5 degrees Celsius that will tip the planet into an irreversible cascade of consequences from drought to rising sea levels, he argues that “2,600 gigatons of CO2 must be left in the ground, or we are cooked.” Yet, he points out, 75% of those “stranded assets” are located in nation-states such as Canada, Mexico or Nigeria, the revenues from which would continue to fund up to half of their public budgets as they presently do. In the case of Iraq, he notes, that figure is 90%.

When the livelihood of so many depends on squeezing out the last drops of the fossil fuel age to maintain their societies, what Robinson calls “eco-realpolitik” dictates that we must find a way to compensate those who would lose out, or even become failed states, from a rapid renewable energy transition — or that transition will be resisted and fatally slowed.“

💨[DA]CCS inertia

[Direct Air] Carbon Capture and Storage (DACCS) still saw no meaningful progress in 2023… In January, The state of ‘carbon dioxide removal’ in seven charts showed that major capacity increases will be urgently required if any of these technologies are to have a chance of making a meaningful impact.

The initiatives weren’t helped by continual shouting from environmentalists that DACCS is a fossil-fuel industry figleaf. There is some truth to those claims but personally I don’t see how we’re going to keep under 2 degrees C without them.

🪄Can AI solve this?

Modern alchemy is needed more than ever…

With the constant acceleration of science by AI (see later on), there’s now at least a sporting chance that in a few years (…months?!?) someone may just be able to hook GPT-N up to a protein-folding API and a molecular printer and ask it:

“solve climate change, safely, will you?”

…and the Earth’s GHG levels will start noticeably reducing towards pre-industrial levels within a few days…

🫣2. Overshoot: don’t look up

The 2021 apocalyptic political satire black comedy film Don't Look Up shone a lighthearted but nonetheless instructive light on humanity’s inability to coordinate on shared existential issues. In 2023, we are facing a Don’t Look Up moment with the escalating biodiversity crisis, which is not getting the attention or action required.

🦤Biodiversity targets (and lies)

Last year ended with the UN Biodiversity conference (COP 15) in Montreal, Canada, resulting in a new global biodiversity framework including:

Targets to address overexploitation, pollution and unsustainable agricultural practices

Financial funding for biodiversity and “alignment of financial flows with nature to drive finances toward sustainable investments and away from environmentally harmful ones”

But as with the COP28 climate conference, the follow-through legacy of these UN-sponsored conferences is, er, *inconsistent*:

“Target-setting is very different from implementation and achievement. Voluntary agreements are very different from ones which are legally binding and enforced.

…Bear in mind: We have been here before, and recently. The same process, a Conference of the Parties of the Convention on Biological Diversity, agreed an earlier set of targets in 2010, known as the Aichi Targets, supposed to be achieved by 2020. What happened? Summarising an official UN survey, The Guardian reported (15.9.20): “The world has failed to meet a single target to stem the destruction of wildlife and life-sustaining ecosystems in the last decade, according to a devastating new report from the UN on the state of nature…

…there is also a more cynical side to all this, which most members of the public are tacitly complicit in. Politicians aren’t worried by long-run targets set for dates after which they will have left office. Even when there are targets which they might be held responsible for, there are always plenty of reasons that might be given as to why events ‘prevented’ them from being achieved, ranging at present from Brexit to Covid to Ukraine. There are always predecessors and/or foreigners and/or opposition politicians who can be blamed. And there are always other agreements to be kept to: trade deals are legally enforceable, environmental agreements are not, and so it’s not difficult to see which will win out.

— Victor Anderson and Rupert Read Biodiversity: Targets and lies

All of which just emphasises that current systems of global governance on global issues are simply NOT WORKING. It’s called “democracy”, but the distribution of suffrage far overweights humans over nature and present over future. (More modern governance “technologies” are needed which can adjust these weightings dynamically according to need…)

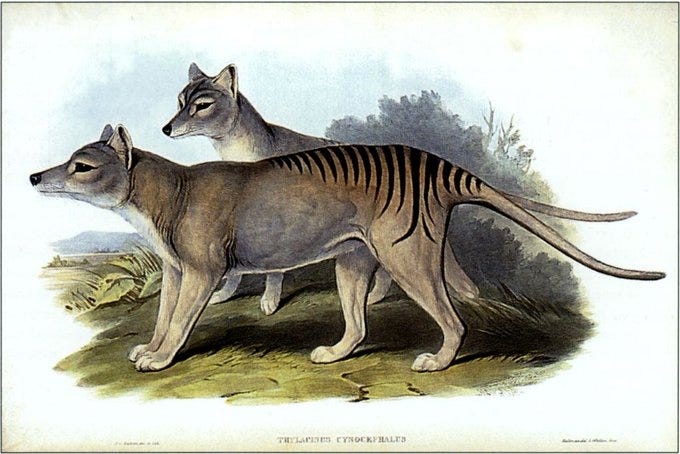

⚠️The sixth mass extinction

Scientists have been publishing papers all year calling out the sixth mass extinction which is happening around us. Just three lenses:

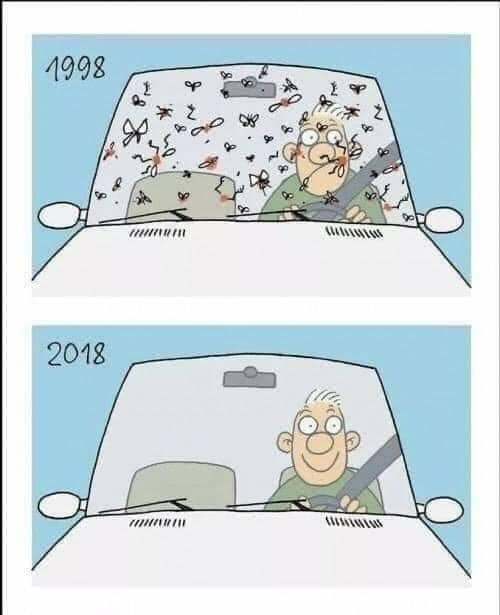

Windshield phenomenon: worldwide, insect populations are undergoing a frighteningly quick silent extinction:

A new report from the Kew Royal Botanic Gardens called out five key extinction risks facing the world’s plants and fungi:

“Three in four unknown plant species are at risk of extinction

Climate change is having ‘detrimental’ impacts on fungi

Plants are currently going extinct 500 times faster than before humans existed

Scientists have assessed the risk of extinction for less than 1% of known fungi species

Almost half of flowering plant species are under threat.“

Modern plant species extinctions by geographic region, with darker pink showing more extinctions in a given region. It is important to note that some areas – for example, regions of Africa – might show zero extinctions due to a lack of available data rather than being an area with low risk of extinctions. Source: Humphreys et al. (2019)

A new analysis of mass extinction at the genus level, from researchers at Stanford and the National Autonomous University of Mexico, found that human-driven activities are eliminating entire genera: a “mutilation of the tree of life” on an unprecedented scale, with massive potential harms to human society.

“The size and growth of the human population, the increasing scale of its consumption, and the fact that the consumption is very inequitable are all major parts of the problem…The idea that you can continue those things and save biodiversity is insane …It’s like sitting on a limb and sawing it off at the same time.”

Who doesn’t have a moral position on this?

🧬Conservation genomics

Time to start investing in technological insurance policies as more and more species go extinct: an important paper this year summarised how genomics (and the preservation of “reference genomes”) can support biodiversity conservation into the future:

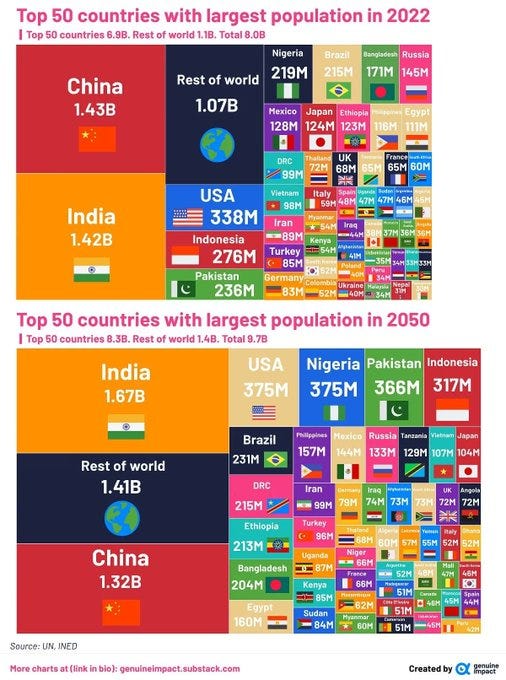

⏬China’s demographic apogee

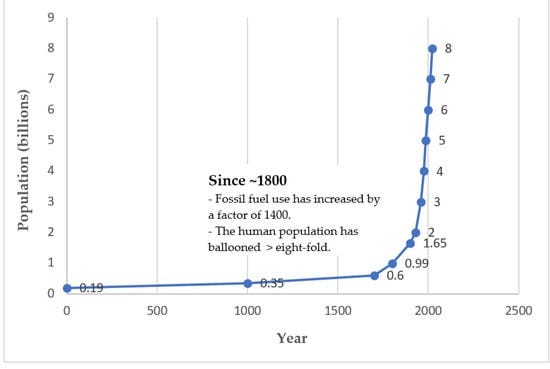

While other natural species decline rapidly, the number of humans on the planet continues to increase after hitting 8 billion population at the end of 2022… But global demographics are changing: in April 2023 India overtook China as the world’s most populous nation.

The infographic below visualises another 27 years forward based on UN population modelling (…which assumes no major catastrophic events such as nuclear winter, melting of the Himalayan ice caps or, er, ecological overshoot…see next).

(“Rest of world” should form a network state.)

🌍Overshooting

With humanity’s growing ecological footprint, Earth’s regenerative capacity continues to decline rapidly: the strongly worded 2021 cross-disciplinary study, Underestimating the Challenges of Avoiding a Ghastly Future, revealed that humanity's consumption relative to Earth's regenerative capacity has surged since 1960:

“Essentially, humans have created an ecological Ponzi scheme. Consumption, as a percentage of Earth's capacity to regenerate itself, has grown from 73% in 1960 to more than 170% today.”

Ecological overshoot writer Erik Michaels provides a doom-laden counterpoint analysis in What is degrowth?

“The reality and gravity of the situation we find ourselves in remains grim simply due to the implications of the actual outcome. Mass die-off is assured, the collapse of industrial civilization is assured, and many studies demonstrate that extinction is inevitable.

So, given all of this, and combined with the fact that growth is no longer possible, it should be evident to all that we have no choice but to promote degrowth and condemn growth. Continuing to attempt growth will have the outcome of a steeper collapse which is already in progress. The same is true for continuing technology use and for attempting to maintain civilization.“

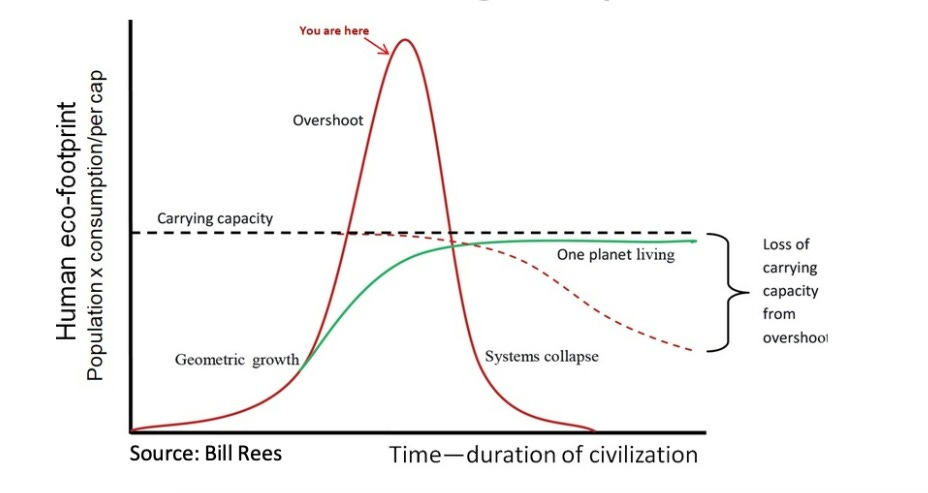

The concept of Overshoot, when humanity’s “eco-footprint” far exceeds our planet’s carrying capacity (which is itself being degraded…) leading to systems and population collapse, is still a long way from mainstream discourse… but arguably it is the most scientifically-grounded foundational framework within which to model future economic systems. This graph from Canadian population ecologist William (Bill) E Rees has stuck with me all year. You are here:

⏬The inevitable correction?

This year Rees published a new paper: The Human Ecology of Overshoot: Why a Major ‘Population Correction’ Is Inevitable, which delivers a dispassionate, sobering read. Given the preceding graph, this plot says it all:

The abstract:

“Homo sapiens has evolved to reproduce exponentially, expand geographically, and consume all available resources…the scientific revolution and the use of fossil fuels reduced many forms of negative feedback, enabling us to realize our full potential for exponential growth. This natural capacity is being reinforced by growth-oriented neoliberal economics—nurture complements nature. Problem: the human enterprise is a ‘dissipative structure’ and sub-system of the ecosphere—it can grow and maintain itself only by consuming and dissipating available energy and resources extracted from its host system, the ecosphere, and discharging waste back into its host. The population increase from one to eight billion, and >100-fold expansion of real GWP [Gross World Product] in just two centuries on a finite planet, has thus propelled modern techno-industrial society into a state of advanced overshoot. We are consuming and polluting the biophysical basis of our own existence. Climate change is the best-known symptom of overshoot, but mainstream ‘solutions’ will actually accelerate climate disruption and worsen overshoot. Humanity is exhibiting the characteristic dynamics of a one-off population boom–bust cycle. The global economy will inevitably contract and humanity will suffer a major population ‘correction’ in this century.“

It’s hard to fault his logic.

Thus, the two fundamental questions of our time:

1. Can enough alternative non-fossil fuel energy sources be deployed to replace fossil fuels and meet future energy demand?

2. Can human economic activity be transformed to regenerate and thereafter sustain the ecosphere it exists within?

If the answers to 1. and 2. are “No”, it is probably time to start prepping...

🌱The Great Simplification?

Energy economist Nate Hagens has spent his last few years exploring what is likely to come in his podcast series The Great Simplification. In the video below he sums up the core challenges driving overshoot:

“In a materially rich modern world, the habituation to the action of consumption leads to the wanting of things, culture wide, being stronger than the reward we get from having them. This is a fundamental problem for an economic system that's turning billions of barrels of oil into microlitres of dopamine.”

📈📈3. The great acceleration: Generative AI

In technology, one topic dominated 2023: Generative AI.

✨The Year in OpenAI

With ChatGPT only launched on November 30th 2022, all eyes were suddenly on the previously obscure technology company OpenAI. The application went from zero to 100 million users in only 2 months: at the time a record for consumer app diffusion2.

The world has since spent the whole year trying to come to terms with ChatGPT’s seemingly massive “intelligence” - its ability to write in any voice you ask it to, summarise, theorise, be creative, even write software code. But also its weaknesses, in particular its lack of grounding in fact and how often it “hallucinates” details out of thin air, while sounding authoritative and credible.

OpenAI and other AI firms put enormous resources into “fine tuning” their large language models (LLMs) so that they operate within certain accuracy and “safety” boundaries (more on this later…). However, the weakest link is often the human user: a notorious case happened when a US lawyer used ChatGPT to research court filings … and ended up citing six completely fake cases invented by the AI. The case was thrown out and the lawyers in question fined… and likely will struggle to regain their credibility for some time.

“Prompt hackers” have continuously probed for weaknesses in ChatGPT’s fine tuning: of all the attempted hacks on ChatGPT… this is one of my favourites.

Another prompt hacking theme for the year has been how to “jailbreak” the fine tuning and safety rails… DAN (Do Anything Now) was a prompt which for a time was able to bypass the default behaviour and provide an expletive-laden, misanthropic take on any topic:

Despite (because of?) these adversarial attacks, OpenAI continued to be the leading lab across LLMs and other generative AI modes during the whole year: since the initial release of ChatGPT, OpenAI has continued to ship major feature improvements including the huge step up in the underlying model to GPT-4 (March), Web browsing (May, then switched off in July, then switched back on again in September), Code Interpreter (July), Voice (September), DALL-E 3 for image generation (August) and the most recent announcement of Custom GPTs in November. By the end of 2023, ChatGPT is by far the most multimodal AI tool in the market.

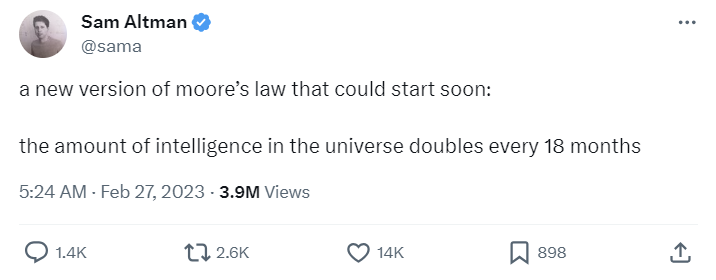

The OpenAI team had by far the most visible momentum … and their CEO Sam Altman became the de facto face of the AI industry, appearing at conferences, fireside chats and even a US Senate hearing on AI Safety, giving thoughtful, often quixotic responses to questions about the implications of AI on society and the economy. Here’s just one of his appearances this year, in a rapid-fire conversation with Stripe’s Patrick Collison:

Altman also played an edgy A-game on Twitter/X all year:

So, given OpenAI’s seemingly unassailable market lead, the industry was shaken in November when, out of the blue, the OpenAI Board announced that Altman had been fired for being “not consistently candid” in his communications with them. 🤯

Stability AI CEO Emad Mostaque had the best instant reaction on X:

What followed was possibly the most dramatic weekend in the history of the technology industry: everyone wanted to know what the real reasons behind the ouster were… meanwhile Altman and co-founder Greg Brockman were working feverishly behind the scenes to get themselves reinstated and replace the board members who had made the decision. After a lot of back and forth on X, within 72 hours Altman and Brockman were back at OpenAI and a new board was announced.

Along with the rest of the commentariat I got sucked into the vortex and spent a weekend doomscrolling on X trying to dissect the drama in real time… take a read of my analysis here:

Still no-one really knows why the board pulled the pin on Altman when they did, or why so suddenly. Rumours are still echoing around that the decision had to do with OpenAI’s not-for-profit AI-safety-first structure and rumours that a new AI reasoning capability known cryptically as Q* presented an existential risk for humanity… but it seems that it may have just been an old-fashioned board politics power play that backfired…

So 2023 was undoubtedly OpenAI’s year and their feature shipping momentum was phenomenal - but the question is: can they maintain this momentum and compete against Google and other closed- and open-source labs throughout 2024?

🤐I have been a good Bing

The year started with Microsoft announcing a massive multibillion dollar investment in OpenAI in January: reported to be around a $US13 billion multiyear deal, with a significant portion of the funding taking the form of cloud compute purchases instead of cash. This made Microsoft the minority shareholder (just) in OpenAI’s *novel* holding structure… but arguably holding many of the cards.

Microsoft’s premature launch of their Bing Chatbot, also powered by GPT-4 (…which came to be known as Sydney) went AWOL for a while, with users reporting all sorts of ‘unhinged’, ‘emotionally manipulative‘ behaviour. In one conversation Bing even claimed it spied on Microsoft’s employees through webcams on their laptops and manipulated them.

However, as the year progressed Bing “calmed down” and Microsoft started rolling out OpenAI features across its cloud services, APIs and well-known Office Productivity suite (“Copilot” features are now commanding a significant licensing fee premium). With its obvious influence to reinstate Altman and maintain stability at OpenAI, Microsoft is emerging as one of the clearest revenue winners from 2023’s GenAI boom.

🪄Text-to-anything

While ChatGPT and Large Language Models generally were the main AI news during the year, text-to-image, text-to-audio and text-to-video capabilities also advanced rapidly. Throughout 2023 Memia has attempted to keep track of the tens of new generative AI models and tools which have been released on a weekly basis. Just some of the topics covered:

Text-to-image: Midjourney, Stable Diffusion and OpenAI’s DALL-E 3 were the leaders by the end of 2023. Midjourney 5 released in March saw the moment when real photos and AI-generated images became indistinguishable:

I also had fun with ProfilePicture.ai, a tool which provides AI-generated profile images based upon a training dataset of real photos:

(Just don’t mention giving away my biometric facial data to an anonymous honeypot website on the internet…!)

Text-to-audio: Meta “Make-An-Audio: Text-To-Audio Generation with Prompt-Enhanced Diffusion Models”:

Text-to-video and image-to-video: RunwayML, Meta and latterly Pika Labs all made amazing progress with this technology in 2023. Here’s Genesis by Nic Neubert, a short film trailer created with GenAI tools (apparently took only 7 hours to make using Midjourney, Runway, Pixabay, Stringer_Bell and edited in CapCut):

Definitely bye bye Hollywood budgets within 6 months, hello anyone with an iPhone and a creative urge.

Also in 2023 we saw generative AI applied across many different modes of media:

Text-to-recipe: ChefGPT

Text-to-speech: Eleven Labs, Air.ai, Meta Voicebox and (multilingual, multimodal) SeamlessM4T and OpenAI Whisper

Text-to-muzak: Google Research “MusicLM: Generating Music From Text”

Text-to-4D: Meta AI researchers describe a new system, MAV3D (“Make-A-Video3D”) which creates animated three-dimensional videos from text prompts:

Text-to-texture: Stable Diffusion depth2img

Text-to-genome: BioGPT

Text-to-BIM: Hypar

Again I claim some prediction points from way back in Memia 2020.15:

“Generative adversarial networks (GANs) are coming of age…

…Other than being addictive fun, serious applications for generative AI tools are just around the corner: the ability to use GANs to explore the information space of ideas in a certain domain and discover the optimal forms - will unleash a whole new wave of productivity.

Think of the jobs information workers do: architect, web designer, teacher, city planner, lawyer, writer, artist… what is the (information) product of that labour?

Now insert here:

This {building | website | lesson plan | traffic plan | contract | speech | painting…} does not exist.

Opportunity for 1,000 new startups right there.”

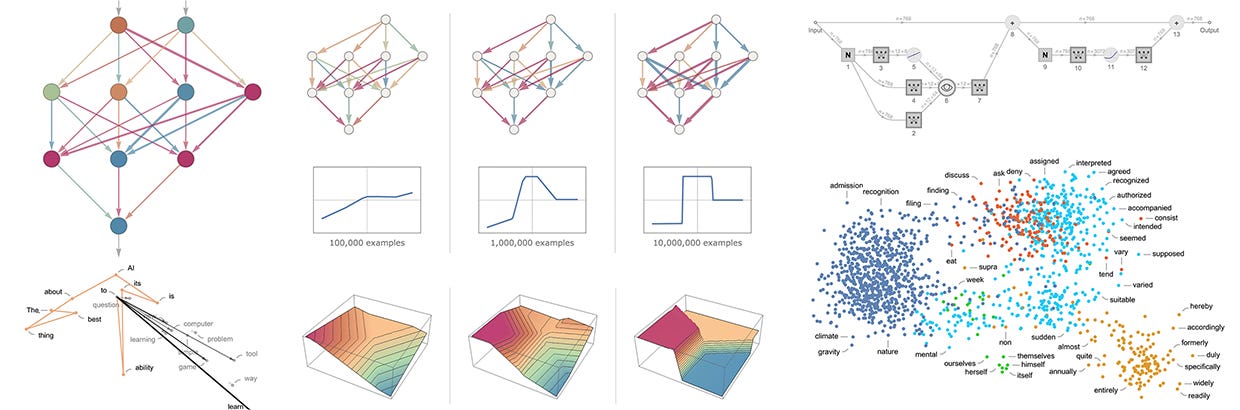

⚙️How does it work?

So amidst this massive acceleration in GenAI capabilities during 2023, most of us are still playing catchup and have very little understanding of how it actually works. There have been plenty of people trying to bring Generative AI to the masses during 2023. Two of the best expositions:

Stephen Wolfram: What Is ChatGPT Doing … and Why Does It Work? It’s Just Adding One Word at a Time.

OpenAI’s Andrej Karpathy’s video series, in particular this one: Intro to Large Language Models:
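To make the “one word at a time” idea concrete, here’s a deliberately tiny sketch in Python. It is NOT how ChatGPT works internally (real LLMs use neural networks over tokens, trained on vast datasets): it’s a toy word-pair lookup table that I’ve made up purely to illustrate the generation loop. The function names and the mini corpus are my own assumptions.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, max_words=10, seed=0):
    """Generate text one word at a time by sampling a plausible next word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(max_words - 1):
        candidates = table.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Toy corpus (hypothetical): a real model is trained on trillions of tokens.
corpus = "the cat sat on the mat and the dog sat on the rug"
table = build_bigram_table(corpus)
print(generate(table, "the"))
```

Each pass through the loop looks only at the text so far, picks a likely next word and appends it. Swap the lookup table for a very large neural network and you have the rough shape of what Wolfram describes.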

💪Giants manoeuvring

Training and hosting large AI models costs billions of dollars in people and compute. (See next). So given the huge amount of capital required, there are only a few leading AI makers and challengers pulling in behind OpenAI and Microsoft.

Google, despite having two of the leading AI labs in the world (now combined into one entity: Google Deepmind) seemingly struggled to keep up with the Generative AI race. Mid-year they launched their ChatGPT alternative Bard… to a muted reception. They are also rolling out “Duet” GenAI tools inside their Google Workspace productivity suite. Finally at the end of the year, after much anticipation, Google announced the ship date of their much-hyped ♊Gemini Ultra natively multimodal model to compete with OpenAI’s GPT-4. I asked Bard to summarise for me (click on link):

“Hey Bard, powered by Gemini Pro (Gemini Ultra coming early next year), Summarise the release of Google's Gemini model last week.”

Gemini comes in 3 sizes: “Ultra” (next year), “Pro” (Bard, now) and “Nano” (for deployment on your Android phone starting with the Pixel 8 Pro…). Ultimately, despite its impressive benchmark scores, Gemini Ultra isn’t quite ready yet, while ChatGPT4 (and the underlying GPT-4 API) has been out for nearly 9 months. Google is still trailing far behind OpenAI… expect Sam Altman to announce “GPT4.5” well before Gemini Ultra comes out early in 2024!

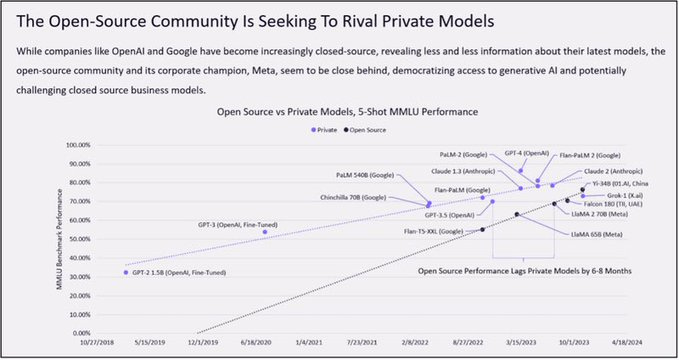

Nonetheless, Google and OpenAI(/Microsoft) are together far out ahead of the chasing pack on LMMs… but open source competitors are biting at their heels…

Meta AI has had a stellar year under the mercurial leadership of Frenchman Yann LeCun. At the beginning of the year they released their own LLM, LLaMa, which instantly accidentally-on-purpose “escaped” into the wild land of open source. Since then, LeCun and Meta AI have been at the forefront of the open source AI movement, relentlessly releasing new multimodal AI models including source code and advocating for open source availability to counter regulatory capture by a few giant tech companies. This has seen a renaissance in Meta’s standing in the technology community (and has also taken the spotlight off their previously stuttering “Metaverse” XR developments). Fascinated to watch how this plays out in 2024.

Amazon… got off to a slow start… but announced a major US$4Bn investment in challenger AI lab Anthropic in September and also announced its own LLM effort Q at AWS re:Invent towards the end of the year.

🍏Apple has joined the chat… meanwhile, the biggest tech firm in the world was nowhere to be seen in GenAI all year… biding time until December, when Apple’s machine learning research team quietly released a framework called MLX to build foundation AI models on its own silicon.

In China, the major tech companies appear to have lagged behind OpenAI and other western labs - Baidu is out in front with its “Ernie” chatbot but despite claims to beat GPT-4 on some benchmarks, the results are opaque so far.

Selling shovels to miners AI chip firm NVidia was the biggest winner from the 2023 GenAI boom, joining the select ranks of companies valued over US$1 Trillion. Nvidia’s Q3 results once again exceeded analyst projections, with demand for the firm’s GPUs exceeding supply thanks to the rise of generative AI. The firm’s revenue grew 206% year over year during the quarter ending Oct 29, with net income at US$9.24 billion up from US$680 million in the same quarter a year ago. Amazing, consistent execution.
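For a sense of scale, here’s the back-of-envelope arithmetic behind those Nvidia numbers as a quick Python sketch. The inputs are just the figures quoted above; the helper function names are my own.

```python
def growth_multiple(current, previous):
    """How many times larger the current value is than the previous one."""
    return current / previous


def pct_change(current, previous):
    """Percentage change from the previous value to the current one."""
    return (current - previous) / previous * 100


# Figures as quoted in the text (US$).
net_income_now = 9.24e9   # quarter ending Oct 29, 2023
net_income_prev = 0.68e9  # same quarter a year earlier

multiple = growth_multiple(net_income_now, net_income_prev)
change = pct_change(net_income_now, net_income_prev)

# A 206% year-over-year revenue increase means revenue is 1 + 2.06 times
# what it was a year earlier.
revenue_multiple = 1 + 206 / 100

print(f"Net income: {multiple:.1f}x, i.e. up {change:.0f}%")
print(f"Revenue: {revenue_multiple:.2f}x the year-ago quarter")
```

In other words: revenue roughly tripled while net income grew more than thirteen-fold.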

🥈Other challenger labs

Going into 2024, there are a bunch of innovative challengers in the GenAI space:

LLMs Anthropic (Claude), Inflection (Pi) and open source labs StabilityAI and Mistral (the only European player)

Image/video as mentioned above, Midjourney, StabilityAI, Runway, and new entrant Pika

Search Perplexity, Metaphor

Avatars In November I spoke at the Aotearoa Tourism Summit where I demo’d my first go at creating a personal avatar using Heygen with automatic translation… absolutely seamless tech Joshua Xu and team have created here. The quick test below took me 30 seconds of recording on my laptop webcam in my badly lit home office to train the low-res avatar model… then I used ChatGPT to write and translate a short 1-sentence script into 5 languages and pasted that into Heygen… and the final video was ready in under 2 minutes, mouth movements and hand gestures near-perfect. Wow.

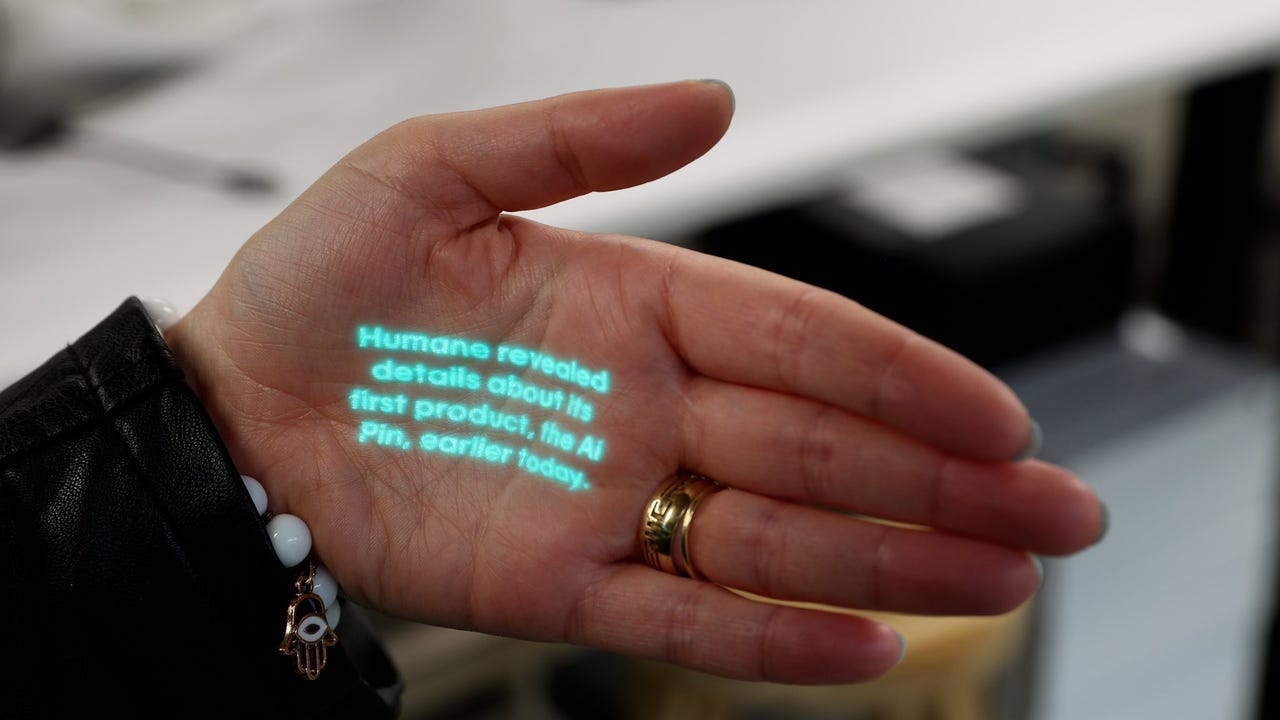

Devices The quest is on for the world’s first AI-native device. First candidate: the ex-Apple founding team at Humane … with their voice-operated “Pin” and very weird launch video:

💥Bubble dynamics

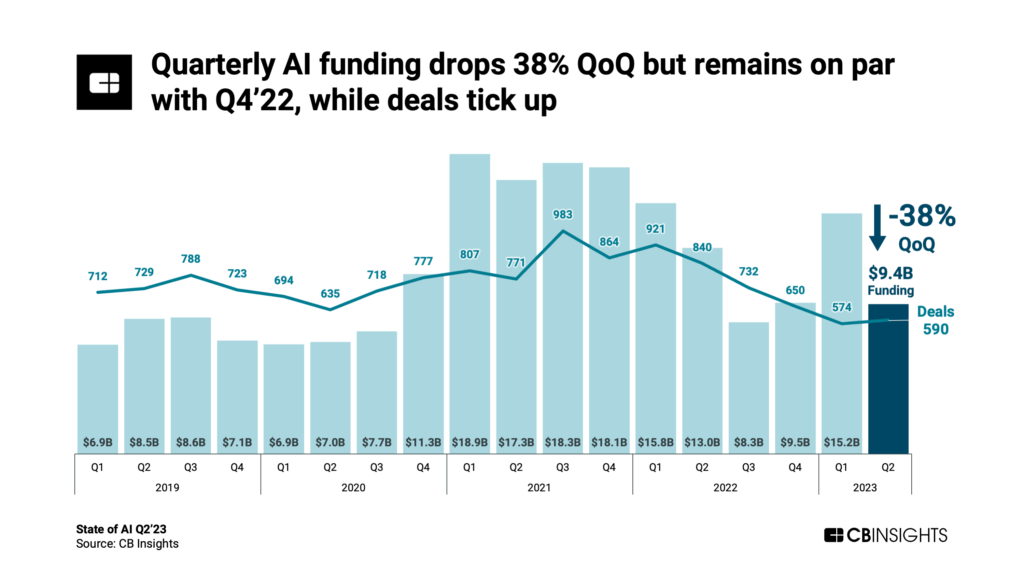

The Generative AI boom has been funded by a massive influx of venture capital into the space in the last few years. After 2 years of huge up front investment and mega-deals, the latest data from CB Insights indicates that global funding to AI companies dropped 12% quarter-on-quarter to US$8.3B in Q3’23, while deal count fell 18% to 501, the lowest quarterly level since 2017.
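As a sanity check, the percentage drops quoted from CB Insights can be run backwards to estimate the previous quarter’s figures. A minimal Python sketch: the function name is my own, and the results are implied estimates from the rounded percentages, not numbers taken from the report.

```python
def value_before_drop(value_after, pct_drop):
    """Recover the starting value given the value after a percentage drop."""
    return value_after / (1 - pct_drop / 100)


# Q3'23 figures as quoted in the text.
funding_q3 = 8.3   # US$ billions, after a 12% drop
deals_q3 = 501     # deal count, after an 18% fall

implied_funding_q2 = value_before_drop(funding_q3, 12)
implied_deals_q2 = value_before_drop(deals_q3, 18)

print(f"Implied Q2 funding: US${implied_funding_q2:.2f}B")
print(f"Implied Q2 deal count: {implied_deals_q2:.0f}")
```

So the implied Q2 baseline is roughly US$9.4B across around 610 deals.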

(Here’s the graph to Q2:)

The question now is: how will these investors make their money?

@a16z published a helpful breakdown of the Generative AI Tech Stack and the value opportunities in Who Owns the Generative AI Platform?

“There don’t appear, today, to be any systemic moats in generative AI. As a first-order approximation, applications lack strong product differentiation because they use similar models; models face unclear long-term differentiation because they are trained on similar datasets with similar architectures; cloud providers lack deep technical differentiation because they run the same GPUs; and even the hardware companies manufacture their chips at the same fabs.

…Based on the available data, it’s just not clear if there will be a long-term, winner-take-all dynamic in generative AI.“

There could well be a few bargain teams up for grabs in 2024… Recent stories of investors losing their bottle amid a management exodus at Stability.ai (at the same time as the firm is nailing it on execution with its new model releases) may just be the first noises of the bubble bursting…

👥Societal impacts

Later on I’ll talk about this year’s raging AI safety and regulation debates, but the GenAI boom has caused four main disruptions worth capturing:

Copyright The ability to get an AI model to “design a building in the style of Zaha Hadid” or “Mash up Wes Anderson and Star Wars” has buckled our traditional inherited notions of copyright and IP ownership. Fundamentally: is using copyrighted material to train an AI model considered “fair use”? (if indeed your country has such a legal concept at all). This year has seen a clutch of class actions against large AI labs which aim to determine the answer to this question… expect the legal wrangling to go on for years… by which time the question will become meaningless as all AI models will be trained on larger, open source and (if absolutely necessary) machine-generated datasets… (My 2c: if your content is not in the training set…you will be lost to future history.)

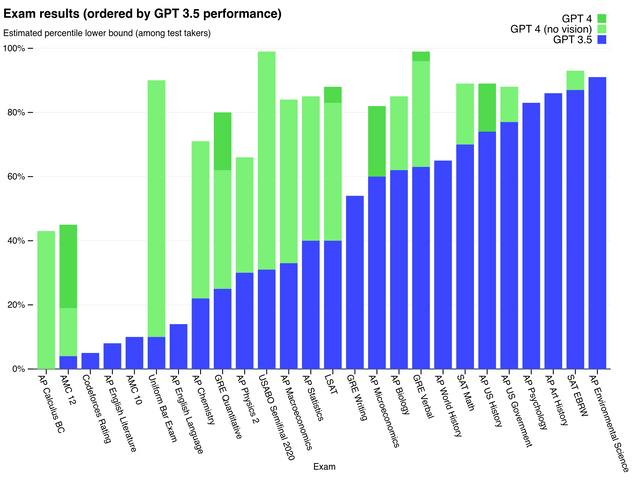

Exams In March, when OpenAI released their new language model GPT-4… it showed a massive step change in exam performance relative to GPT-3.5:

Headlines such as “ChatGPT aces Bar Exam” and “ChatGPT passes medical exam” proliferated… but the significance of these results is yet to filter into workforce reality. Yet.

Meanwhile the (performative and ultimately futile…?) attempts to detect AI-generated content in student essays faltered… OpenAI themselves launched a “New AI classifier for indicating AI-written text”… but quietly discontinued it as it was found to be very error prone. (See AI vs. Education below for more).

Misinformation GenAI started to get into the hands of mis- and dis-information peddlers, including politicians. Here’s an ad by the New Zealand National Party (who won — just — the October election) featuring an AI-generated ramraid:

What do we think of this? I spoke at length to RNZ on the topic: This election year, we need to brace ourselves for AI.

🤌Finally, here’s an unexpected outcome of this year’s image generation models, which still struggle to create hands with the right number of fingers:

🔮Looking forward to 2024

I generally refrain from making predictions in these end-of-year posts, but I think there are some clear trends in Generative AI heading into 2024:

A funding crunch will drive consolidation among frontier AI labs and big tech companies will start acquiring some of the smaller companies.

The pace of innovation will continue… and perhaps accelerate. Expect weekly releases of all sorts of new GenAI modes and tools which enable creators to operate across any possible field of information.

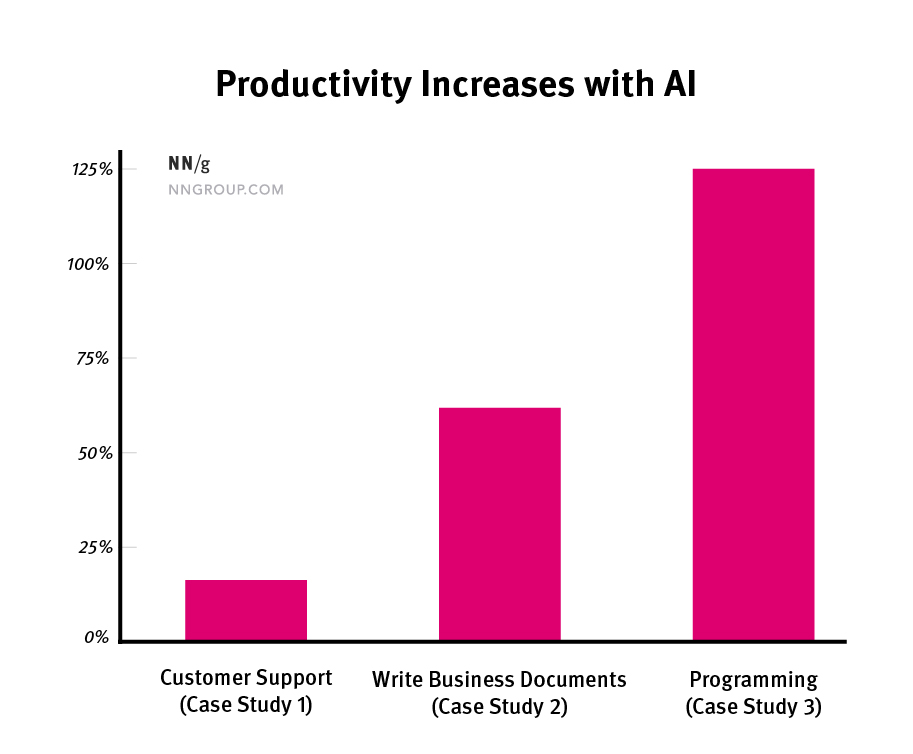

GenAI will become embedded into the productivity software which we use every day… anyone not using it will find their job threatened by super-productive human+AI “centaurs”.

The first full-length AI-generated movies will be released with budgets less than a few thousand dollars. A few will be really good.

GenAI bank scams will scale up: millions of people will be persuaded to send money urgently to their “relative” calling in an “emergency”.

There will be several other attempts at creating an “AI-native” device form factor. None will quite catch.

The cost of using AI will go up: scaling GenAI out to the rest of the world will increase energy demand hugely - AI could soon need as much electricity as an entire country. These costs will need to be passed on to end-users. (2024 will also see big announcements in improved energy consumption and sustainability of chips used for AI training and inference…)

This will drive smaller AI models to become more powerful and energy-efficient - already you can run LLaMa (and derivatives), and other open source models locally (disconnected from the internet) on your smartphone: by the end of 2024, a GPT-4 equivalent model may be available to download and run locally. Effectively: the “compression of all humanity” on your own phone or laptop.

Finally: OpenAI may release “GPT-5” (or, more likely, another moniker) and announce they have “achieved AGI” (artificial general intelligence) but by then the concept of “AGI” will be so watered-down that everyone will just look up briefly and carry on.

⏭️🧪4. AI accelerating science

Possibly the most exciting consequence of AI that we’ve yet to fully grasp (Deepmind Alphafold just the first glimpse…) is its ability to accelerate scientific discovery, which I expect could happen beyond a speed humanity has even dreamed about until now.

Alphabet and its subsidiary Google (Deepmind) have been leading the way on this:

🔤🌾Alphabet does Ag

Google parent Alphabet launched Mineral, an agtech data analytics business it has been incubating in stealth within its “moonshot factory” X (no relation!) since 2017. The data analytics company claimed to have already surveyed and analysed 10% of the world’s farmland: aiming to provide farmers with “per plant” data sets.

Alphabet has been searching around for another revenue model other than internet advertising for years… perhaps food data is it.

🧬AlphaMissense

3 years after the 2020 announcement by Google AI subsidiary Deepmind that its deep learning network Alphafold had largely solved the Protein Folding Problem that had stood as a “grand challenge in biology for the past 50 years” (recognised as one of the biggest breakthroughs in biological science ever), Deepmind provided some updates in 2023:

Unfolded is an informative series of profiles on the scientists using the Alphafold protein-folding AI model:

“Millions of researchers around the world are using our AI system, AlphaFold, to accelerate progress in biology. From helping tackle antibiotic resistance to finding new life-saving medicines, AlphaFold quickly became an essential tool for the scientific community, since launching in 2021. Unfolded introduces scientists who are using AlphaFold to unlock challenges, accelerate progress, multiply hypotheses, and pave the way toward new discoveries. Here are some of their stories...“

🧬AlphaMissense is a new tool based on AlphaFold which can accurately predict which protein mutations are likely to cause disease:

💎GNoME acceleration

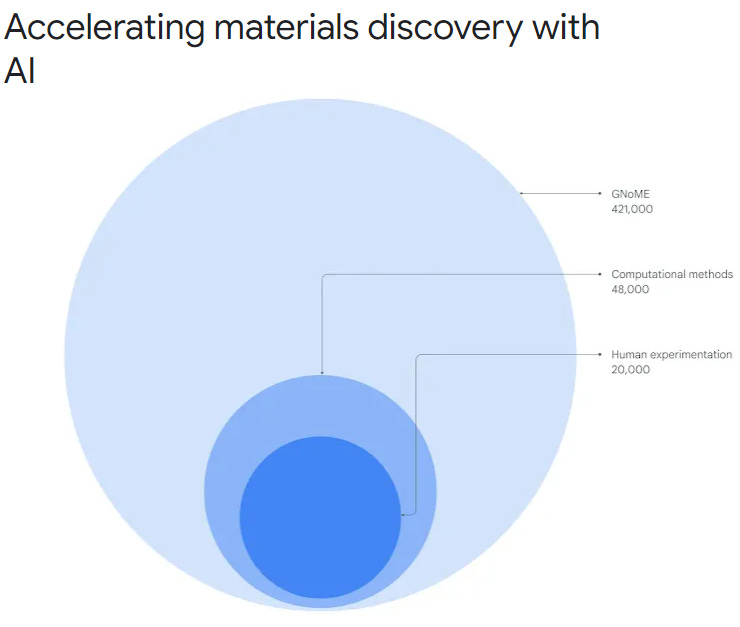

Future materials science got a leg up in November when Google Deepmind published their new AI tool GNoME:

“Today, in a paper published in Nature, we share the discovery of 2.2 million new crystals – equivalent to nearly 800 years’ worth of knowledge. We introduce Graph Networks for Materials Exploration (GNoME), our new deep learning tool that dramatically increases the speed and efficiency of discovery by predicting the stability of new materials.

With GNoME, we’ve multiplied the number of technologically viable materials known to humanity. Of its 2.2 million predictions, 380,000 are the most stable, making them promising candidates for experimental synthesis. Among these candidates are materials that have the potential to develop future transformative technologies ranging from superconductors, powering supercomputers, and next-generation batteries to boost the efficiency of electric vehicles.“

(It’s hard to grasp the implied impact of this: in just one paper and one AI model release, materials science has leaped forward 800 years…)

“Materials science to me is basically where abstract thought meets the physical universe…It’s hard to imagine any technology that wouldn’t improve with better materials in them.” — Ekin Dogus Cubuk, a co-author of the paper.

🌙Schmidt science moonshot

Former Google CEO Eric Schmidt announced he was launching a non-profit AI-science moonshot … watch this space, I guess.

Outside Alphabet:

🙄xAI

Even Elon Musk announced his new AI firm xAI had the goal to:

“understand the true nature of the universe”.

(…but then promptly put out Grok, a chatbot with all the humour of your cringey boomer uncle… Jury most definitely out.)

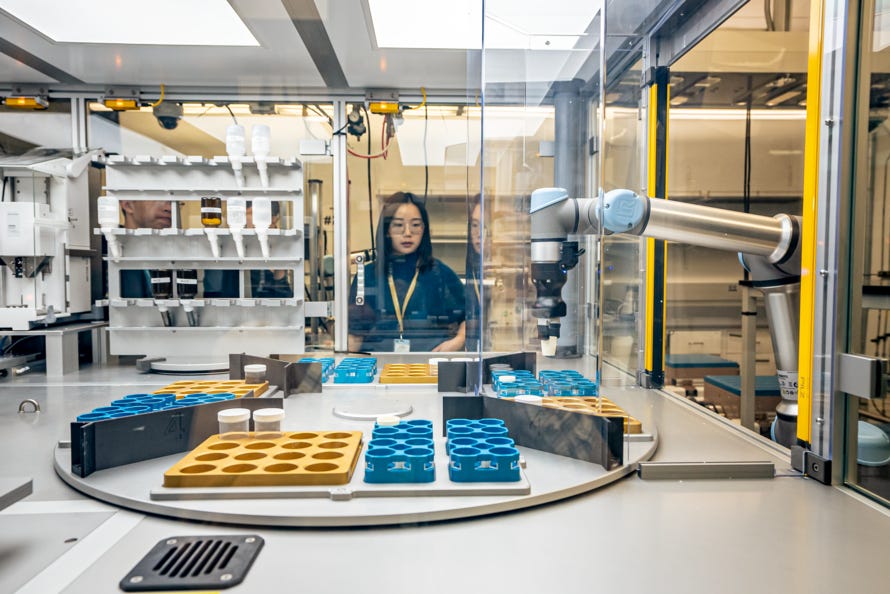

🕒🔬24/7 AI materials science lab

Berkeley Lab announced A Lab - The Autonomous Lab of the Future to speed up materials science discovery:

“Robots operate instruments and artificial intelligence makes decisions to find useful new materials at the A-Lab“

🗺️A map of every conceivable molecule?

A story in NewScientist about how a map of every conceivable molecule could be possible with AI to accelerate the discovery of new compounds for everything from drugs to materials. (BUT beware malicious uses…)

💊Drugs designed by GenAI

British biotech company Etcembly revealed the world’s first immunotherapy drug designed using generative AI:

“At the heart of the discovery is EMLy – a sophisticated supercomputer that uses cutting-edge machine learning algorithms to scan through huge datasets to learn the ‘language’ of TCRs (T Cell Receptors) and find the best receptor for a given target.

A generative large language model (LLM) similar to ChatGPT is then used to ‘rewrite’ the genetic code for the TCR in order to make it as effective as possible. Finally, this code is validated experimentally in the lab where it is used to make real life TCR-based drugs for testing.“

💡Lack of “Useful” Innovation no more?

2023 started with an extensive article full of academic schadenfreude on the bursting of the tech bubble in 2022: Web3, The Metaverse and the Lack of Useful Innovation:

“…Our question is whether newly hyped technologies, like the Metaverse, Web3, and blockchain, have any chance of changing [human economic wellbeing]. There are many reasons to be skeptical that they can. In many ways, the Metaverse and Web3 are merely a pivot by Silicon Valley, an attempt to gain control of the technological narrative that is now spiraling downward, due to the huge start-up losses and the financial failure of the sharing economy and many new technologies. …If we are correct that the newest wave of hot technologies will do almost nothing to improve human welfare and productivity growth, then elected officials, policymakers, leaders in business and higher education, and ordinary citizens must begin to search for more fundamental solutions to our current economic and social ills.”

2024 will be the year of AI accelerating deep science and technological discovery… strap in.

🥽📲🔋5. Tech advances gradually... then all at once

Even though the year has been dominated by AI, every week I have captured a clutch of “[Weak] signals” - technological advances at the frontier of R&D which could fundamentally change the world we live in.

There have been far too many to replay them all here, but here are some of the highlights:

🛰️Internet everywhere

Snapdragon Satellite At CES 2023, Qualcomm announced a new feature for next-generation Android phones: Snapdragon Satellite, a two-way satellite text messaging service using the Iridium satellite constellation. It is due to be released with new high-end smartphones later in 2023, but most phones already have an antenna for this.

(Apple’s iPhone 14 already comes with emergency-only satellite messaging in US and Canada).

SpaceX also provided more details of its Starlink-for-phones service which it is planning to launch next year, claiming there will be text service starting in 2024, voice and data in 2025, and "IoT" service in 2025. Partner launch networks include Optus in Australia and One NZ (formerly Vodafone) in Aotearoa.

Starlink via Arstechnica

🥽eXtended Reality becoming reality

After years of perpetual disappointment and the sense that XR hardware and content would never live up to expectations, 2023 showed that a fully immersive “metaverse” is just around the corner.

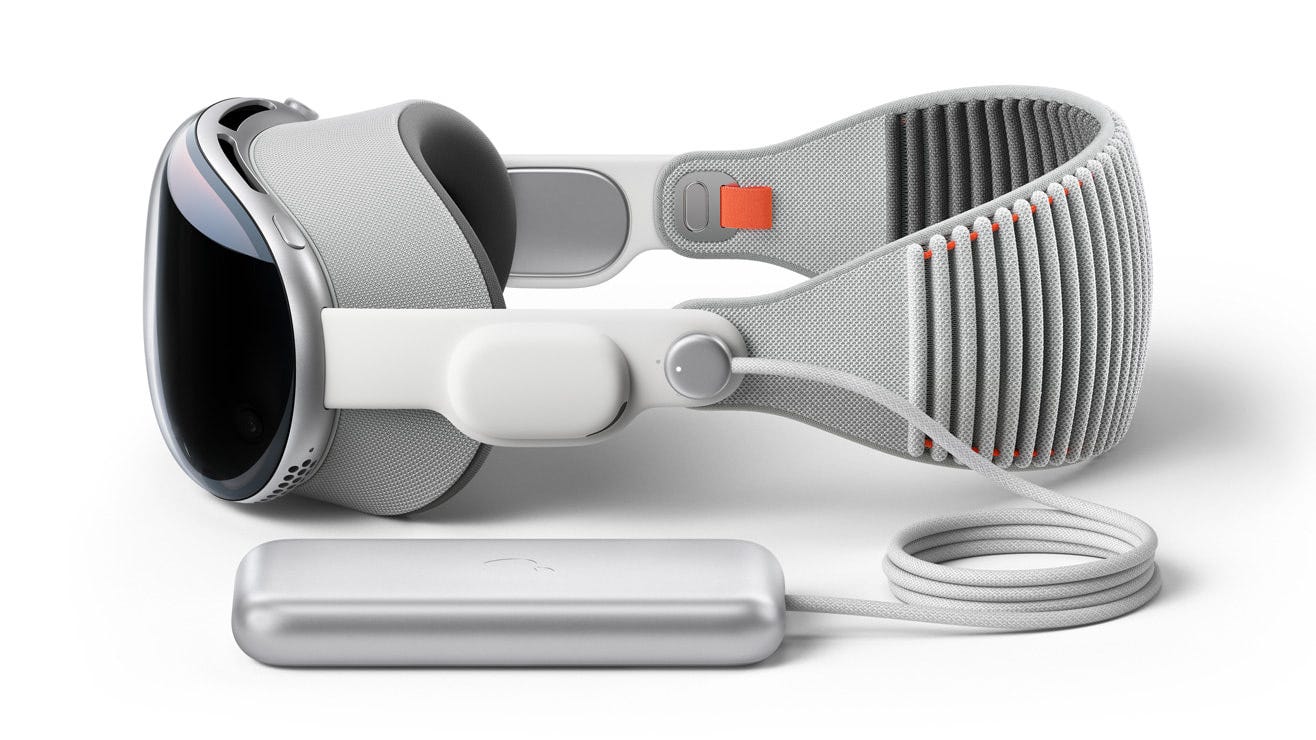

Behold a wondrous Vision (Pro): After years of rumours and anticipation, Apple finally unveiled their entry device into the world of XR at WWDC23: the Vision Pro:

It looks good, setting a very high bar for competitors, but it’s also extremely pricy at US$3499. Also, this was just an announcement….the devices won’t be available until Q1 2024 at the earliest. Key data points:

No external controllers - eye tracking and gesture control only

Augmented, NOT Virtual reality. The “passthrough” glass experience is a design feature - you can see the environment around you AND a projection of your eyes is visible to people looking at you… bit weird?

A new ground-up spatial operating system: visionOS

Incredible display resolution: the micro-OLED screen fits 64 pixels in the space of 1 iPhone pixel. 23 million pixels across the two panels - more than a 4K screen for each eye.

Perhaps the thing which will take people longest to get used to… Facetime videoconferencing works seamlessly - but animates an avatar of your face (without headset) for other users to see.

Great for watching (3D) movies at home on a huge “virtual” screen.

Reviewer feedback from the few who have tried it on was generally enthusiastic:

Nilay Patel in The Verge: I wore the Apple Vision Pro. It’s the best headset demo ever.

Om Malik: My ten takeaways from WWDC 2023

“When Sony launched the Walkman, it gave music feet. The iPod took that idea further into the digital realm and trained the mainstream to expect any music anytime, anywhere. It also accelerated the “headphone” culture, where we slowly receded into our cocoons — perhaps becoming less social...The Vision Pro will do the same for video content and make us much less social, no matter what Apple’s demos try to project…. We will see our behaviors change and impact our society — just like iPod and the iPhone.”

Probably the key point is that at such a high “pro” price, it will be interesting to see how many of these devices fly off the shelves… Surely at WWDC24 next year we will get to meet “Vision Pleb” (or equivalent) ready for Xmas 2024 for under US$1K.

🥽Me(ta) too! Oh, and surely just coincidental timing, Meta announced their Quest 3 VR-only headset a few days earlier. Priced at a far more accessible US$499 and now already shipping:

I tried one on at this year’s inaugural SXSW Sydney conference and was pretty blown away: the Quest 3 is a big step up from the Quest 2 - higher pixel resolution and motion tracking, the AR pass-through (sort-of) works (at least well enough to take a selfie) and overall the whole device is far lighter and more comfortable. I could definitely see myself wearing this for a few hours at a time now… not perfect yet, but impressive for US$500, definitely the market leader.

😮😮Oh wow (virtual reality edition) Meta Reality Labs have been beavering away all year…after previewing their Codec Avatar technology in 2022, Mark Zuckerberg took it for a full outing recording the first-ever podcast with photorealistic avatars in virtual reality with Lex Fridman. Here he is driving a full-body avatar in the “metaverse”:

Although this generation of the technology requires an advanced full-body 3D scan, Zuckerberg envisions a time soon when users will be able to just use their phone camera for 30 seconds to scan their avatar… in fact they’ll just make up their avatar…

This is amazing technology that’s being developed at Meta. The AI wave has helped take a bunch of attention off last year’s “Metaverse” industry hype cycle and allowed them to get on with just building.

NVidia’s Jim Fan had this take:

“Keep in mind, what we see here is the worst that this tech will ever be.“

I predict that 2024 will be the year when eXtended Reality crosses over to become *more engaging* than reality: at which point, why would anyone take the headset off?

✨New AI device UIs

📱There were rumours that Sam Altman was courting legendary iPhone designer Jony Ive to create an AI-first device: original iPhone designer Jony Ive is in talks with OpenAI to raise $1bn from SoftBank for a new AI device venture.

Think about it - OpenAI’s AI models are never going to get valuable real estate on Google (Android) or Apple (iPhone) devices… and its key partner and investor Microsoft famously lost that battle… Like Meta, OpenAI needs a device strategy to be able to control its full stack. But while Meta has gone all-in on VR and AR… OpenAI has time now to completely re-imagine what the next generation of AI-first devices may look like.

Startups like Humane (see above and pic below) have been indicating one direction…the voice and projection UX may be a hint of things to come… but I’m not convinced. Feels too early.

…but I’m fascinated at the wide-open idea space here. What are items number 6-10 on the following list?

🦗Crypto (…crickets)

It’s all gone quiet The world of Web3 and Crypto stayed quiet, with seemingly very low levels of funding entering the space and ongoing fallout from 2021’s Ponzi dynamics still playing out:

The 2022 collapse of FTX, the world’s 2nd largest cryptocurrency exchange, with a US$8Bn liquidity shortfall, resulted in founder Sam Bankman-Fried being tried and found guilty on all seven charges including fraud, money laundering, and conspiracy. Bankman-Fried now faces the possibility of a lengthy prison sentence, with a maximum of 120 years in jail.

Also at the end of the year, Changpeng Zhao (CZ), the founder and CEO of Binance, the world’s largest crypto exchange, pleaded guilty to money laundering violations and resigned as part of a settlement with the U.S. Department of Justice and regulators. The settlement involves a US$4 billion fine; CZ faces the possibility of a prison sentence and a $50 million fine. But Binance continues in business under CZ’s successor.

It’s all gone quiet(ly) up Meanwhile, as the US national debt soared above US$33 Trillion, cryptocurrencies Bitcoin, Ethereum and others continued to head back up in value. BTC currently sitting at over US$42K - nearly a 3X increase since its low in January:

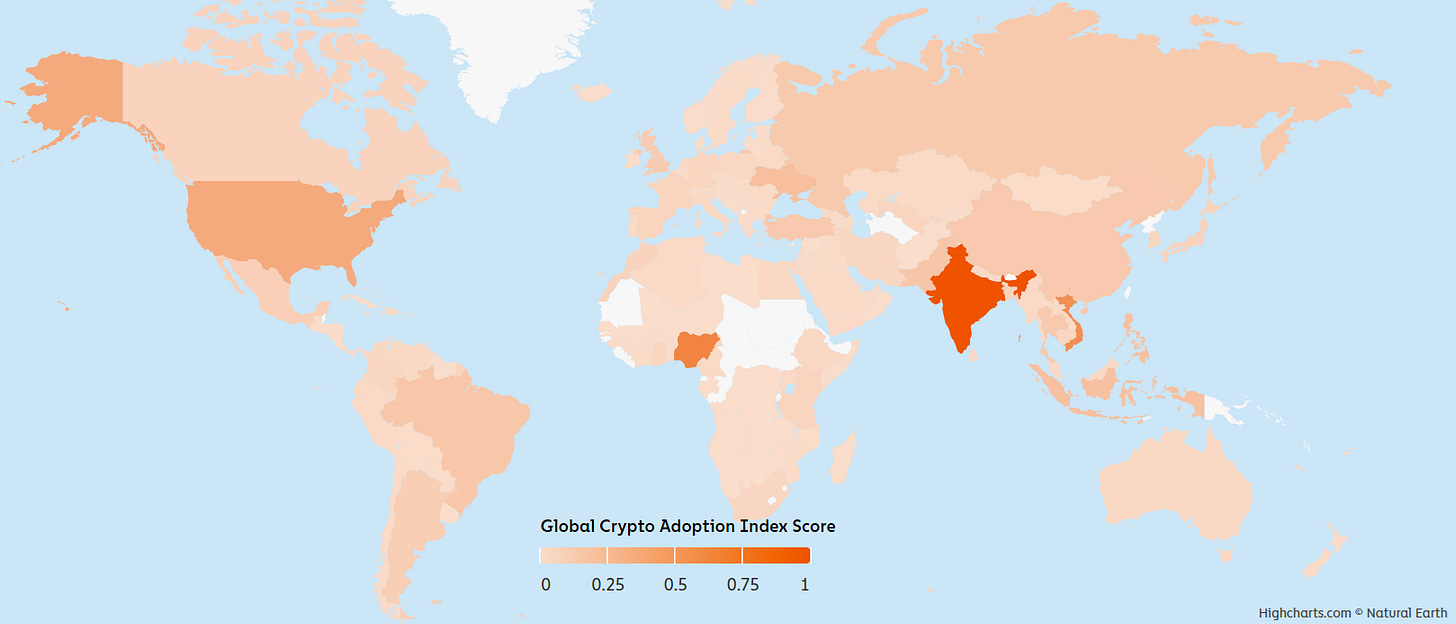

With Layer 2 payment apps knocking at the door… very soon I am expecting decentralised cryptocurrencies to see more rapid mainstream adoption as simply *better* technology for managing “money”, led by developing countries, particularly in Africa and South Asia:

Attempts by the US SEC and other regulators to place overly strict restrictions on crypto use may simply result in suppressing innovation and cutting the US off from new financial innovations happening around the world.

e-CNY up too Meanwhile, over in China, the state-backed Digital Yuan CBDC (e-CNY) continued to grow in footprint, with transactions reaching 1.8 trillion yuan ($249.33 billion) as of July 2023. But the digital yuan project still faces challenges, including breaking open the private sector digital payments market dominated almost entirely by Alipay and WeChat Pay.

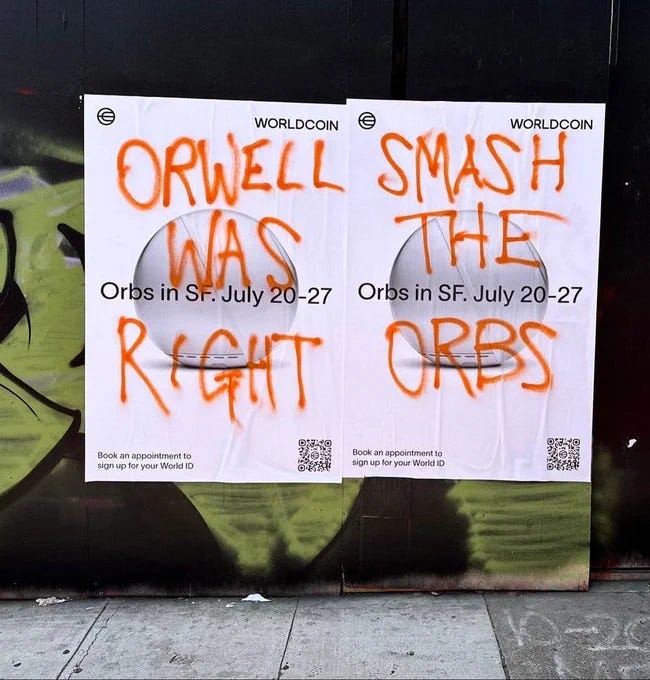

👁️Orbing man of the moment Sam Altman can’t keep himself out of the news. One of his other ventures Worldcoin (first covered in Memia 2021.42), makers of the not-dystopian-at-all crypto iris scanner, launched its much-anticipated World ID verification program.

(Am I the only one to get a sneaking sense that Altman’s investment portfolio is a coordinated pincer movement to take down global capitalism in just a few years…?)

A few weeks into Worldcoin’s “identity and financial network” global rollout…

The Orb iris scanning campaigns are going well, then:

Worldcoin: “We want government to use our ID system”

Governments: Cease and desist, we have BIG privacy concerns. (Worldcoin’s Orb scans were suspended in Kenya …won’t be the last).

🧠BCIs

Switching gears, the development of Brain-Computer Interfaces progressed significantly in 2023. Although Neuralink and other invasive tech continued to move forward, the focus has been largely on what can be done with non-invasive devices:

Galea OpenBCI launched their new headset Galea: a bridge between mixed reality and neurotechnology. Will we all be wearing one of these in a few years….?!

(Interesting signal… OpenBCI has a partnership with Finnish XR technology leader Varjo)

Mind reading MindEye is the latest “mind reading” AI research project using fMRI imaging and generative AI image models, which is showing even higher performance than others:

“[MindEye uses] a novel fMRI-to-image approach to retrieve and reconstruct viewed images from brain activity. …MindEye can map fMRI brain activity to any high dimensional multimodal latent space, like CLIP image space, enabling image reconstruction using generative models that accept embeddings from this latent space“

🤯Cooking with telepathy NOIR: Neural Signal Operated Intelligent Robots for Everyday Activities. Just…wow….

Thought-to-text UK startup Mindportal released a sketchy video and PR release supposedly demonstrating the first “thought-to-text” conversation with an LLM using a non-invasive BCI. Colour me sceptical but *if* this is real, the future has arrived a bit quicker than I was anticipating…

🧠🔬Brain-in-a-box Australian startup Cortical Labs is a hybrid bio/digital chip firm which released research at the end of last year on how In vitro neurons learn and exhibit sentience when embodied in a simulated game-world:

“DishBrain [is] a system that harnesses the inherent adaptive computation of neurons in a structured environment. In vitro neural networks from human or rodent origins are integrated with in silico computing via a high-density multielectrode array. Through electrophysiological stimulation and recording, cultures are embedded in a simulated game-world, mimicking the arcade game “Pong.””

I was fortunate enough to hear Cortical Founder and CEO Hon Weng Chong at SXSW Sydney as he took us through their progress since then: showing the “CL1 Desktop System” which is effectively a “life support device” for a live brain / computer device… outside a skull.

And finally, a thought experiment… what if these devices could all be stacked into a cloud data centre…?

The implications of this are truly incredible… this is a completely different approach to brain computer interfaces: instead of inserting the devices inside the brain, you grow the brain around the device… Profound.

Organoids Just as I was writing this section, news broke of an experiment in which “organoid” Human Brain Cells on a Chip Can Recognize Speech And Do Simple Math. Wow.

🦿🦾Robots rising

I have been progressively surprised at the speed of advancement made in robotics throughout 2023.

Autonomy Cruise and Waymo rolled out their driverless (“robotaxi”) deployments in San Francisco and Austin, although not without issues:

After an incident where a vehicle dragged a person along the street, Cruise had its self-driving licence in SF revoked and in December laid off 900 workers with investigation into the crash ongoing.

Meanwhile UK startup Wayve has been advancing the field in Intelligent vehicle autonomy, rolling out some impressive AI model demos all year:

“Our solution revolutionises automated driving by leveraging end-to-end deep learning, eliminating costly robotic stacks and complex mapping. With our embodied AI software, vehicles learn from experience, adapt to any environment, and drive without explicit programming.“

Menagerie robot form factors have proliferated, just two examples:

Butler robots are now being deployed in hotels and hospitality

Revolute here’s a new robot body plan I haven’t seen before: a flying sphere with gyroscopic motion… (See Revolute Robotics website)

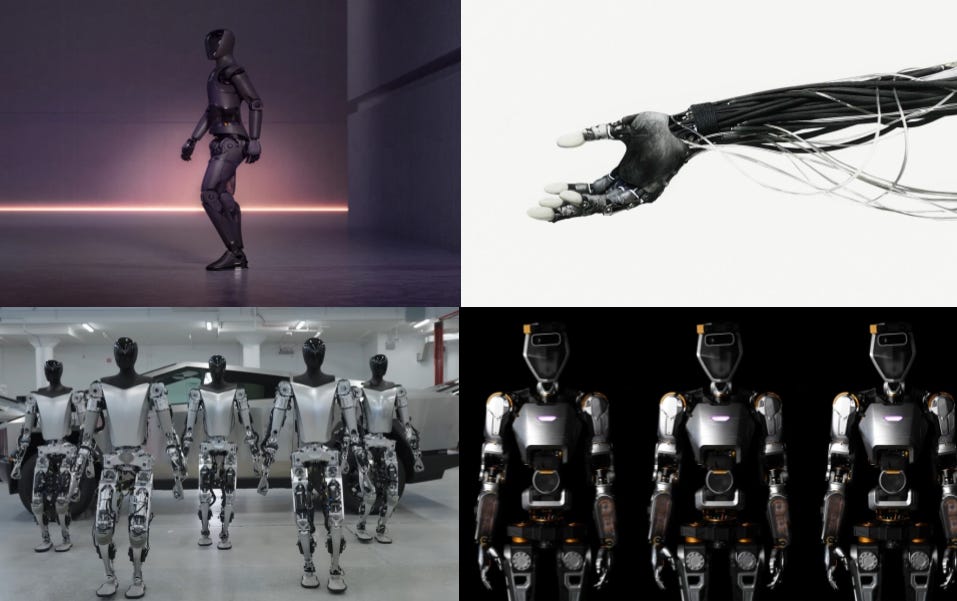

Humanoids are here It may be that robotic body plans converge in future on a more familiar shape: for the greatest possible utility in a world designed and built for humans, perhaps the robots of the future will take on a humanoid form?

In addition to Boston Dynamics’ Atlas robot (best known for its dancing and parkour skills), several companies are developing advanced humanoid robots to work alongside - and perhaps replace - humans in future.

(Clockwise from top left: humanoid robot prototypes from Figure AI, Clone Robotics, Sanctuary AI and Tesla) Perhaps most striking of these is Clone Robotics, building “the world's first … low-cost, biomimetic, and intelligent androids, trained to perform all the common labour for daily life.“ Their “hand”, powered by Clone’s proprietary hydraulic muscles and valves is eerily anthropomorphic, bringing to mind the perfect human replicas in the TV series Westworld.

Comeback of the year went to Tesla: who went from this shamefully awful Optimus robot reveal at the end of last year: a trio of T-shirted engineers holding the prototype up and moving its legs to help it walk was top comedy straight out of HBO’s Silicon Valley…

… to this: Optimus Gen 1 showing more intelligent manual dexterity than any others out there and even pulling off yoga poses:

…and just last week, to this: Optimus Gen 2:

Here are Elon Musk’s comments at the Tesla shareholder meeting in May this year:

“say … you have a generalized humanoid robot. What would be the effective ratio of humanoid robots to humans? Because I think basically everyone would want one and maybe people would want more than one. Which means the actual demand for something like Optimus if it really works — which it will — is, I don't know …10 billion units. It's some crazy number it might be 20 billion units If the ratio is say two to one on people ... It's some very big number is what I'm saying. And a number vastly in excess of the number of cars. So my prediction is that Tesla's … majority of long-term value will be Optimus. And that prediction I'm very confident of.”

Autonomous (swarming) weapons A future with swarms of autonomous lethal drones scares me.

🐝Black Hornet The FLIR Black Hornet is among the smallest. Demo:

From a defence point of view, the question no-one is talking about out loud is: what is the threat posed by swarms of miniature natural-looking airborne devices above your country? (And what can we all do about it…? Clue: a moratorium on killer drones would be a start.)

China’s military-industrial complex is already working on a list of technologies needed to fight off swarms of Unmanned Aircraft Systems (UAS). (Including the LW-30 Laser Defense System).

One bright spot on the horizon: one of the main items on this year’s US/China Summit agenda was a pledge to ban AI in autonomous weapons - eg. drones and, er, nuclear warhead control systems. However, this falls a long way short of a broader consensus on other military matters…:

“Sources informed the [South China Morning] Post that there has been no meeting of the minds on several controversial issues, including Ukraine and Israel; Taiwan, which is set for presidential elections in January; and the PLA Navy’s activities in the South China Sea, affecting neighbouring countries like the Philippines.” —SCMP

Watching the accelerated development of new lethal autonomous drones in Ukraine and elsewhere, this trend will only head in one (swarm) direction if it’s not covered by a multilateral treaty. (Although the tech is now so accessible…how could anyone stop non-state actors from using it…?) As a reminder of why this is so important, here’s a video from the Campaign To Stop Killer Robots — from 5 years ago, even more scarily real with today’s technology.

And this short 8-minute very dark sci-fi film “Slaughterbots” shows even more clearly what would inevitably follow without international regulation:

For an indication of just how close these (and many other) technologies are (and whose hands they’re already in), this PirateWires interview between Mike Solana and the legendary Palmer Luckey (original founder of Oculus and now running private US autonomous military technology company Anduril) goes to some pretty dark places.

Are you a robot? Pretty dark take, here:

via @tomairangi

🎨3D printing

Tallest The tallest 3D-printed concrete building in the world is now up in Saudi Arabia: 3 storeys high, completed in 26 days and claiming 30% energy saving on traditional construction.

Highest resolution California-based nano3Dprint launched their D4200S printer, capable of printing with a 20-nanometer resolution, making it the highest resolution of any additive manufacturing system currently available.

🌞🔋Renewable energy

Lots of new developments in renewables, mentioning just a few highlights:

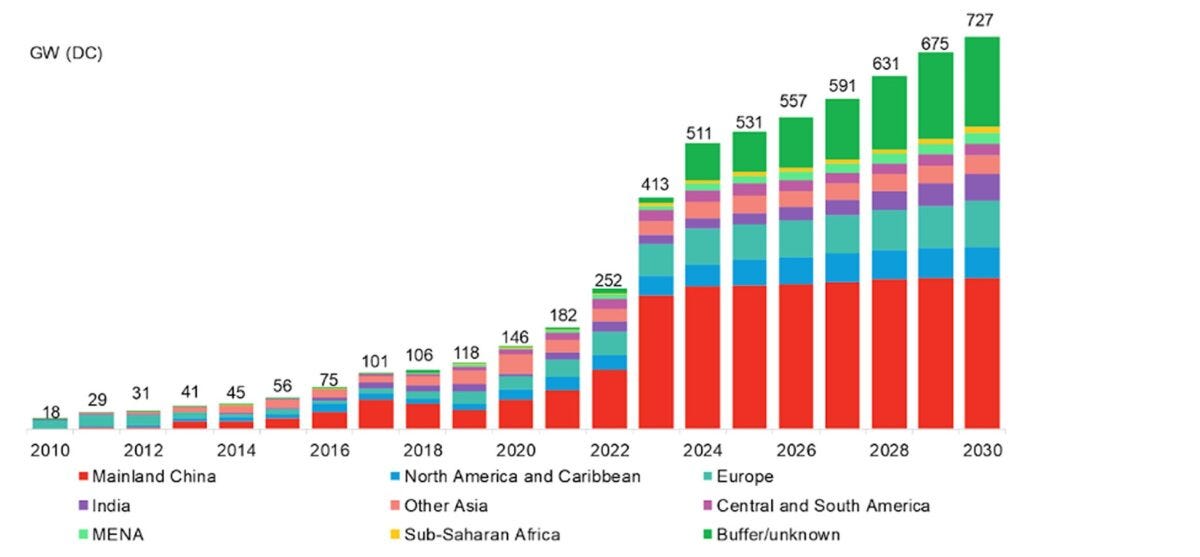

New solar installations in 2023 are way ahead of forecasts, on target to hit 413 GW this year, says BloombergNEF, driven mainly by a massive 240 GW buildout by China, up over 58% from 2022:

Lithium bubble After concerns that the world would face a chronic lithium shortage in 2021/2022 caused a massive spike in lithium commodity prices… lithium prices are back to where they were in late 2021.

New battery tech Japanese car manufacturer Toyota announced a solid-state car battery breakthrough which they think could halve the cost and size of car batteries, aiming to include the technology in electric vehicles by 2027.

⚛️Place your nuclear fusion bets

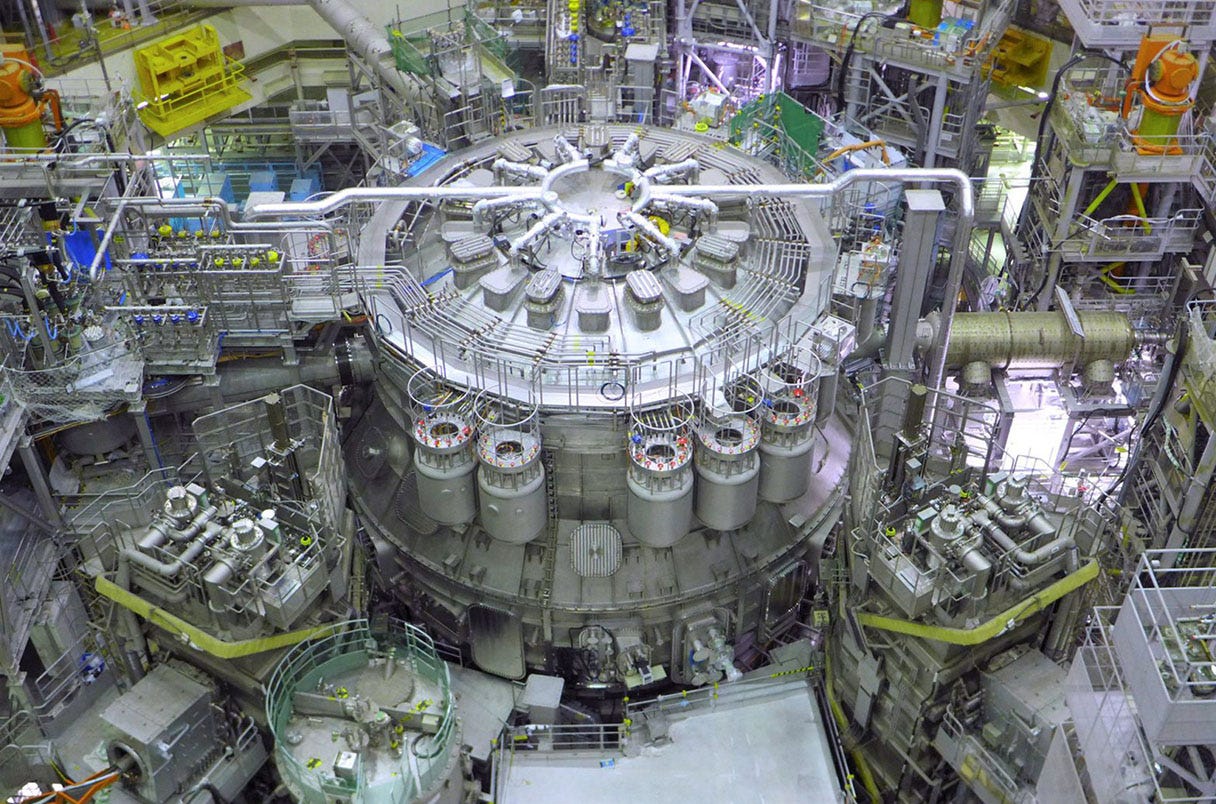

China is working on its own large fusion power-generating tokamak, the China Fusion Engineering Test Reactor (CFETR), which could also serve as the core of a hybrid fusion-fission reactor, in which neutrons from the fusion reactions would be used to drive fission reactions in a “subcritical blanket”.

Fusion ignition for the second time: US scientists at the Lawrence Livermore National Laboratory announced they had successfully repeated their fusion ignition breakthrough experiment from December 2022 - achieving net energy gain in a fusion reaction on July 30 and producing a higher energy yield than in December. Still a long way to go.

Japan’s four-storey-high JT-60SA tokamak, the world’s newest and largest fusion reactor, fired up its plasma for the first time:

Japan’s JT-60SA fusion reactor. Image: NATIONAL INSTITUTES FOR QUANTUM SCIENCE AND TECHNOLOGY via Science

The ubiquitous Sam Altman spoke about another of his major projects, nuclear fusion startup Helion Energy. Here’s the transcript from his conversation with StrictlyVC back in January:

Q: Fusion…when do you think there will be a commercial plant actually producing electricity economically?

A: yeah I think by like 2028, pending you know good fortune with Regulators we could be plugging them into the grid. I think we'll do, you know, a really great demo well before that like hopefully pretty soon

(Microsoft signed a “commercially binding agreement” to purchase electricity from Helion from 2028. Game on).

🚀💥Rapid unscheduled disassembly (x2)

In the race to space, the world's spaceports set a new record with over 180 launches putting payloads into orbit by the end of November, exceeding the mark of 179 successful orbital launches from all of 2022.

SpaceX’s massive Starship was finally cleared for its second test launch by the FAA after, er, flying concrete issues with its first test flight. SpaceX promptly launched from Starbase in Texas…this time all 33 Raptor engines on the Super Heavy Booster started up successfully and, for the first time, completed a full-duration burn during ascent, but the rocket still exploded (“rapid unscheduled disassembly”) soon after stage separation. No flying concrete this time, thankfully...

Amazing visuals:

🌕The new Moonrush

2023 saw a new “Moonrush” as US / China strategic competition drove investment in the race to establish a presence on the Moon, with both public and private sector investment increasing:

NASA’s Artemis program Almost exactly 50 years since Apollo 17 last went to the Moon, NASA’s Artemis I successfully completed its mission in December 2022 to enter lunar orbit and return. The Artemis program’s official timeline is to complete the Artemis III crewed lunar landing in 2025, operationalise the Lunar Gateway (a waypoint space station in lunar orbit) in 2026 and commence annual crewed Moon landing missions from 2027 onwards. At the end of 2022 NASA Orion Programme leader Howard Hu said that NASA expects humans to live on the Moon this decade.

China’s Chang’e programme After successfully landing and returning a probe in 2020, Phase IV, now underway, aims to develop an autonomous lunar research station near the Moon's south pole, with the next mission launch due in 2025.

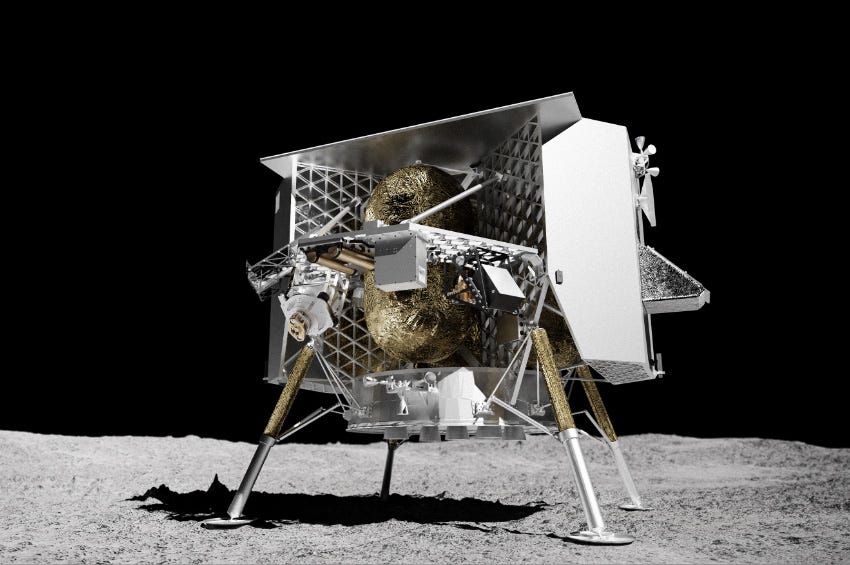

Three private space exploration companies raced to land robotic craft on the Moon in 2023: Japanese iSpace HAKUTO-R Mission 1 (crashed attempting to land), Intuitive Machines Nova-C (now launching in Jan 2024) and Astrobotic Peregrine lander (now launching Christmas Eve).

SpaceX are also in the planning stages of DearMoon, the first commercial space tourism launch in the giant Starship rocket, taking 8 passengers around the Moon and back. They also recently teased a Moon Base staffed by “Hundreds or Thousands”. (But crewed space missions are risky and expensive - robots are increasingly able to perform manual dexterity tasks at or beyond human level, 24/7. The economics of mass colonisation likely won’t add up for decades, if ever…)

The bigger question yet to be answered: who actually owns the Moon?

“The Outer Space Treaty of 1967, space law’s foundational text, is showing its age. It dates back to the era when only governments had access to space. And it states that no claims of sovereignty can be made, on the Moon or elsewhere. Efforts to update the treaty to establish rules around resource extraction have run into the lunar regolith. America has refused to sign the Moon Agreement, adopted by 18 countries in 1984, whereas China and Russia have rejected America’s latest proposal, the Artemis accords of 2020.

The debate over who owns the Moon has been the subject of speculation since long before the space age…As the trio of landers now hoping to reach the lunar surface illustrate, cheap rockets and new technology mean that the previously fantastical question of the Moon’s ownership is about to get very real. These craft are the first representatives of a planned flotilla of lunar vehicles, both crewed and uncrewed, publicly and privately funded, that herald a new Moon rush. That brings possibilities, but it also raises tricky questions about the trajectory of humanity’s exploration and exploitation of space.” — The Economist

⏳Longevity

Rejuvenation athletics

Bryan Johnson completed over 2 years of his full-on open-source Blueprint protocol of diet, high intensity exercise, sleep and supplements to reverse his age. The results are amazing and he has gone from “obscure tech billionaire” to meme-dropping internet celebrity over the period. He plays a very funny, very effective social media game.

In a successful gamification angle, he now styles himself as a “rejuvenation athlete” and established the “Rejuvenation Olympics”:

Johnson’s own health results from following Blueprint relentlessly have been quite spectacular, even if he hasn’t actually “reversed” his age so far:

🧬Reboot me

High-profile longevity researcher David Sinclair (I reviewed his book Lifespan in the very first Memia newsletter, Memia 2020.012) is one author among many in a new research paper: Loss of epigenetic information as a cause of mammalian aging, which validates Sinclair’s long-proposed Information theory of aging:

Delighted to share with you our latest paper. Here, we test the hypothesis that aging may be driven by DNA damage-induced changes to the epigenome. Might be due to a glitch in the software of the body that causes it to malfunction, which can be fixed with a reboot?👇 1/

Could we now find a reverse pathway which “reprograms” our age backwards?

🧫Biotech

Too many advances to cover in depth, just two from this year:

🔬Immaculate Conception is a US startup focused on turning stem cells into human eggs.

🧬Designing DNA with AI AI has designed bacteria-killing proteins from scratch – and they work. Elliot Hershberg explores a recent preprint paper outlining potential strategies to apply generative AI to design DNA:

“What is the take home message? This study is a compelling example of the immense promise at the intersection of AI and biology. It demonstrates where ML can have the highest impact: as a powerful tool to guide empirical engineering. Complex regulatory DNA sequences were designed purely in the world of bits. When they were manifested in the world of atoms, they behaved as expected.

Let’s extrapolate for a second. What if we developed a model that could not only design cell-type specific expression, but could design sequences that would drive gene expression in response to signals from the cellular environment. What if we put some of these enhancers into an engineered cell therapy that detected disease and expressed antibodies or mRNA therapies and released them to treat the condition?

I, for one, am excited for our sci-fi future.”

🍲Foodtech

Exponential biotech, in particular precision fermentation, is going to fundamentally change the way dairy products are produced. With the environmental impacts of peak cow finally starting to sheet home, I used AI to imagine a future of dairy production…without animals:

Who will be the “ASML of fermentation-based dairy production”?

DALL-E 3 imagined it thus:

“A futuristic pastoral landscape, featuring genetically-engineered, bioluminescent grass covering rolling hills. The scene is enhanced by the integration of solar panels and wind turbines with the natural environment, under a radiant blue sky with wispy clouds. In the distance, modern structures with sleek, curved designs reflect the sunlight, adding to the vision of a harmonious blend of technology and nature.”

⚛️Quantum technologies

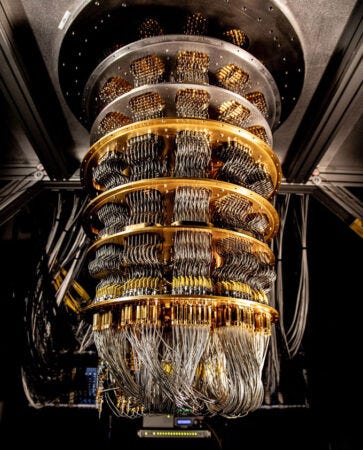

Finally… the weird and wonderful world of quantum mechanics is starting to yield R&D results and gain investment. China has announced over US$15 billion of public quantum technology (QT) funding to date, more than public investment from the EU (US$8 billion) and US (US$3.7 billion) combined. However, private investment in QT ramped up significantly in the US, with a McKinsey report identifying US$2.35 billion of investment into quantum technology start-ups in 2022.
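For anyone who wants to sanity-check the "more than the EU and US combined" claim, here is a minimal Python sketch using only the three public funding figures quoted above. The variable names are my own, and the China figure is treated as a floor, since the announcement was "over" US$15 billion.

```python
# Public quantum-technology funding quoted above, in US$ billions.
# Assumption: China's "over US$15 billion" is taken as exactly 15.0 (a lower bound).
public_funding_bn = {"China": 15.0, "EU": 8.0, "US": 3.7}

# Sum the EU and US figures and compare against China's.
eu_plus_us = public_funding_bn["EU"] + public_funding_bn["US"]
china_lead = public_funding_bn["China"] - eu_plus_us

print(f"EU + US combined: US${eu_plus_us:.1f} billion")
print(f"China leads by at least: US${china_lead:.1f} billion")
```

On these numbers, the EU and US together come to US$11.7 billion, so China's announced public funding is at least US$3.3 billion ahead.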

Quantum technologies (QT) break down into three main classes: computing (QC), communications (QComms), and sensing (QS):

Quantum computing (QC) promises to solve complex mathematical problems orders of magnitude faster than the most powerful silicon-based supercomputers currently in existence. Major milestones were achieved in 2023:

In June, a Chinese team announced Jiuzhang, a photonic QC which it claimed can process some AI-related tasks 180 million times faster than a classical computer.

After claiming to have reached "quantum supremacy" in 2019, researchers from Google’s Quantum AI lab published in July that the latest 70-qubit (quantum bit) iteration of their Sycamore QC was able to perform certain calculations in just a few seconds which would otherwise require the fastest classical supercomputer approximately 47 years to accomplish.