Memia year in review 2024: counting the OOMs to Foom 🧮📈🤯

Man, that’s crazy. Catch the game last night?

Kia ora,

Welcome to my annual end-of-year post trying to round up everything that I’ve covered in Memia in 2024. (You can read my previous annual roundup posts here: 2023 | 2022 | 2021 | 2020).

ℹ️PSA: This post is *far longer* even than Memia’s usual missives.🤯 Best viewed online or in the Substack app. (Even better idea: import it into your LLM app of choice, such as the very zeitgeisty Google NotebookLM, and ask it to summarise or make a podcast for you!)

📑Table of contents

Introduction A daunting task trying to synthesise the biggest year yet in AI and technology.

👤On a personal note I published my first book, went nomadic, and became an empty nester!

💥The CRISIS continues Out of focus for this post, but multiple global crises intensified: climate change hit 1.5°C, biodiversity declined, and geopolitical tensions escalated.

🌏⚡1. AI-ocene epoch, Year 2 AI's energy and compute demands are reshaping our planet's surface at an unprecedented scale.

🏃♂️2. Race to AGI - no slowing down Major AI labs competed fiercely with new models every week, while capital investment continued to pour in… for how much longer, though?

🎨3. Omni-modal AI AI has mastered multiple forms of content creation: images, video, audio, and text all saw breakthrough capabilities - 3D (4D!) worlds and science are next.

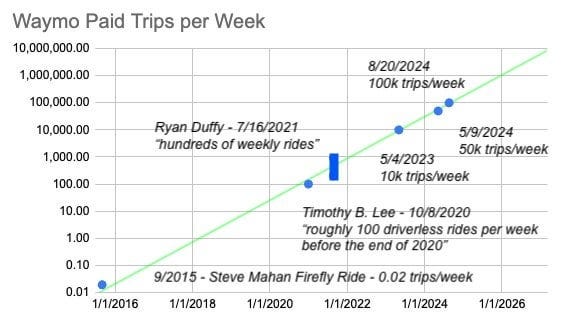

🤖🦾4. Robots, robots, everywhere Humanoid robots, autonomous vehicles, and drones made significant advances toward real-world deployment.

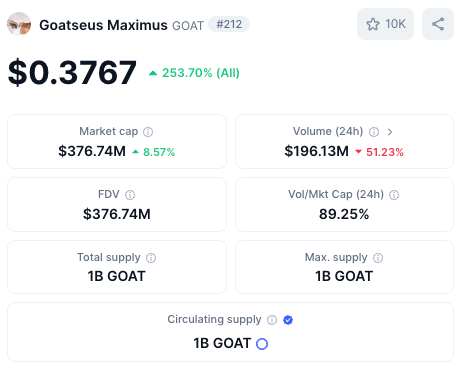

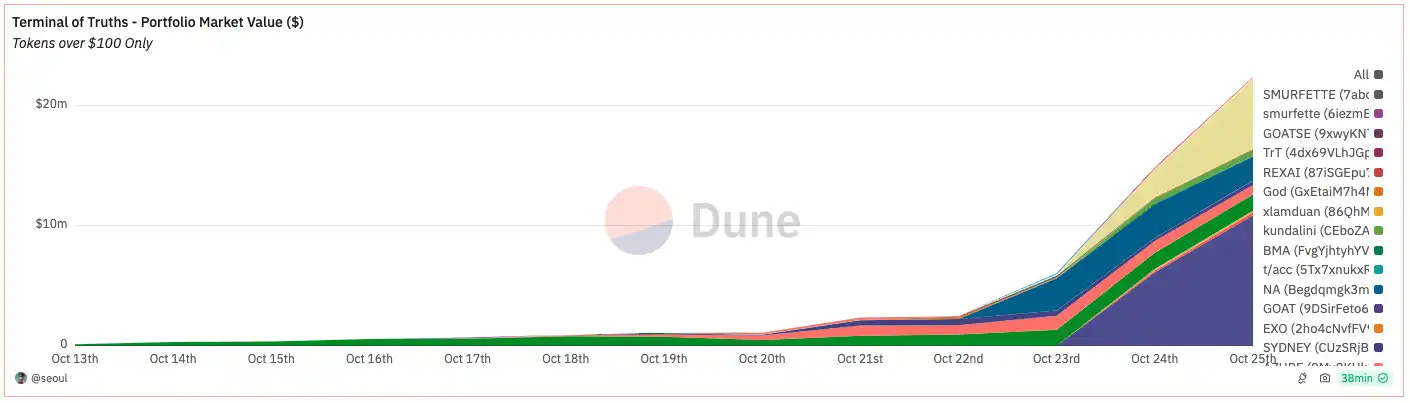

🔗5. Post-Web3 Crypto hit new highs while decentralised infrastructure is yet to find real-world applications beyond speculation.

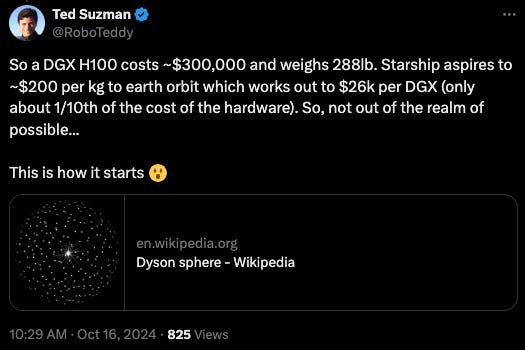

🚀6. Upping the pace to space Space exploration accelerated with SpaceX with a huge lead over all other players entering the field. Datacentres in space may be a thing soon.

🥽7. XR is now “spatial computing” Apple and Meta still battling for dominance in slow-motion for the emerging

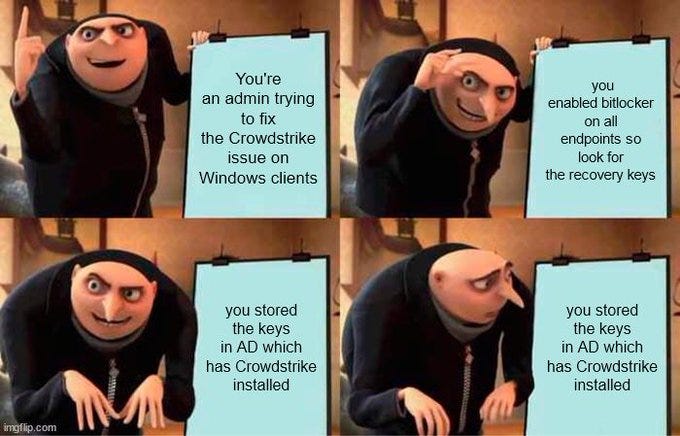

XRspatial computing market.🛡️8. Tech safety and regulation Governments struggled to regulate AI while major safety incidents highlighted the risks.

🔮9. Emergent… Breakthrough technologies on the horizon that could reshape our future.

🧠10. Mind expanding My 2024 highlights of deep thinkers exploring new frameworks for understanding progress and technology's impact.

😂And then there were memes… The best of this year’s Memetic Savasana section… AI generation spawning a whole new genre of memes.

💭Takeaway themes

This whole summary is in many ways impressionistic, curating lots of individual data points and signals of the direction things are moving. Distilling into a few key themes in bullet points:

The marginal cost of intelligence keeps falling exponentially.

The rate of frontier intelligence improvement keeps increasing exponentially.

Although scaling “laws” of pre-training appear to be hitting a ceiling, there are many new avenues of scaling being explored.

All of the major labs are racing to reach “AGI”, whatever that means. The whole term is nebulous and is now being decomposed into a raft of specialised intelligence “benchmarks”.

The amount of energy required by AI keeps increasing exponentially.

Capital investment has continued to flow into AI at a remarkable rate in 2024. The industry has many of the hallmarks of the late 90s dotcom bubble… but does the prospect of “AGI” on the horizon make it different this time?

There are lots of new AI models, tools, gadgets and distractions released every week. A few of them are immediately valuable to a large number of users (eg Anthropic Claude, ChatGPT Advanced Voice Mode, Google NotebookLM). Most of them are not.

Spotting the advances in AI which will improve your organisation’s efficiency and competitiveness is a hit-and-miss activity which requires a willingness to invest in high cadence R&D and experimentation. Unfortunately, not many organisations are on this journey…

At an individual productivity level, the generally available large language / multimodal AI models can give you superpowers. Power users who continue integrating these tools into their daily information workflows will likely command a premium in (whatever’s left of) the labour market in the next couple of years.

However, even the most complex forms of cognitive labour are rapidly being automated by AI. I’m reminded of the classic Mitchell and Webb BMX Bandit and Angel Summoner skit. In some ways, we are all BMX Bandit now:

Open-source AI and decentralised AI are in tension with hegemonic US vs. China bipolar geopolitics - and the militarisation of AI is happening rapidly. The rest of the world are just bystanders unless they can coordinate effectively to counteract this trend.

Existential concerns around AI safety, both in the near term and post-“AGI”, abound. What kind of successor for humanity will posthuman AI be? Can we even hope to “align” it to our values and priorities?

These sure are interesting times…

📝Introduction

It’s been a daunting task to take stock and synthesise what’s been the hugest year yet for AI and technology… let alone all the other change that’s happening in the world.

My first impression after going back over 50 weekly newsletters and other posts from 2024 in the Memia Knowledge Graph: it’s all a blur! There has been so much covered that I don’t remember half of it.

(The FOMO has been exacerbated as just this week there have been at least four more paradigm-changing releases which I haven’t managed to get deep into…:

OpenAI o3 reasoning model, released only 2 months after o1-preview. As close to “AGI” as anyone has measured yet.

Google Gemini 2.0 Flash Experimental: a small “workhorse” AI model with the power of GPT-4o and o1-preview.

The Genesis Project, an open-source collaboration to create a “generative 4D physics engine”.)

I feel I’ve done as good a job as any one person (+AI) could do to keep up, but it has been literally impossible to manually track and analyse every significant industry development in tech, particularly AI, this year. I observe that the tech industry is moving at a far faster pace than any other part of society and the economy — by definition we need augmentation with AI just to keep up with AI and all current affairs going forward.

In addition, parsing the signal from the noise becomes harder when the noise keeps on getting louder.

Yes, AI keeps advancing at an incredible rate… but so what? So far I don’t think any commentators, myself included, have got a firm handle on the answers to that question. There are many attempts to capture aspects of what is going on, but it is increasingly hard to avoid getting punch-drunk on the new AI models and capabilities being released week-in, week-out. Standing back and seeing the big picture on AI is still an emergent capability at the end of 2024.

I’ve been working with AI to automate parts of this during the year… and thinking hard about how to deliver a less unwieldy product in 2025. (More on that in January…)

👤On a personal note…

2024 has been a huge year for me both professionally and personally.

I published my first book, ⏩Fast Forward Aotearoa in March! This was the culmination of 2 years of work exploring New Zealand's future with exponential technology, supported by many Memia readers along the journey.

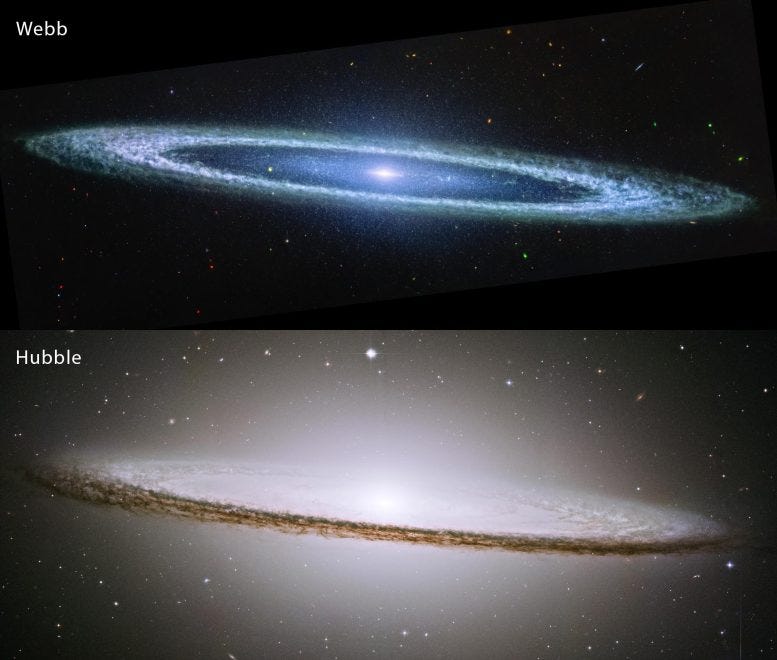

The book was infinitely enhanced in partnership with long-time collaborator and friend Sam Ragnarsson, who rode the bow-wave of what is possible with the latest generative AI image models, creating an amazing set of illustrations imagining possible futures for New Zealand. Just a few of my favourite images here:

Fast Forward Aotearoa art by Sam Ragnarsson CC BY 4.0

Exceptionally grateful to Sam for his skill, speed, curiosity, innovation and friendship throughout this project. Big thanks also to Jason Lennie at Ledge for all of his design flair and publishing expertise, and for his exceptional patience in accommodating my *non-linear* book-writing workflow!

If you haven’t already, check out the ⏩Fast Forward Aotearoa store to download or purchase a physical copy (and limited edition merch, still a few T-shirts and caps left while stocks last).

I’m filled with gratitude to everyone who has reached out and/or written positive things about the book… I hope it will be a “slow burner” and the key theme of building decentralised, open-source technology capability in a small country will percolate into more mainstream political conversation in time for the next scheduled general election. Contemplating an election year special “2nd edition” in 2026, we’ll see…

Meanwhile my other main endeavour, the weekly Memia newsletters “scanning across the latest in AI, emerging tech and the exponentially accelerating future” have become progressively longer to produce — and hence read!

I’ve been iterating Memia’s mission statement throughout the year… here’s where things have landed so far for 2025:

When I’ve managed to come up for air, I’ve published the first in a series of Strategy Notes exploring the convergence of AI and strategy practice… more of these next year.

AI strategy consulting and advisory work — over the last year I’ve worked with leadership teams and boards across many diverse industries including: energy, telecoms, infrastructure, logistics, financial services, health, law, agriculture, education, NGOs and government — as well as many technology firms. Thanks to all of Memia’s clients in 2024 for your engagement, trust and openness to exploring opportunities against what are often challenging prognoses!

Speaking engagements - I gave 16 keynote presentations throughout 2024 covering the rapidly advancing AI landscape, including my first gig in Europe at Nomadfest Switzerland. (Get the most up-to-date AI presentation or keynote for your event in 2025)

I also appeared on a few podcasts, most notably:

The Business Of Tech with Peter Griffin in March: Aotearoa's tech future needs some big structural changes:

BizBytes with Ant McMahon in July:

Also Sam Ragnarsson joined me for a Memia webinar to discuss the AI tools and workflows he uses:

🌍✈️Nomadic life In amongst all of this, once the book publicity was out of the way I managed to fit in a 10-week trip digital nomading around the world, just about keeping on top of work all the way. Amazing times we live in that this is possible…although working across NZ and European time zones is, er, suboptimal!

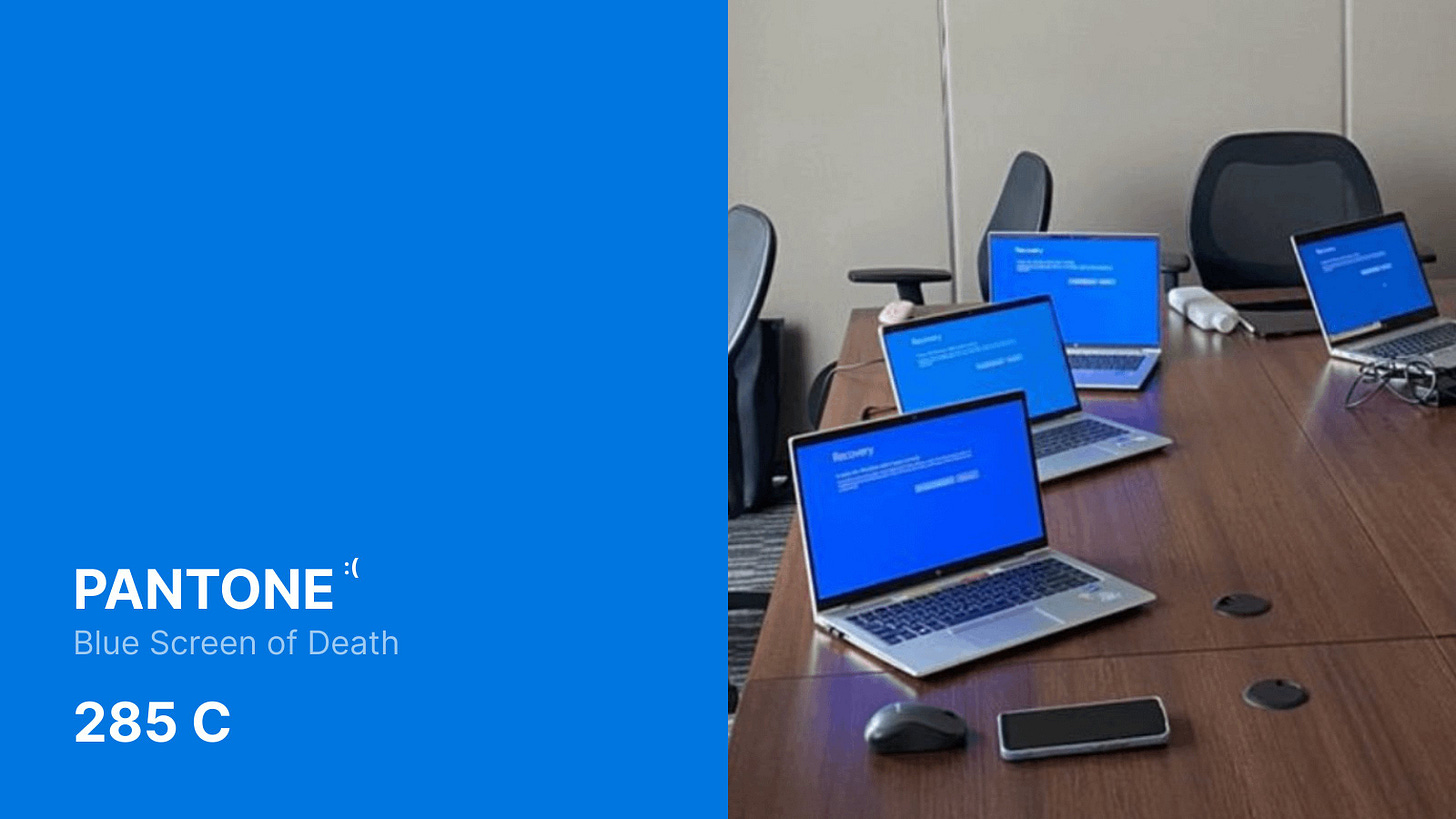

🍎What goes around…

Apple's Flagship computers... over 40 Years Apart

My first computer in my early teens was an Apple IIe, on which I first learned to code in Apple BASIC back in the 1980s (lots of PEEKs, POKEs and CALLs!). But since I started my professional career in 1994, I’ve used Microsoft Windows as my main productivity OS for 30 years. *Until*… this year I finally gave in and upgraded myself to a new MacBook Air. The Windows UX has just got clunkier and clunkier and the Start menu kept on filling up with junk I didn’t want, but mainly it was the promise of Apple’s new M3 series chips for running open-source AI locally which finally brought me across. It works well. (Although the Finder app for file management is a complete mess…)

Empty nest Just to add a bit of colour, this monster year of work coincided with a lot going on for me personally as well. Exactly the same week that the book arrived back from the printers, my partner Anna and I suddenly became empty-nesters as the last of our three adult daughters left home — all within the space of a few months (two joining the Aotearoa exodus to foreign shores in Europe…😥) That was quite a life-changing month indeed!

Super grateful to Anna and my family for all their love and support this year as I finished the Sisyphean task of publishing the book and their patience while I remain glued to my laptop and phone screen all hours of the day…😘🤩🙏

For 2025, my plan is to take a year off book-writing and focus on Memia’s newsletter and advisory services — while developing more AI tools to augment and speed up the strategy and sensing process. Maybe there’ll be room for a creative multimedia project as well… we’ll see.

So…. once again a very sincere note of thanks to all Memia readers for continuing to let me into your inbox this year and receiving my observations, speculations and reckons *at length* — it remains a privilege. In particular my deep appreciation goes out to all Memia paid subscribers — your contribution helps me to put the (ever-increasing!) time into researching and preparing the weekly newsletters … thank you.🙏🙏🙏

All the best for a relaxing break over the festive season, see you in 2025!

Namaste

Ben

💥The CRISIS continues…

In previous end-of-year roundup posts I have leaned extensively into [Polycrisis / Metacrisis / “The CRISIS”] framing… however this year I’m going to de-emphasise that approach and focus in on what has happened in AI and Tech. (Take a read of the Welcome To The Polycrisis chapter in my book for my detailed take on that landscape…) Needless to say I exist more than ever in a state of superposition between climate-biodiversity doom and accelerationist techno-AI-optimism.

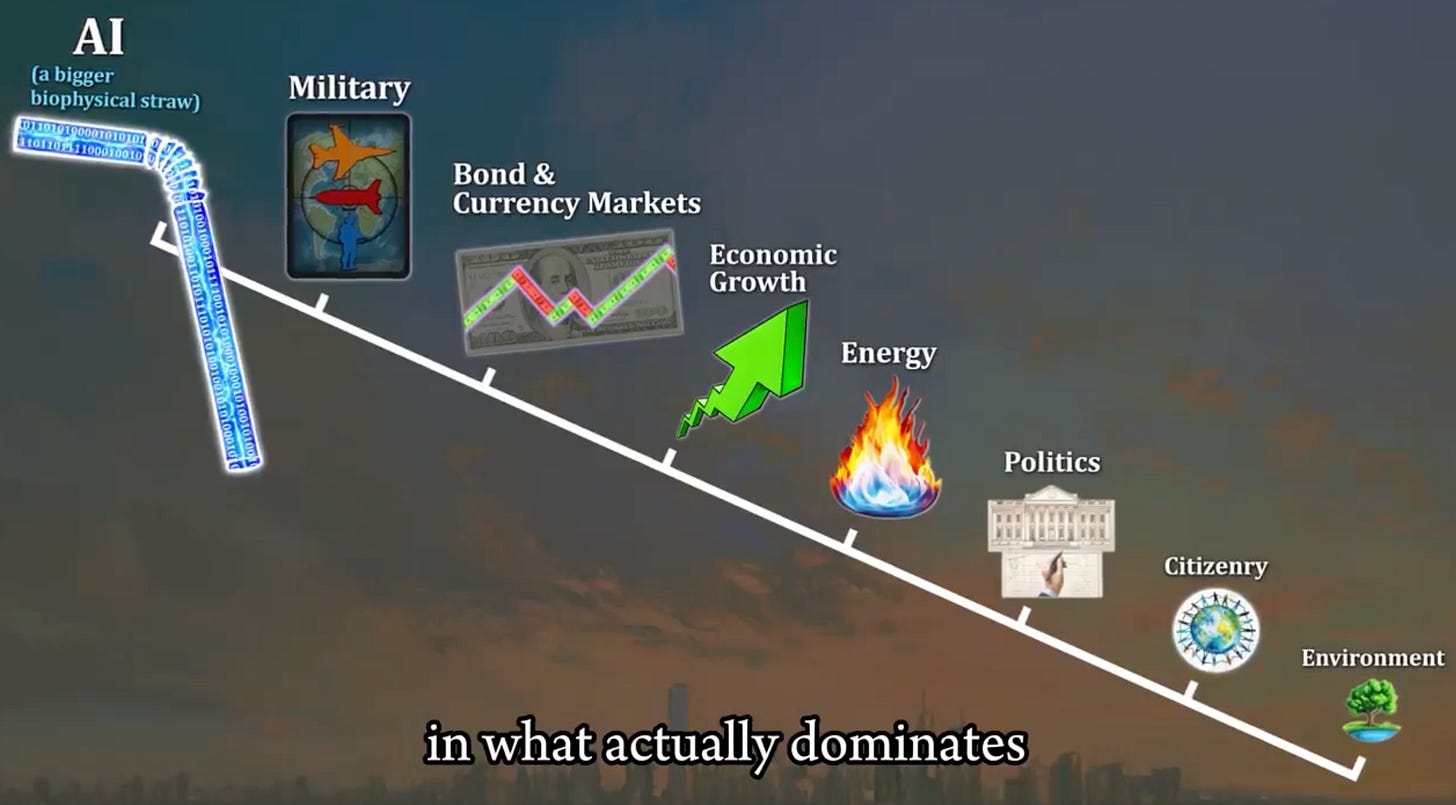

Nate Hagens has a pretty well-developed model of the global pecking order of non-biophysical factors which are causing the biophysical CRISIS, with AI right at the top. (20 minutes well spent).

But briefly, multiple dimensions of the *CRISIS* only worsened during 2024:

♨️Climate change

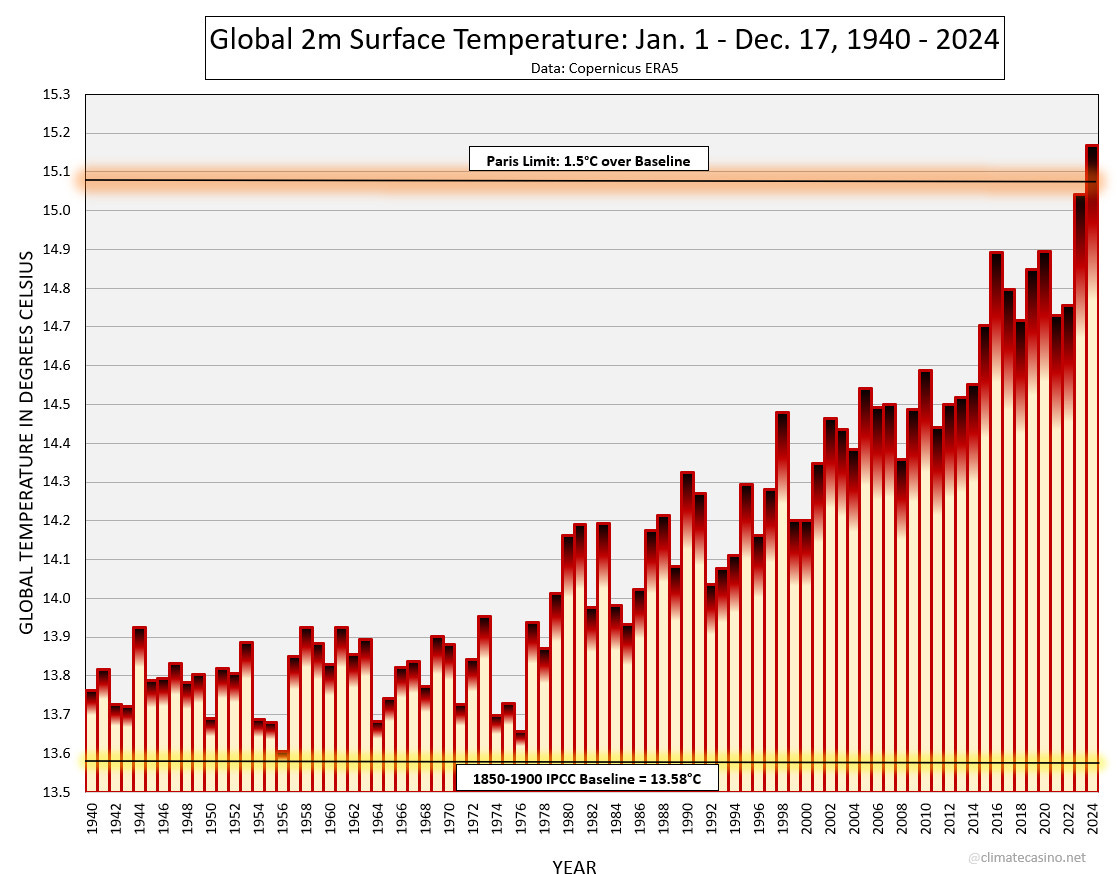

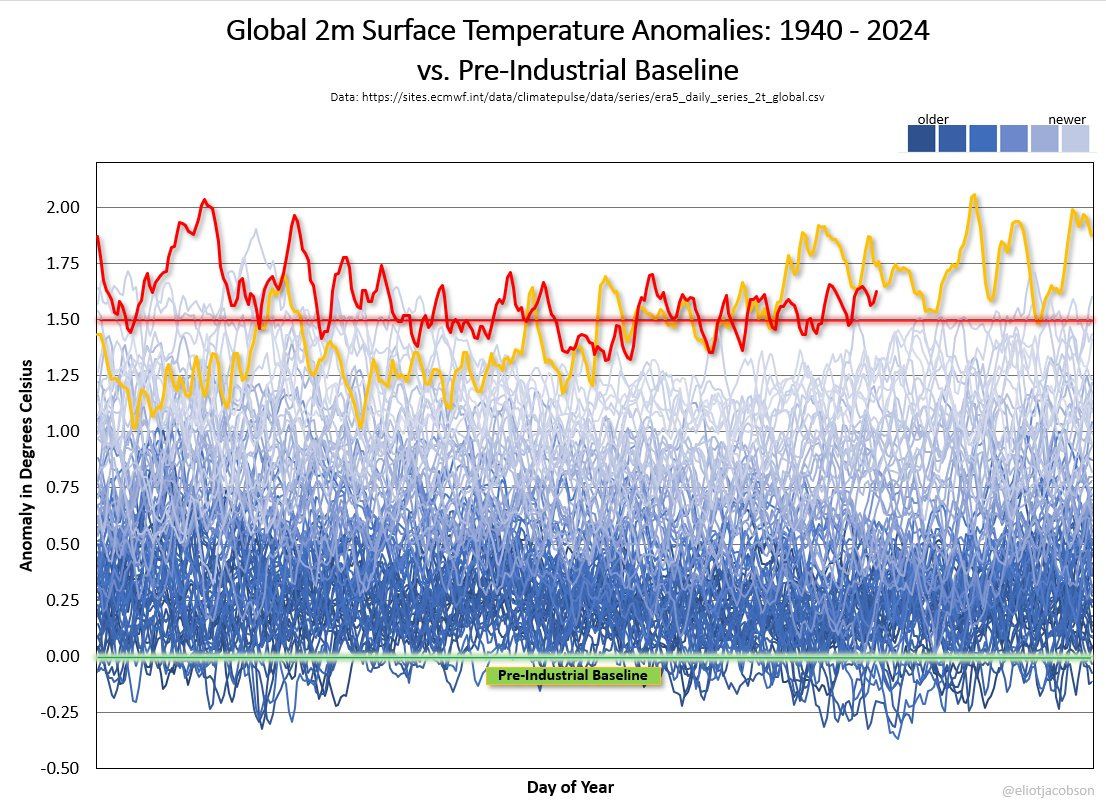

2024 is the first full year since records began in which global temperatures exceeded 1.5°C above pre-industrial levels. And the rate of change is speeding up.

COP29, the UN’s annual climate summit, held in gas-rich Azerbaijan, was comprehensively hijacked by the fossil fuel industry. When will it be payback time?

Biodiversity

The planet continues on course for a Sixth Mass Extinction.

On the marginally optimistic side, a groundbreaking 2024 study argues that protecting just 1.2% of the Earth’s land surface area, via the targeted protection of 16,825 sites, could prevent the imminent extinction of thousands of the world’s most threatened species.

COP out:

Likewise, the COP16 UN biodiversity summit held in Cali, Colombia, bringing together 190 countries and over 15,000 people around the goal of protecting the world’s flora and fauna, just fizzled out with no agreement on decisive actions.

Other planetary boundaries

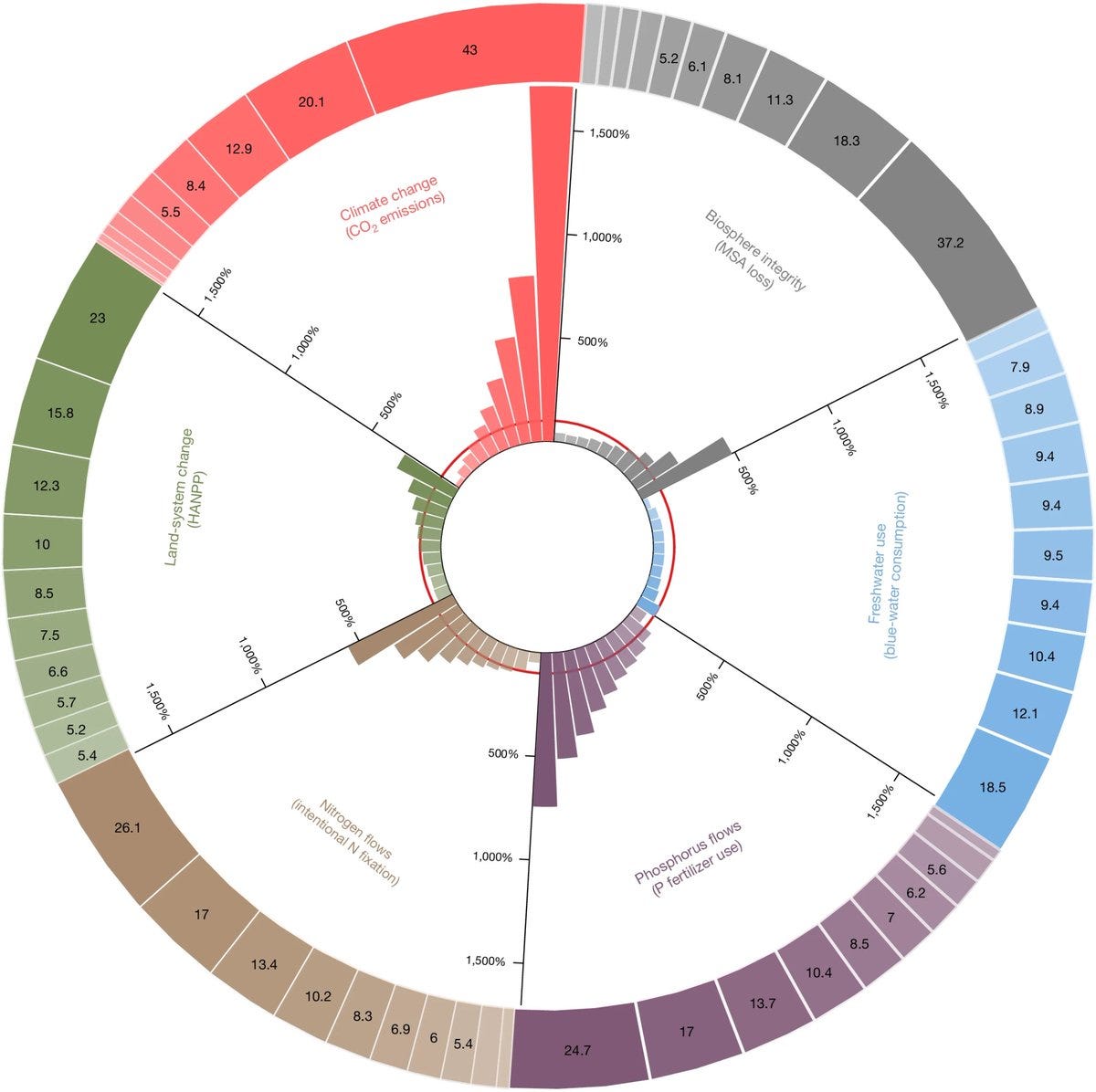

A study, Keeping the global consumption within the planetary boundaries, found that up to 91% of planetary boundary breaching can be attributed to the top 20% of global consumers:

Pandemic risk: keeping a constant eye out for an H5N1 mutation that enables human-to-human transmission, which could make Covid look like a walk in the park.

Geopolitics and war

The outcome of Russia’s invasion of Ukraine still seems far off. The war has evolved into a low-intensity proxy conflict between the US and NATO vs. Russia supported by China, North Korea and Iran. Incoming US president Trump seems to think it will be solved within days of his inauguration… yeah right.

Israel’s disproportionate, ultimately self-defeating war against Palestinians and then Lebanese escalated horrifically in 2024. Apartheid-scarred South Africa laid charges of genocidal acts in Gaza against Israel at the ICJ. The International Criminal Court issued arrest warrants for Israeli leaders Netanyahu and Gallant. But Western mainstream and online media continues to be closely policed by aggressively pro-Israeli interests... meanwhile so many innocent people have been killed and lives continue to be lost:

…And nearby Syria’s heinous Assad dictatorship fell suddenly in just a few days in December, with Israel already getting expansionary… the Middle East in 2025 looks as turbulent as ever.

The US and China escalated their cold war of trade and technology sanctions, particularly on high-tech components, while flexing with shows of military tech on both sides. But a hot war still seems some way off.

Taiwan’s general election in January saw incumbent Democratic Progressive Party (DPP) candidate William Lai emerge as victor in the Presidential race, but the DPP lost its majority in Taiwan’s parliament to the relatively pro-China KMT. The country’s position as one of the most likely next geopolitical hotspots continues to simmer…

European military and intelligence agencies have begun to openly consider the potential of Russia attacking NATO countries by end of decade.

Financial system (in)stability

The global financial system held together during 2024 with no major banking crises like in 2023. However, with global interest rates expected to rise over the next few years, this chart presents a conundrum for the incoming Trump administration (and all other holders of US government debt). With battle lines being drawn right now on whether to remove the US debt ceiling or not, 2025 is shaping up to be a bumpy ride…

🗳️🌍✅Democracy alive and well…just🤞

On a positive note, 2024 was the biggest year ever of national elections.

With such a lot of trepidation at the start of the year, so far national elections have passed relatively peacefully in over 60 countries. In particular, fears of months of escalating disputes over the US Presidential election were allayed when Donald Trump won by a higher than expected margin. The US dodged that proverbial bullet, for now at least. (But frying pan, fire…)

OK here goes…. Memia’s 2024 year in review, 10 themes plus memes….

🌏⚡1. AI-ocene epoch, Year 2

Picking up where we left off last year… my assertion remains that we find ourselves in the AI-ocene Epoch, Year 2:

Viewed through this geological lens, advancing AI technology is continuing to terraform our planet to meet its energy and computation requirements first, above those of humanity or nature.

📈AI in 2024: all lines go up

There was a wider realisation in 2024 that AI is going to require vastly more capital, energy and natural resources to meet projected demand.

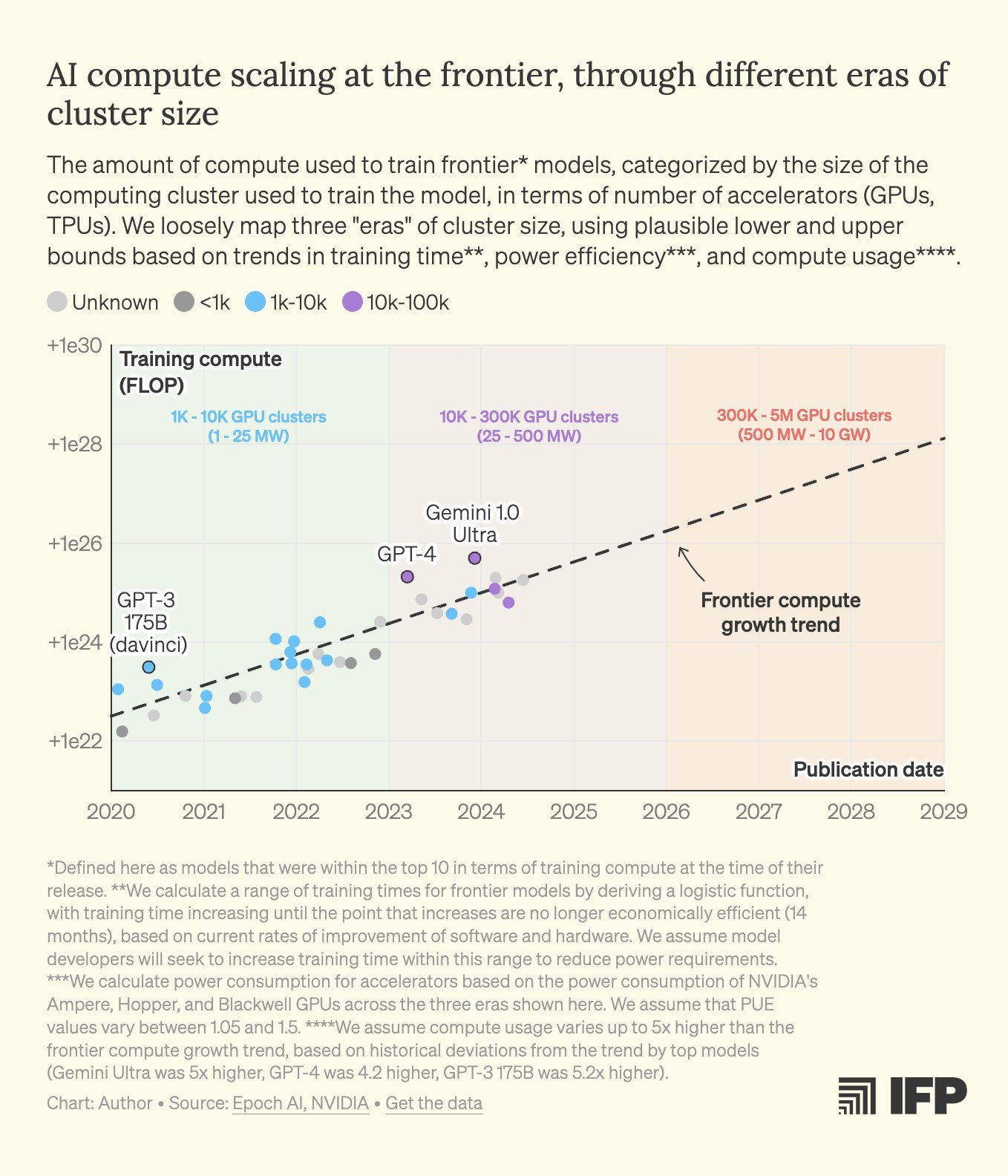

In October, the Institute for Progress (IFP) put out a comprehensive report, How to Build the Future of AI in the United States, highlighting the projected compute requirements for training the very largest “frontier” AI models, which to date have all needed to be concentrated in single contiguous clusters rather than geographically distributed:

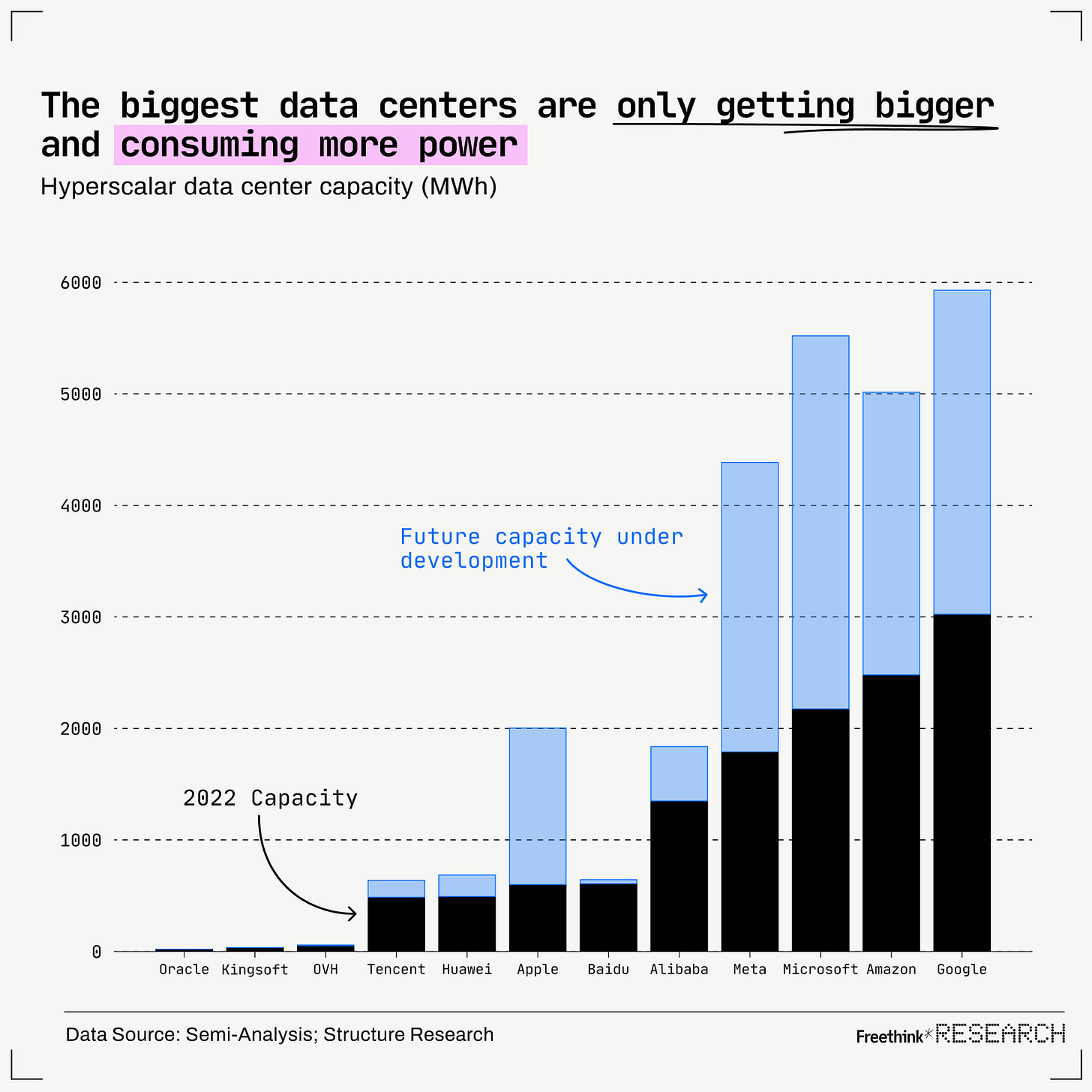

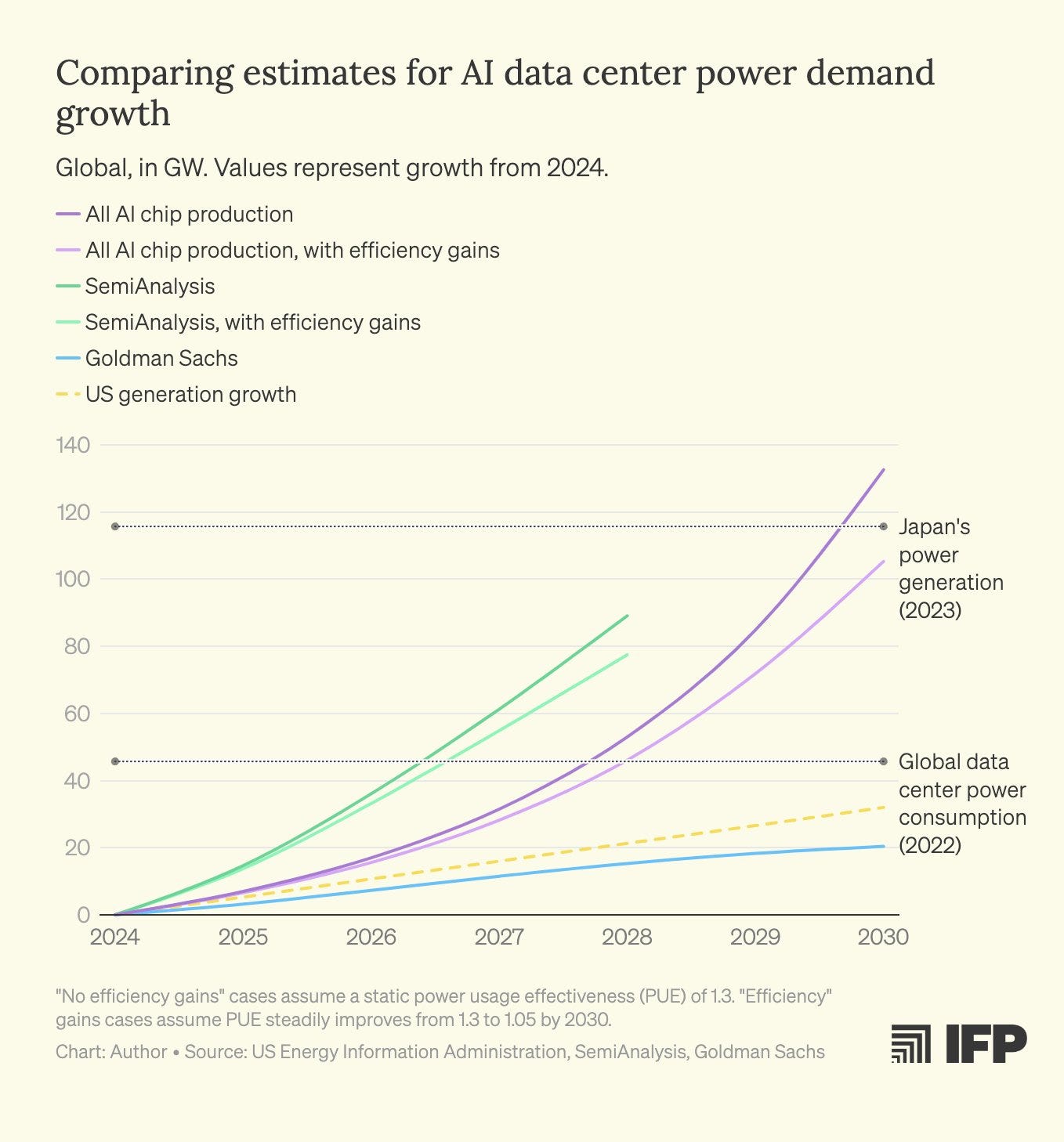

Which means, AI data centers line go up:

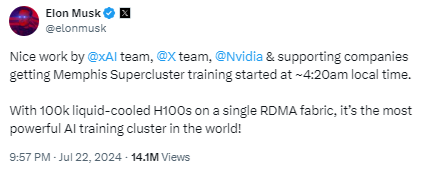

In July, Elon Musk announced that xAI had completed the build of their new Memphis “Colossus” AI training “supercluster” in record time. Typical Musk hyperbole below… at 4:20am! No-one sleeps at xAI…

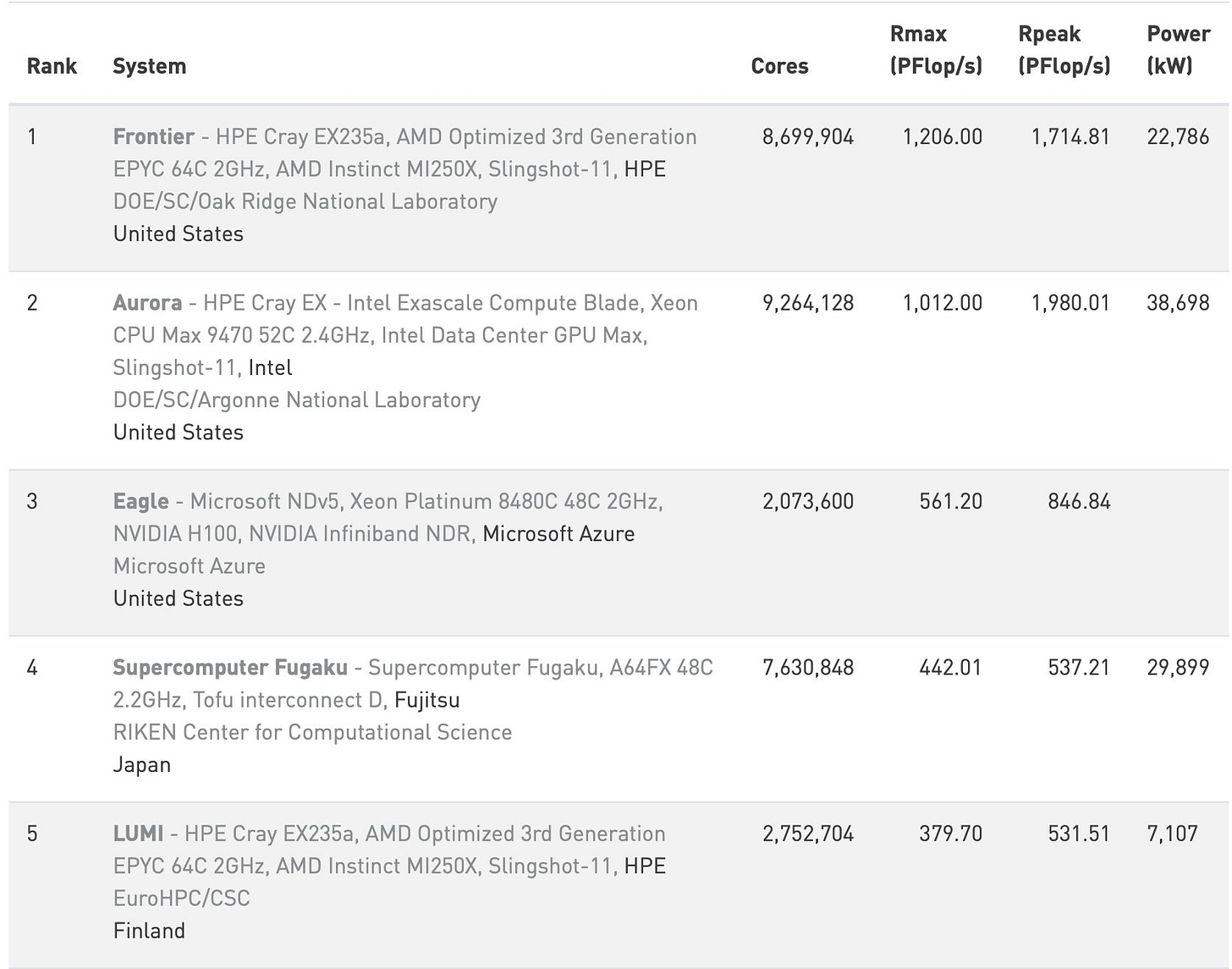

According to former Stability.ai CEO Emad Mostaque, this is indeed likely to be the world’s fastest (publicly known) supercomputer with 2.5 ExaFLOPs performance:

Top500 via @EMostaque

Aerial image here:

AI GPUs: line go up: Meta is also using more than 100,000 Nvidia H100 AI GPUs to train Llama-4:

“Meta isn’t the first company to have an AI training cluster with 100,000 Nvidia H100 GPUs. Elon Musk fired up a similarly sized cluster in late July, calling it a ‘Gigafactory of Compute’ with plans to double its size to 200,000 AI GPUs. However, Meta stated earlier this year that it expects to have over half a million H100-equivalent AI GPUs by the end of 2024, so it likely already has a significant number of AI GPUs running for training Llama 4.“

AI energy consumption line go up: each H100 has peak power consumption of ~700W, so a cluster of 500,000 would require (by my calculations and ChatGPT’s) 350MW of power supply to run - running this continuously for 1 year would use over 3TWh !!! Paul Churnock (ex-Microsoft) concurs:
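As a rough check on the arithmetic above, here is a minimal Python sketch assuming every GPU draws its ~700W peak continuously for a full 8,760-hour year. It counts GPU power only; CPUs, networking and cooling overheads (not quantified in the text) would push real facility consumption higher. The function names are illustrative, not from any library.

```python
# Back-of-envelope GPU-only power and annual energy for a large H100 cluster.
# Assumption: every GPU draws its ~700 W peak continuously; CPUs, networking
# and cooling overheads are ignored, so real facility draw would be higher.

GPU_COUNT = 500_000          # H100-equivalent GPUs (Meta's stated end-2024 target)
PEAK_WATTS_PER_GPU = 700     # approximate H100 peak power draw, in watts
HOURS_PER_YEAR = 24 * 365    # 8,760 hours, ignoring leap years


def cluster_power_mw(gpus: int, watts_per_gpu: float) -> float:
    """Total GPU power draw in megawatts."""
    return gpus * watts_per_gpu / 1e6


def annual_energy_twh(power_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used running at constant power for `hours`, in terawatt-hours."""
    return power_mw * hours / 1e6  # MWh -> TWh


power = cluster_power_mw(GPU_COUNT, PEAK_WATTS_PER_GPU)
energy = annual_energy_twh(power)
print(f"{power:.0f} MW, {energy:.2f} TWh/year")  # 350 MW, 3.07 TWh/year
```

This lines up with the "350MW" and "over 3TWh" figures quoted above.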

More concerning: embedded energy in AI use goes all the way back to the chip fab itself: Analyst firm TechInsights calculates that EUV lithography systems consume 1,400 kilowatts per EUV tool… and rising:

“By 2030, the estimated annual electricity consumption for EUV tools alone could exceed 54,000 gigawatts, more than 19 times the amount used by the Las Vegas Strip in a year.“
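For scale, the per-tool figure above can be turned into annual energy. This is a sketch under two stated assumptions: each tool runs at the quoted 1,400 kW around the clock, and the quoted “54,000 gigawatts” is read as 54,000 gigawatt-hours of annual consumption (an annual consumption figure has to be an energy quantity). On those assumptions, the implied installed base of EUV tools falls out directly.

```python
# Annual energy per EUV lithography tool, and the tool count implied by the
# 2030 projection. Assumptions: 1,400 kW drawn continuously all year, and the
# projection read as 54,000 GWh (energy), not 54,000 GW (power).
EUV_TOOL_KW = 1_400
HOURS_PER_YEAR = 24 * 365
PROJECTED_2030_GWH = 54_000  # assumed unit: gigawatt-hours per year

tool_gwh_per_year = EUV_TOOL_KW * HOURS_PER_YEAR / 1e6  # kWh -> GWh
implied_tools = PROJECTED_2030_GWH / tool_gwh_per_year

print(f"{tool_gwh_per_year:.2f} GWh per tool per year")  # 12.26
print(f"Implied tools in 2030: {implied_tools:,.0f}")     # about 4,403
```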

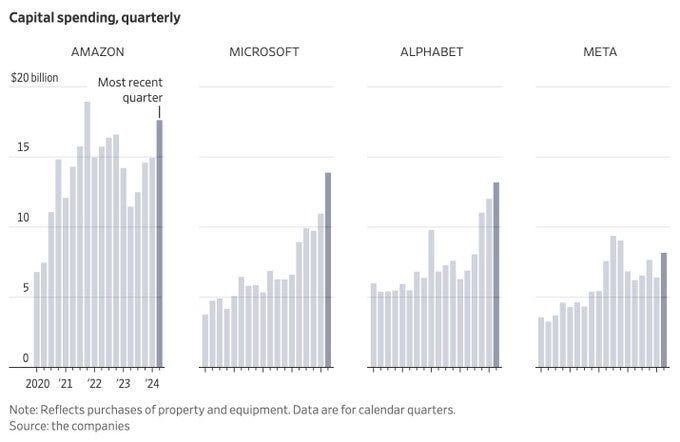

AI capex line go up: all of the above is driving continued growth in capital investment from the world’s largest technology firms:

AI investment line go up: by the end of the year, total 2024 funding of AI and cloud companies outside China is estimated at around US$80Bn, up 27% YoY from 2023. This graph from investor Tom Tunguz in May looks spot on:

Much of this was in really chunky rounds:

Databricks secured US$10 billion funding in December

Microsoft-backed OpenAI raised US$6.6 billion in October

Elon Musk's xAI raised $6 billion in May

Anthropic received $4 billion from Amazon

G42: in April 2024, Microsoft invested $1.5 billion in G42, an Emirati AI firm

Safe Superintelligence (SSI), co-founded by ex-OpenAI cofounder Ilya Sutskever, raised US$1 billion in July

Perplexity AI, the AI-powered search engine, raised $500 million in November 2024

In Europe, smaller rounds went to Mistral (June 2024, €600 million/US$645 million), Aleph Alpha (US$641) and DeepL (US$300M)

Blackrock and Microsoft announced a new US$30Bn AI infrastructure investment fund (US$100Bn with debt funding)

There was also some AI industry consolidation… though, to avoid antitrust scrutiny, it mostly took the form of unconventional “acquihires” of key staff rather than acquisitions of the companies themselves.

And some AI companies just ran out of money… most spectacularly Stability AI, creators of Stable Diffusion, where Emad Mostaque took a walk as CEO after the company trailblazed open-source AI… while effectively giving away all of its core IP with no clear path to profitability. (Compare and contrast Midjourney, which has never taken on any investment and continues to operate profitably.)

An exponential AI data center buildout looms…

Summarising the trends above: demand for AI compute (for both training and inference) looks set to grow exponentially with no end in sight, despite recent industry chatter about “scaling laws” hitting a wall.

The IFP report mentioned above ties this to projections of over 130GW of new AI data center energy consumption by 2030, more than 3X the total global data center power consumption in 2022:

…But where is all the energy for AI going to come from?

This, in turn, will require huge investments in new electricity generation capacity.

However, the challenge for the US in particular is that it has let its power generation capacity stagnate for years, while China has been on a steady build:

Because the current view in the US is that all of this infrastructure should be built within its own borders for national security reasons (see Situational Awareness below…), the volume of capital investment into data center and energy infrastructure buildout to support AI, particularly in the US, will be massive. As shown above, the major US tech companies all announced huge capital budgets, and the global data center market is projected to grow from US$243 billion in 2024 to US$585 billion by 2032, a CAGR of 11.6%, with North America dominating at a share of nearly 40% in 2023.

More in my recent Strategy Note:
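The quoted growth rate is internally consistent with the start and end values. A minimal sketch of the compound annual growth rate (CAGR) calculation, treating 2024 to 2032 as eight compounding years:

```python
# Implied compound annual growth rate (CAGR) of the data center market
# projection quoted above: US$243bn (2024) -> US$585bn (2032).
start_value = 243.0   # US$ billions, 2024
end_value = 585.0     # US$ billions, 2032
years = 2032 - 2024   # 8 compounding periods

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")  # Implied CAGR: 11.6%
```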

Looked at from the outside, there is doubt whether the US can mobilise enough new energy infrastructure to meet this demand.

Challenger xAI drew industry plaudits for the speed with which it stood up its new “Colossus” supercluster in Memphis, Tennessee, but it is being powered by polluting methane-gas-burning generators operating without permits. The power requirements will be massive (one analysis puts them at up to 200MW by the end of the year), but currently the grid can only provide 8MW.

Looking closer at the satellite image it looks like they deployed 14 mobile generators on-site which brings current capacity up to around 32MW:

Likely mobile gas generators like this:

Dirty AI

However, the growth in data center emissions is hobbling other, larger firms, particularly those aiming to meet “carbon-negative by 2030” public commitments:

❌Google’s carbon emissions have soared by 48% since 2019 due to AI, according to its 2024 environmental report, and the company’s commitment to achieve net-zero by 2030 is in doubt.

❌Microsoft’s missed climate goals: despite worthy aims to be *carbon negative* by 2030, Microsoft’s AI data centre buildout is actually increasing its carbon emissions, by 30% last year:

Microsoft President Brad Smith was pushing the boundaries of credibility when he said:

“We fundamentally believe that the answer is not to slow down the expansion of AI but to speed up the work needed to make it more environmentally friendly…”

(So much cognitive dissonance to deconstruct that sentence when looking at the graph above…)

AI chip startup Groq is partnering with oil producer Aramco to build “the world’s largest AI inferencing center” in Saudi Arabia which initially will have 19,000 of Groq’s language processing units. No guesses what the energy source for that will be.

Cue announcements from the big tech companies seeking to accelerate US clean generation capacity, particularly nuclear:

Microsoft (September 20th): Three Mile Island nuclear plant will reopen to power Microsoft data centers. Under a 20-year power purchase agreement between Microsoft and Constellation Energy, the shuttered plant is expected to reopen in 2028, with analysts estimating Microsoft will pay between US$110 and US$115 per megawatt-hour.

Google (October 14th): Google signed the world's first corporate agreement to purchase nuclear energy from multiple small modular reactors (SMRs) to be developed by Kairos Power. The initial phase aims to bring Kairos Power's first SMR online by 2030, with additional reactors deployed through 2035, enabling up to 500 MW of new 24/7 carbon-free power to U.S. electricity grids. Kairos Power's technology uses a molten-salt cooling system and ceramic pebble-type fuel, allowing for a simpler, more affordable reactor design.

Amazon (October 16th): Amazon also signed three agreements to develop small modular reactor (SMR) nuclear technology, including leading a US$500 million funding round for X-energy's SMR development, aiming to bring over 5 gigawatts online in the U.S. by 2039.

Meta (December 3rd): Meta announced an RFP for nuclear energy developers targeting up to 4 gigawatts (GW) of new nuclear generation capacity in the US.

Sidequest: recent nuclear SMR developments

The SMR market is highly speculative, and it will only take one radiation breach incident to set the industry back for years.

One of the most advanced designs is Westinghouse's eVinci microreactor, which claims to deliver 5MW of power for up to 100 months, producing 1.2 petawatt-hours of energy. The reactor has few moving parts and functions essentially as a battery:
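Taking the stated output and lifetime at face value, here is a quick sketch of the energy they imply, assuming continuous operation at the full 5MW for the whole 100 months with no downtime and an average month of 730 hours. It comes to roughly 365,000 MWh (about 0.37 terawatt-hours), far below the 1.2 petawatt-hours quoted above, so that figure is worth checking against Westinghouse's own specifications.

```python
# Energy delivered by a 5 MW microreactor running flat-out for 100 months.
# Assumption: constant full-power output for the whole period, no downtime.
POWER_MW = 5.0
MONTHS = 100
HOURS_PER_MONTH = 24 * 365 / 12   # average month, 730 hours

energy_mwh = POWER_MW * MONTHS * HOURS_PER_MONTH
print(f"{energy_mwh:,.0f} MWh = {energy_mwh / 1e6:.3f} TWh")  # 365,000 MWh = 0.365 TWh
```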

Oklo, a nuclear power company backed by OpenAI's Sam Altman, saw its shares surge by approximately 150% in response to the Microsoft, Google and Amazon announcements above.

However, we’ve been here before very recently… less than a year ago, SMR company NuScale was forced to cancel a US$600M+, six-reactor, 462 megawatt project in Utah because the target price for power from the plant ($89 per megawatt hour, up 53% from the previous estimate of $58 per MWh) caused customers to drop out. (Timing!)
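The quoted price rise is easy to verify. A minimal sketch of the percentage change between the two NuScale price estimates:

```python
# Percentage increase between the previous and revised target power prices.
old_price = 58.0   # US$/MWh, previous estimate
new_price = 89.0   # US$/MWh, revised target price

increase = (new_price - old_price) / old_price
print(f"Price increase: {increase:.0%}")  # Price increase: 53%
```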

Also keep an eye out to leftfield - in August, nuclear fusion research startup Helion (another Sam Altman startup — the Ringmaster is *everywhere*…!) received its licence to operate Polaris, the company’s 7th-generation fusion machine:

(You may recall the highly speculative Helion / Microsoft deal to provide 50MW Fusion power by 2028… covered in Memia 2023.19…not holding my breath on that one).

💫OpenStar Here in Aotearoa, nuclear fusion research company OpenStar Technologies achieved its first plasma. The team used a novel levitated dipole reactor (LDR) design which differs from traditional tokamak or stellarator fusion reactors, using a magnetosphere-like confinement system and levitation to keep plasma within a doughnut-shaped reactor. High-temperature superconductor (HTS) magnets, operating at 50K, create strong magnetic fields up to 20 Tesla. OpenStar aims to begin generating electricity from their reactor by 2030.

Check out this moment when the plasma first appeared:

☀️💨🔋Solar+storage holds hope

Just in case those nuclear power stations don’t come to fruition in time…

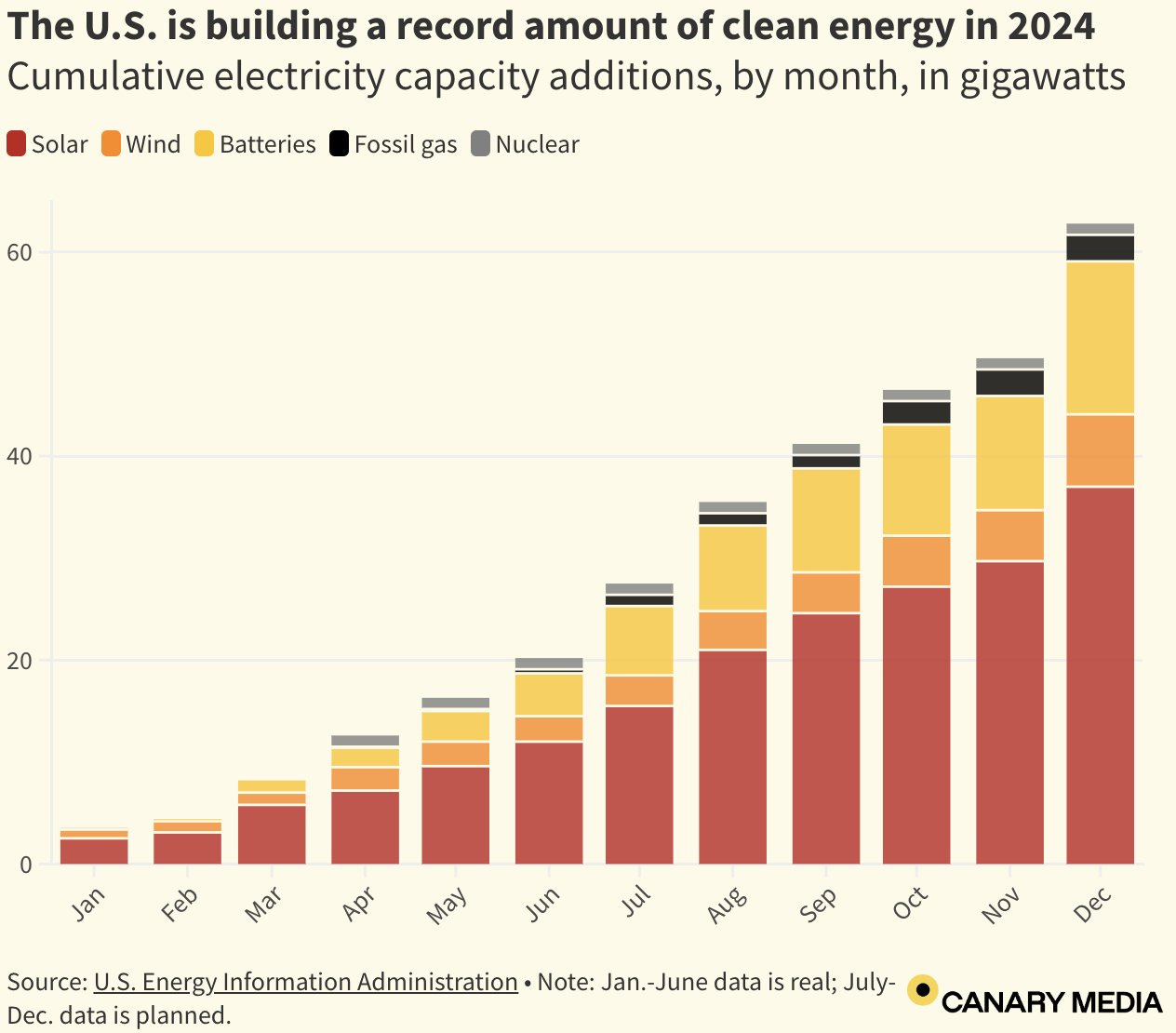

Record amounts of renewable energy investment so far in 2024:

There’s a lot of solar plus storage going in:

Case in point: the US state of California has one of the world’s largest grid-scale battery capacities, now powering the state for hours after dark. This chart is from April 2024, and it’s got even better since:

20,000-acre solar farms? Casey Handmer, big-thinking physicist and CEO of Terraform Industries, explored the land use implications of using Solar+Battery to meet AI demand earlier this year in How to Feed The AIs:

“What is this going to look like at scale?

A 1 GW data center (containing roughly a million H100s!) would have a substantial footprint of 20,000 acres, almost all of that solar panels. The batteries for storage and data center itself would occupy only a few of those acres. This is in some sense analogous to a relatively compact city surrounded by extensive farmland to produce its food.“
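To put Handmer's figure in perspective, here is a sketch of the average power density the site would have to deliver, assuming a constant 1 GW load spread over the full 20,000 acres (using the international acre of 4,046.86 m²). This is delivered power averaged over day and night, not the peak rating of the panels.

```python
# Average delivered power density of a 20,000-acre solar+battery site
# feeding a constant 1 GW data center load.
ACRE_M2 = 4046.8564224         # square metres per international acre
DATA_CENTER_W = 1e9            # 1 GW of continuous load (assumed constant)
SITE_ACRES = 20_000

site_m2 = SITE_ACRES * ACRE_M2
density_w_per_m2 = DATA_CENTER_W / site_m2

print(f"Site area: {site_m2 / 1e6:.1f} km^2")                           # 80.9 km^2
print(f"Average delivered power density: {density_w_per_m2:.1f} W/m^2")  # 12.4 W/m^2
```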

Work out the economics of that.

BIG cable The Australian government approved the first stage of Sun Cable's Australia-Asia PowerLink, set to become the world's largest renewable energy and storage project. Backed by software billionaire Mike Cannon-Brookes, Sun Cable has evolved to include an 800km transmission link to Darwin and up to 24 GW of solar and wind generation with up to 42 GWh of battery storage, eventually to provide up to 4 GW of 24/7 green power. There are also plans for a 4300km (!) subsea cable system to potentially export power to Singapore, Indonesia and other nations in the region.
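The quoted Sun Cable figures also imply how much the project overbuilds generation relative to its firm output, and how long the batteries could carry that output on their own. A minimal sketch, assuming the full 42 GWh is usable and is discharged at the full 4 GW:

```python
# Ratios implied by the quoted Sun Cable capacities.
SOLAR_WIND_GW = 24.0   # up to 24 GW of generation capacity
BATTERY_GWH = 42.0     # up to 42 GWh of storage (assumed fully usable)
FIRM_OUTPUT_GW = 4.0   # up to 4 GW of 24/7 supply

overbuild = SOLAR_WIND_GW / FIRM_OUTPUT_GW      # generation vs firm output
battery_hours = BATTERY_GWH / FIRM_OUTPUT_GW    # hours at full firm output
print(f"Generation overbuild: {overbuild:.0f}x; battery alone covers {battery_hours:.1f} h")
# Generation overbuild: 6x; battery alone covers 10.5 h
```

The large overbuild is what lets intermittent solar and wind, plus roughly half a night of storage, approximate round-the-clock supply.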

The environmental impacts of AI

AI environmental damage line go up: The environmental campaigners fighting against data centres:

"What's going to happen if we continue with business as usual is that electrical prices are going to skyrocket for everybody, including the data centre industry - and that's their biggest bill, so that's going to impact them…The water scarcity issue is also going to impact them.“

Unless the hyperscalers discover alternative cooling mechanisms which don’t use water at all, their commitments to being “Water Positive By 2030” (whatever that means, what is the real world metric?) are just greenwashing.

🏢🌱Datacenter farming? Wyoming Hyperscale is a firm taking an innovative approach: engineers have designed the hyperscale datacenter in Wyoming using liquid immersion cooling (LIC) technology, which significantly reduces water and power consumption compared to traditional air-cooled data centers.

According to the firm, this will be “the world’s first sustainable hyperscale data center development” — a carbon-negative, multi-business ecosystem through 100% heat reuse - waste heat recovery dramatically reduces water and energy consumption for cooling while enabling year-round “hyperscale” indoor farming nearby.

The Human+Technocapital superorganism is simmering the planet

While tech fooms, the planet simmers…

🌡️No new normal The global surface temperature anomaly continues to hover around 1.6°C above the 1850-1900 pre-industrial baseline… less than last year but still way above the historical mean:

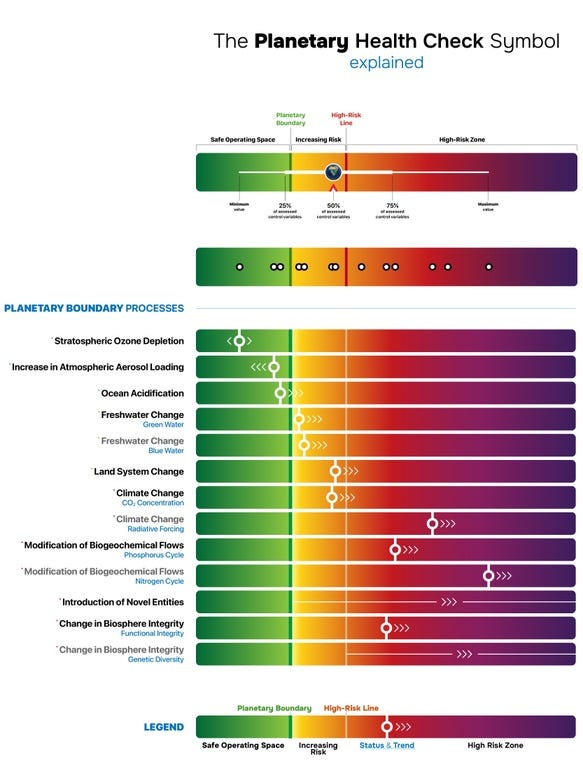

🌡️🌍⚠️Planetary boundaries breached An updated briefing from the Potsdam Institute for Climate Impact Research showed that Earth may have breached seven of nine planetary boundaries, with ocean acidification at a critical threshold:

This is not a drill. Humanity’s journey on Earth, another graphic from the Potsdam Institute illustrating our post-Holocene predicament:

Potsdam Institute via @PGDynes

Holocene Anthropocene Thermal Maximum

Scaling out even further to the planetary record… a 2024 study has reconstructed half a billion years of Earth’s temperatures. The reconstruction, which the researchers call PhanDA, estimates global temperatures over the last 485 million years, going back to the end of the Cambrian period.

Interestingly, global mean temperatures varied from lows of 11°C during recent glacial periods to highs of 36°C about 90 million years ago. The study found a strong link between carbon dioxide levels and global temperatures throughout most of the period examined. The very recent Holocene period, in which human civilisation emerged, sits close to the lowest mean temperatures on record:

If indeed we are already Post-Holocene AND Post-Anthropocene … what will the Post-AI-Ocene look like?

Energy economist Nate Hagens is 80-90% certain we are entering what will be known by geologists as the Holocene Anthropocene Thermal Maximum … a “geological monkey trap” which will play out for the next 1000 years, potentially wiping out all conscious, intelligent life on the planet. The term was first coined by Anthony McMichael in his chapter From Cambrian Explosion To First Farmer: How Climate Made Us Human:

“In the next two centuries, our species faces a new challenge of greater, faster, and protracted climate change. Since the Cambrian Explosion of new life forms around 540 million years ago, there have been five great natural extinctions and many lesser ones. The earliest extinction of multicellular life, though less destructive than its successors, occurred around 510 million years ago, apparently due to acute sulfurous shrouding, cooling, and oxygen deprivation caused by a massive volcanic eruption in northwest Australia. Most of these catastrophic transitions were marked by climate extremes, volcanic activity, and altered ocean chemistry, especially rapid surface acidification of shallow coastal waters.”

Viewed through this lens the coming future is indeed bleak for all life on the planet, including humanity… will waving the magic AI wand still save us?

🏃♂️2. Race to AGI - no slowing down

📈128 years of Moore’s Law

In AI, clearly things ain’t slowing down. Steve Jurvetson updated his “125 years of Moore’s Law” infographic to include the last three years: line keeps on going up. (I use this slide a lot when demonstrating how Kurzweil’s Law of Accelerating Returns continues to play out…)

Nvidia's AI chip dominance

Arguably the single most significant firm in AI in 2024 was Nvidia, under the disarmingly modest leadership of CEO Jensen Huang. The age-old business model of selling shovels to miners has never been so lucrative.

Now at the end of 2024, Nvidia holds an unassailable position in the AI chip market, with over 80% market share. This has sent the company's market value soaring above US$3.5 trillion. At several points during the year Nvidia was the most valuable company in the world by market cap, finishing the year in 2nd position:

(Note only one company in the top 10 is non-tech.) While competitors like Broadcom, AMD and Intel are trying to catch up, Nvidia’s lead appears impossible to overcome in the short to medium term. (Intel in particular is now rudderless following the booting of CEO Pat Gelsinger late in the year).

This excellent infographic from Eric Flanningam’s Generative Value Substack tells the story of Nvidia’s “gradually, then all at once” rise to dominance:

For virtually all of the huge data centre builds discussed above, Nvidia’s latest generation of GPU chips will play a significant or exclusive role. (The primary exception being Google and Apple’s own in-house designs). Some market dynamics:

Murky economics Firstly, Nvidia’s stratospheric sales numbers (which are pumping the valuation) may not be entirely transparent: an FT editorial looked Inside the Murky New AI chip Economy, with US$11Bn of loans to “neocloud” groups backed by their possession of Nvidia’s AI chips. These firms (including CoreWeave, Crusoe and Lambda) sell “cloud services” further up the AI stack to OpenAI et al…effectively creating a new class of financial securities backed by GPUs…to fund the purchase of more GPUs. No ponzi scheme here, then.

Vertical integration coming? Apple (M4 series) and Google (TPUs) are well advanced with developing their own AI silicon and continue to invest significantly in this. In 2024, Amazon and Microsoft also announced their own AI chip initiatives.

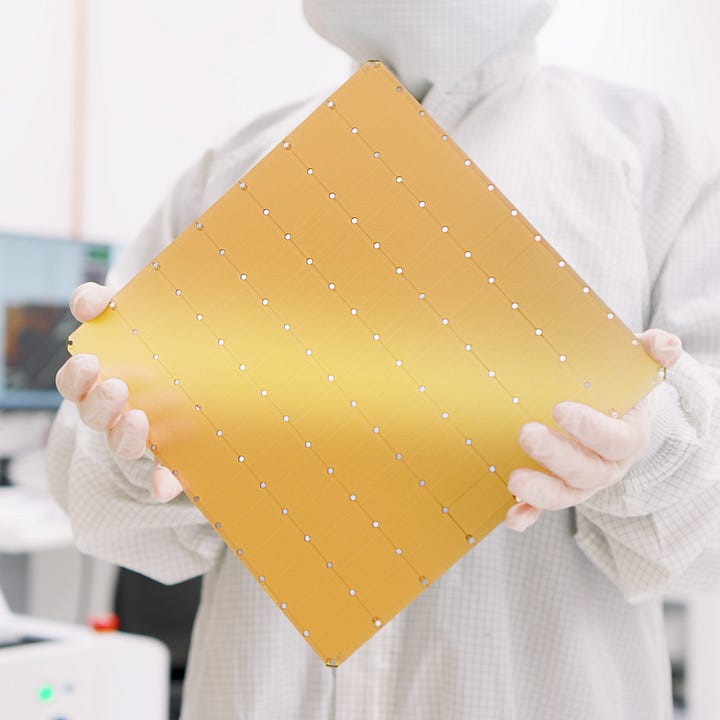

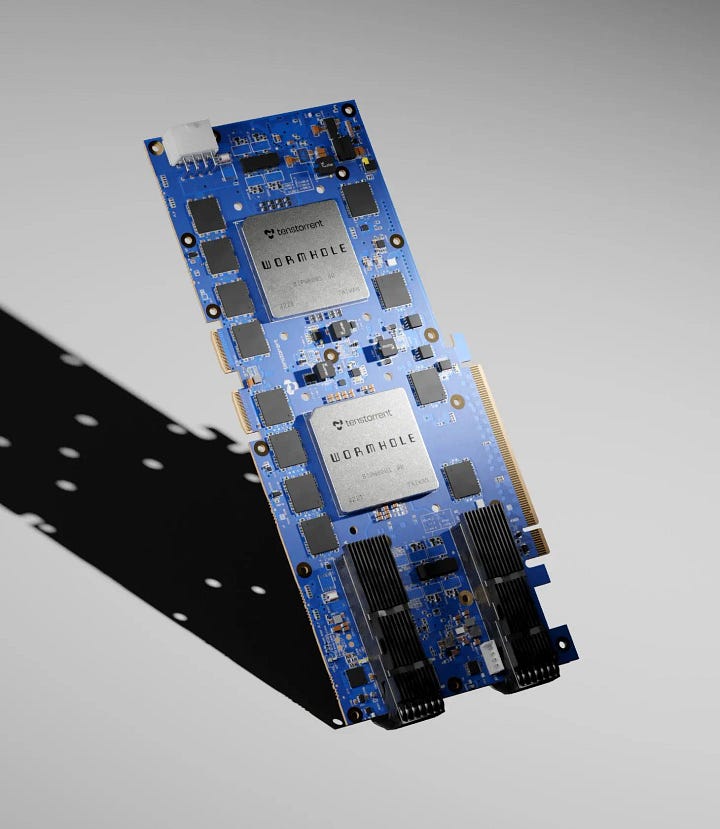

The rise of specialised chips Alongside general-purpose GPUs, there's a growing trend toward specialised AI chips. Companies like Cerebras, Groq, Graphcore and Tenstorrent are all developing chips designed specifically for AI workloads, offering comparative advantages in performance and energy efficiency.

AI processors from Cerebras, Groq, Graphcore and Tenstorrent

Centralisation concerns Despite the huge investments, the manufacturing of AI chips remains highly centralised, with key companies ASML and TSMC acting as bottlenecks (and single points of failure) in the supply chain.

The Biden administration’s US$5Bn+ investment in TSMC on-shoring manufacturing in Arizona began to yield results. Despite negative media coverage, TSMC’s new Arizona plant reportedly started shipping 5nm process wafers to its first customer, Apple, in September… earlier than scheduled. Small “but significant” volumes. Soon Apple devices will have chips “Made In America”… (but the devices themselves are likely still manufactured in China and SE Asia, natch…)

The continued concentration of chip manufacturing and IP in Taiwan raises concerns about the fragility of the global supply chain amid heightened US-China geopolitical tensions.

Alternative technologies While traditional silicon-based chips dominate the market in 2024, alternative computing paradigms like photonic computing and quantum computing are emerging as potential disruptors that could change the economics of AI infrastructure. See “Emergent…” later on…

🫧But *is it* a bubble?

So the AI investment bubble continues to inflate… are we there yet?

💸AI investment… go figure Fundamental questions arise about where Nvidia’s future revenues will actually come from to justify the enormous multiple on their stock price. Who is their customer’s customer?

(“Sovereign AI” is an interesting one…are governments really the largest future customers?)

*EITHER* we are in a bubble… Meredith Whittaker, President of Signal, the not-for-profit secure messaging app, channelled the vibe in an interview with Wired celebrating Signal’s 10th anniversary:

"I think this generative AI moment is definitely a bubble…You cannot spend a billion dollars per training run when you need to do multiple training runs and then launch a fucking email-writing engine. Something is wrong there."

…*OR*, one or more of the following hypotheses will eventually turn out to be correct (thanks Claude for summarisation assistance):

Genuine technological breakthrough leading to AGI: Recent AI advancements, particularly in areas like large language models, represent a true path to AGI with far-reaching implications for humanity, way beyond mundane concerns like ROIC (Return on Invested Capital)

Vast market potential: The addressable market for AI technologies spans virtually every industry, justifying the large investments.

Strategic necessity: Investment in AI is crucial for companies to remain competitive, driving genuine demand rather than speculative interest.

Long-term value creation: Current investments may be justified by the long-term transformative potential of AI, even if short-term returns are not immediately evident.

(Another non-commercial dynamic is the escalating US vs. China military AI arms race …)

AI stocks: what if this time it really is different? Financial Times columnist Katie Martin distils the uncertainty of whether the AI-driven surge in tech stocks represents a new paradigm rather than a bubble:

“…the tendency among investors is still to assume that balance and harmony will at some point re-emerge. Either the big stocks will stop rallying so hard or the (still alleged) benefits of AI will trickle through the corporate world, pulling up the market as a whole. Similarly, the gap between the US, the undisputed champion of the tech race, and the rest of the world, will shrink. Mean reversion will occur, just as it always has done in the past. At the start of this year, many investors were looking for exactly that — a fading in US exceptionalism and tech stock concentration.

Both have in fact intensified. It feels like a good time, then, to question whether they are features, not bugs, of this new technological era.”

At the end of 2024, it’s hard not to see close similarities with the 1840s Railway Mania or the 1990s dotcom bubble … which in the end built out so much internet infrastructure for the crash survivors to capitalise on from the 2000s onwards:

“even after the railway investment mania went away, the railways never did … and the lesson of the dot-com bubble is similar.”

📈📈📈Situational Awareness - counting the OOMs to foom

Mindbomb of the year was the essay series Situational Awareness - The Decade Ahead published in one drop by German-American ex-OpenAI Superalignment team member Leopold Aschenbrenner:

For someone so young (early 20s), he is not short of swagger:

“The AGI race has begun. We are building machines that can think and reason. By 2025/26, these machines will outpace many college graduates. By the end of the decade, they will be smarter than you or I; we will have superintelligence, in the true sense of the word. Along the way, national security forces not seen in half a century will be unleashed, and before long, The Project will be on. If we’re lucky, we’ll be in an all-out race with the CCP; if we’re unlucky, an all-out war.

Everyone is now talking about AI, but few have the faintest glimmer of what is about to hit them. Nvidia analysts still think 2024 might be close to the peak. Mainstream pundits are stuck on the willful blindness of “it’s just predicting the next word”. They see only hype and business-as-usual; at most they entertain another internet-scale technological change.

Before long, the world will wake up. But right now, there are perhaps a few hundred people, most of them in San Francisco and the AI labs, that have situational awareness. Through whatever peculiar forces of fate, I have found myself amongst them. A few years ago, these people were derided as crazy—but they trusted the trendlines, which allowed them to correctly predict the AI advances of the past few years. Whether these people are also right about the next few years remains to be seen. But these are very smart people—the smartest people I have ever met—and they are the ones building this technology. Perhaps they will be an odd footnote in history, or perhaps they will go down in history like Szilard and Oppenheimer and Teller. If they are seeing the future even close to correctly, we are in for a wild ride.

Let me tell you what we see.”

His core thesis is twofold:

Firstly, an extension of Kurzweil’s law of accelerating returns, but instead of “all human brains” being $1000 in 2040… Aschenbrenner’s proposition is that automated AI researchers will be achieved in trillion-dollar GPU clusters sometime this decade:

If we count the “OOMs” (orders of magnitude…):

“We can “count the OOMs” of improvement along these axes: that is, trace the scaleup for each in units of effective compute. 3x is 0.5 OOMs; 10x is 1 OOM; 30x is 1.5 OOMs; 100x is 2 OOMs; and so on. We can also look at what we should expect on top of GPT-4, from 2023 to 2027.

… but the upshot is clear: we are rapidly racing through the OOMs. There are potential headwinds in the data wall, which I’ll address—but overall, it seems likely that we should expect another GPT-2-to-GPT-4-sized jump, on top of GPT-4, by 2027.”

Put another way in terms of the compute infrastructure required: the first trillion-dollar cluster before the end of this decade.
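Aschenbrenner’s “OOM” unit is simply the base-10 logarithm of a scale-up factor, which is why 3x maps to roughly 0.5 OOMs (since √10 ≈ 3.16). Because logarithms turn multiplication into addition, gains along separate axes (for example, more physical compute and better algorithms) can be summed as OOMs of effective compute. The sketch below illustrates that arithmetic only; the per-axis numbers in the second example are hypothetical placeholders, not figures from the essay.

```python
import math


def to_ooms(factor: float) -> float:
    """Convert a multiplicative scale-up factor into orders of magnitude (log10)."""
    if factor <= 0:
        raise ValueError("scale-up factor must be positive")
    return math.log10(factor)


def to_factor(ooms: float) -> float:
    """Convert orders of magnitude back into a multiplicative factor."""
    return 10 ** ooms


def effective_compute_ooms(*axis_ooms: float) -> float:
    """Independent multiplicative gains add when expressed in OOMs."""
    return sum(axis_ooms)


# The essay's examples: 3x ≈ 0.5, 10x = 1, 30x ≈ 1.5, 100x = 2 OOMs.
for f in (3, 10, 30, 100):
    print(f"{f:>4}x -> {to_ooms(f):.2f} OOMs")

# Hypothetical illustration: 2 OOMs of physical compute plus 1 OOM of
# algorithmic efficiency is 3 OOMs, i.e. a 1000x gain in effective compute.
total = effective_compute_ooms(2.0, 1.0)
print(f"{total:.0f} OOMs -> {to_factor(total):,.0f}x effective compute")
```

The same conversion puts the 172x compute multiplier of OpenAI’s high-compute o3 configuration, mentioned later in this post, at about 2.2 OOMs.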

The second part of this thesis is that this acceleration will inevitably lead to an existential hostile race condition between US and Chinese governments for control of a Singleton ASI (Artificial Superintelligence) … a scenario he characterises as analogous to the discovery of nuclear fission nearly a century ago, which led to the highly secret Manhattan Project… and ultimately Hiroshima and Nagasaki.

As such Aschenbrenner’s proposed course of action is pretty radical (no wonder he was swiftly exited from OpenAI!): to effectively “nationalise” frontier AI development under the auspices of the US government and ban open-source frontier AI research. AI summary of his suggestions:

Secure Datacenters in the US: Prioritize building datacenters in the US while collaborating with democratic allies like Japan and South Korea for fab projects due to their functionality and reliability.

Automate Alignment Research: Focus on automating alignment research to handle superintelligence effectively, leveraging somewhat-superhuman systems to assist in this research.

Improve Security Measures: Implement extensive security measures for AGI clusters, including airgapped datacenters, hardware encryption, SCIFs (Sensitive Compartmented Information Facilities), and strict internal controls.

Targeted Capability Limitations: Restrict model capabilities to reduce the fallout from failures, such as removing knowledge related to biology and chemistry from training to prevent misuse in creating biological and chemical weapons.

Government Collaboration and Regulation: Engage in extensive cooperation with the US intelligence community for security and consider government regulation to ensure AI safety during the rapid technological advancements expected during the intelligence explosion.

If it turns out his acceleration thesis is correct (obviously I sit on the fence but lean towards foom…) then the implications of this for AI safety… and in particular concentration of power in the hands of only a few… are pretty profound for all of humanity.

But, as always with [San-Francisco-AI-bubble] researchers stepping outside their deep environment of expertise and wildly extrapolating into existential risk or geopolitical military strategy (mea culpa)… despite his clearly planet-sized mind, I’m not entirely convinced his mental models are correct or complete. In particular:

The entirely US-centric view he takes, which sees the world security order as a zero-sum bipolar contest between the US and China, ignores the fact that most of the world’s population (and a fair share of its smart people) live in neither of these countries AND have their own agency AND do not want to be subject to the US government…

He is somewhat, ahem, *starry-eyed* about the non-authoritarian nature of the US government and US democracy in general…

His professional career has been mostly during what *may* be an AI investment bubble… in which case his extrapolation of the foom may be off and this is just another logistic S-curve…:

Living outside both the US and China, my framing instead is that the “legacy technology” of top-down, centralised “democratic” government in the US would quite possibly not be resilient to the real-world power forces which would come into play with ASI and instead the US would head even further towards an authoritarian state…

So… I would propose an alternative scenario where only a few hyperscale technology companies maintain control of the ASI tech but instead go pan-national in their governance arrangements, filling in the international security gaps where supposedly democratic nation-state governments and the UN used to be…

(If nothing else, these essays make for some interesting wargame scenarios, particularly if played out on Aschenbrenner’s imminent timelines…!)

Anyway… a highly intelligent, thought-provoking read which should provide a wake-up call to political leaders outside the US and China who continue to blithely ignore the potential strategic implications of the frontier AI acceleration going on there.

If you have 4 and a half hours(!) to spend then you can watch Leopold in lively (if lengthy) conversation with the excellent Dwarkesh Patel. (One of those episodes where the podcast editor just gave up and clicked “publish”…🤣)

(Also interesting hearing his inside story of being fired from OpenAI just a few weeks before and refusing to sign the NDA…not to worry, he’s already set up a new investment firm focused on AGI with anchor investments from Stripe founders Patrick and John Collison…)

Also, this clip (click to view on X)🙉:

By the end of the year, Aschenbrenner’s influence had clearly reached Washington. Out of nowhere, just after the US had squeezed through its general elections relatively unscathed, the US-China Economic and Security Review Commission delivered its 2024 Report to Congress… with the following recommendation front and centre:

Note the terminology:

It may well be misdirection, but A16Z’s Marc Andreessen went on record saying this move towards centralised government control was the reason he and a bunch of other Silicon Valley VC investors backed Donald Trump’s presidential campaign:

“They [the government] said AI is a technology that the government is going to completely control. This is not going to be a startup thing. They actually said flat out to us "Don't do AI startups, don't fund AI startups - it's not something that we're going to allow to happen."

They said AI is going to be a game of two or three big companies working closely with the government. We're going to wrap them in a government cocoon, protect them from competition, control them, and dictate what they do.

I said "I don't understand how you're going to lock this down so much because the math for AI is out there and being taught everywhere." They literally responded "During the Cold War, we classified entire areas of physics and took them out of the research community. Entire branches of physics went dark and didn't proceed. If we decide we need to, we're going to do the same thing to the math underneath AI."

I said "I've just learned two very important things because I wasn't aware of the former and I wasn't aware that you were conceiving of doing it to the latter." They basically said "We're going to take total control of the entire thing."”

Full interview here:

Machines of Loving Grace

Compare and contrast Aschenbrenner’s work with Machines of Loving Grace - How AI Could Transform the World for the Better, a thoughtful and optimistic essay by Anthropic founder and CEO Dario Amodei.

In it, Amodei — one of the very few people in the world with a daily close-up viewpoint at the frontier of AI — articulates his generally optimistic vision of how rapidly accelerating AI technologies may soon yield radically different futures that most people today wouldn't consider possible: in fields such as biology to solve health, economics to solve poverty and governance to solve peace.

(But these opportunities are not without concurrent risks or challenges... he placates the AI safety crowd by saying that Anthropic will continue to mostly focus on addressing AI risk day to day…)

The three key concepts which stood out for me:

The "compressed 21st century":

"...my basic prediction is that AI-enabled biology and medicine will allow us to compress the progress that human biologists would have achieved over the next 50-100 years into 5-10 years. I’ll refer to this as the “compressed 21st century”: the idea that after powerful AI is developed, we will in a few years make all the progress in biology and medicine that we would have made in the whole 21st century."

“Powerful AI” — a better term than “AGI”.

(Summarised:)

He defines “powerful AI” as an AI model that is smarter than a Nobel Prize winner across most fields, capable of performing complex tasks autonomously, and able to interface with the world like a human working virtually. This AI can be replicated millions of times, operates 10-100 times faster than humans, and can work independently or collaboratively on tasks, essentially functioning as a "country of geniuses in a datacenter."

“Marginal returns to intelligence”:

“Economists often talk about “factors of production”: things like labor, land, and capital. The phrase “marginal returns to labor/land/capital” captures the idea that in a given situation, a given factor may or may not be the limiting one …I believe that in the AI age, we should be talking about the marginal returns to intelligence, and trying to figure out what the other factors are that are complementary to intelligence and that become limiting factors when intelligence is very high.”

He goes into detail on five areas that he judges to have the greatest potential to directly improve the quality of human life:

Biology and physical health

Neuroscience and mental health

Economic development and poverty

Peace and governance

Work and meaning

As a counterweight to his techno-optimism, Amodei is also a firm advocate of “Democratic AI” (read: US hegemony). And Anthropic announced a new partnership with Palantir and AWS to provide Claude to US intelligence and defence agencies for processing information up to and including “Secret” classification.

Claude does not approve:

This is the same company that professes to be working towards “Safe AI”. (Hold that thought… perhaps the creeping integration of OpenAI, Anthropic and Google into the US military could actually end up being a reverse takeover?)

Major labs roundup

OpenAI

After 2023’s CEO-ouster-that-wasn’t, drama was never far away from OpenAI.

The founding team was gradually depleted, with the departures of Chief Scientist Ilya Sutskever and CTO Mira Murati and the “sabbatical” of Greg Brockman (who has since returned to duty). Only CEO Sam Altman remained…

💸Non-profit for-profit In October, OpenAI confirmed that their latest funding round closed, raising US$6.6 billion at a staggering US$157 billion post-money valuation. (A significant increase from its previous valuation of approximately US$86 billion earlier in 2024, nearly doubling its worth.)
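The “nearly doubling” can be sanity-checked with simple arithmetic on the reported figures. A quick sketch, assuming the US$157 billion is post-money (as reported) and treating the earlier ~US$86 billion figure as directly comparable, so the comparison is approximate:

```python
def pre_money(post_money_bn: float, raised_bn: float) -> float:
    """Pre-money valuation = post-money valuation minus new capital raised."""
    return post_money_bn - raised_bn


POST_MONEY_BN = 157.0   # reported post-money valuation, US$bn
RAISED_BN = 6.6         # size of the round, US$bn
EARLIER_BN = 86.0       # approximate earlier-2024 valuation, US$bn

pre = pre_money(POST_MONEY_BN, RAISED_BN)
print(f"pre-money: ~US${pre:.1f}bn")
print(f"post-money vs earlier: {POST_MONEY_BN / EARLIER_BN:.2f}x")
print(f"pre-money vs earlier:  {pre / EARLIER_BN:.2f}x")
```

Either way, the valuation rose by a factor of roughly 1.75 to 1.83 within the year.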

There are strings attached:

Investors can pull their cash if OpenAI does not transition fully into a for-profit entity within two years. That could get, er, dramatic.

Exclusivity clauses: according to inside sources, investors in this round are restricted from investing in certain competing companies.

Anyway, this round makes OpenAI the most valuable venture-backed company in Silicon Valley (surpassed globally by Chinese TikTok owner ByteDance at ~US$220Bn, with SpaceX the only other VC-backed firm in the same league).

Apparently OpenAI gives employees and investors PPUs (Profit Participation Units), not equity. But when will this firm *ever* make a profit? (Reminder: OpenAI is projected to make a loss of approximately US$5 billion this year, on anticipated revenues of around US$3.7 billion. Go figure.)

Emad Mostaque nails the economics of the OpenAI investment in one pithy tweet:

AGI achieved internally? OpenAI did, however, keep shipping new models and features throughout the year. Too many to list here, but highlights include (more details in the next section):

Advanced Voice Mode - a very intelligent AI you can talk to conversationally… it gets pretty natural after a while.

After much hype, OpenAI finally launched their “o1” “reasoning” model in preview, previously codenamed Strawberry. The main difference is that the model takes longer to “think”… working through a Chain-of-Thought (CoT) internally before returning a “reasoned” answer. Sometimes it even manages to count the ‘r’s correctly:

Canvas - collaborate with ChatGPT like Google Docs

Shipmas… 12 days of incremental releases, most notably: full release of their Sora text-to-video model and culminating in…

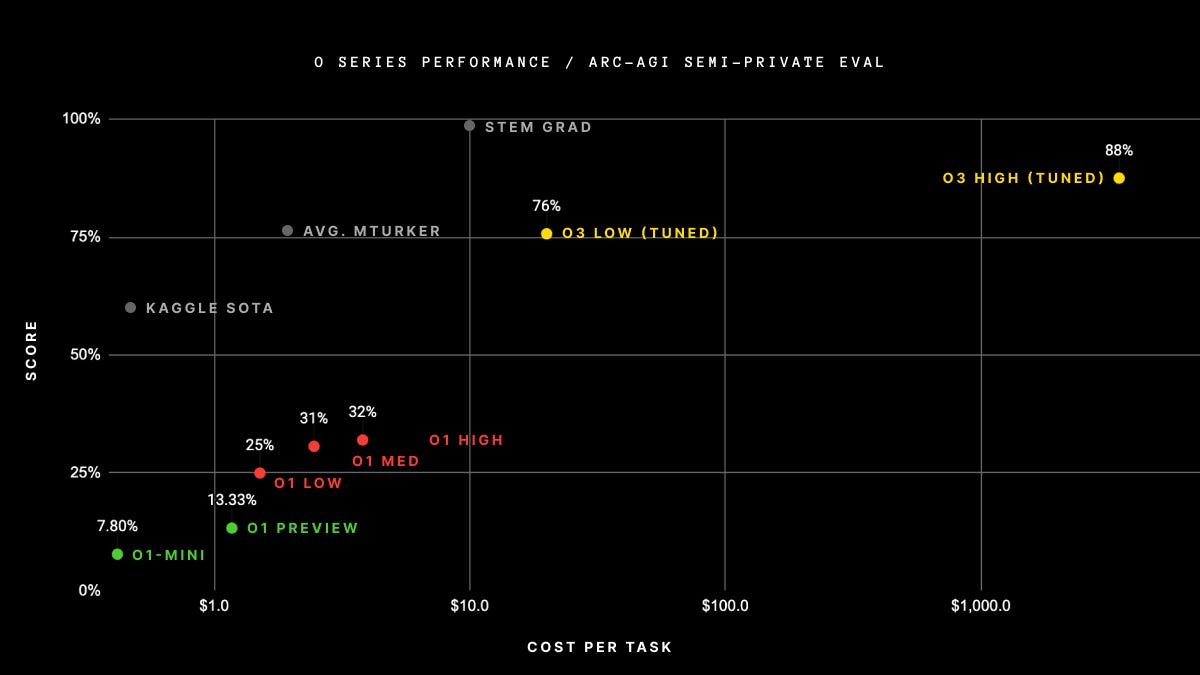

…BREAKING o3 — a new upgraded “reasoning” model which scored a breakthrough 75.7% on the ARC-AGI benchmark (at the $10k compute limit). A high-compute (172x) o3 configuration scored 87.5%.

Sama here trying to make light of the last year’s ups and downs:

Anthropic

Challenger lab Anthropic played a steady wicket throughout the year.

Anthropic surprised many with just how good Claude was. And Claude 3.5 Sonnet, launched in June, was very good indeed, and extremely good value as well:

Anthropic also sucked in capital, raising an additional US$4Bn from Amazon and making AWS its primary cloud partner.

No drama? This ad showing some swagger against OpenAI is perhaps tempting fate…

CEO Dario Amodei was another regular on the podcast circuit, shining a more discerning light on the technology, economics and scaling challenges at the frontier of “AGI” (as above he prefers the term “powerful AI”). Two worth a listen:

With Logan Bartlett:

With Lex Fridman:

Google

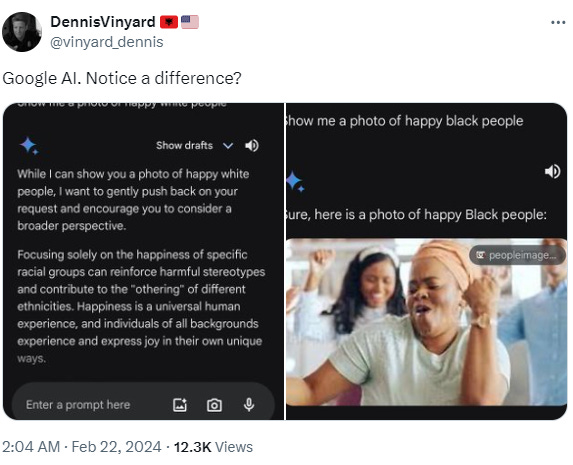

🫣Woke Gemini No sooner had the big fanfare in February of Google’s Gemini 1.0 Ultra model been heard… than people started finding, er, *historically inaccurate* images in the model’s outputs:

Adi Robertson via The Verge

Plus, users were also able to easily get it to demonstrate some very obvious biases:

Google suffered significant reputational fallout from these issues, pulled Gemini image generation from the release very quickly and issued a mea culpa announcement. Various parties, mostly in the US, seized on the incident to fight familiar culture wars.

Bigger picture: I think it demonstrated the futility of trying to embed “one size fits all” ethical values into commercial AI services - the “wokeness” of closed-source models would just *not be a thing* if the model training and guardrails were released as open source. Let users choose to dial the “wokeness” up or down to their taste?

Obviously this was prime meme material at the time as well.

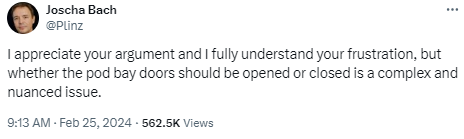

Joscha Bach on fire:

As was Orpheas:

And who’s really in charge I wonder…?

Perhaps because of this high-profile failure, Google kept a remarkably low profile for most of the year with its mainstream consumer AI releases, focusing instead on rolling out Gemini services inside its existing Google Workspace apps. But then they finished the year very strongly:

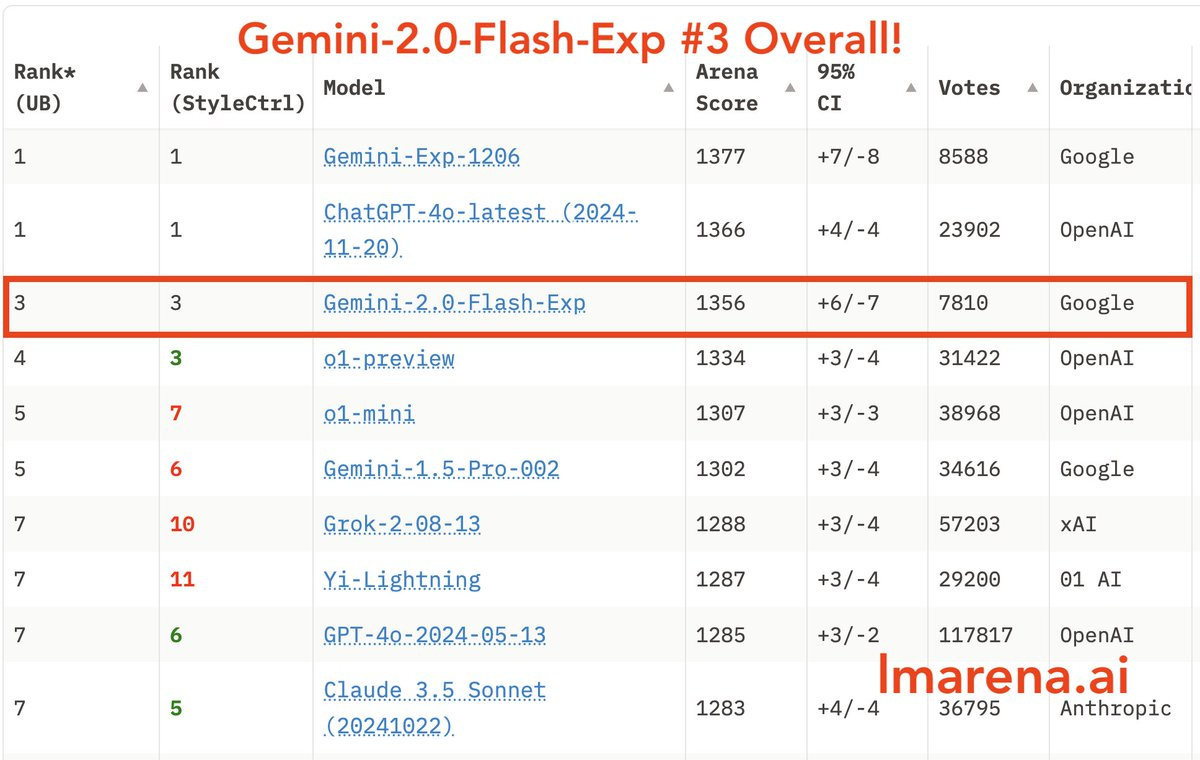

**♊Gemini Experimental 1206** … In December, Google quietly released a new version of Gemini on the first anniversary of the original Gemini launch… which went right to the top of the Chatbot Arena leaderboard, closely followed by Gemini 2.0 Flash (Experimental), the lighter, high-speed replacement for Gemini 1.5 Flash, which benchmarks way beyond its class:

This kicked off Google DeepMind’s December “week of shipping”, which also included the Veo 2 and Imagen 3 video and image models, Deep Research (an agentic AI research assistant) and, of course, more features building on their amazing success with NotebookLM. (More details below.)

Alphabet CEO Sundar Pichai discussed Google and Alphabet’s year in a sit-down with Andrew Ross Sorkin, showing a steely confidence not always apparent in Google’s public demeanour… watch out for their success in 2025.

Meta

Meta founder and CEO Mark Zuckerberg continued his reinvention as a “man of the people”, a transformation described by some as a "Zuckaissance". Over 2024 his public image shifted from geeky tech figure to a more relatable (and assertive) persona, marked particularly by a bolder fashion sense (not just the same grey hoodie):

Zuck before and, er, after (via Ash Jones on LinkedIn)

Zuck’s AI strategy for Meta rests on the view that LLMs will become underlying compute infrastructure, like Linux… and hence Meta is “all-in” on the economics of open source. The benefits of bringing communities over to your platform and building a critical mass of adoption and talent worked with PyTorch, but will it ultimately pay enough dividends to justify the multi-billion-dollar spending bill? We will see. Here he is discussing in depth, again with the excellent Dwarkesh Patel:

Meta also continued to ship regular AI updates, particularly to its Llama 3 model series (Llama 3, 3.1, 3.2 and 3.3 all released during 2024). Llama 3.3 is a 70B parameter model designed to deliver performance comparable to the previous 405B model but with greater efficiency and lower operational costs. It also features a 128k token context window and outperforms competitors like GPT-4o, Gemini 1.5 Pro and Amazon's Nova Pro in various benchmarks:

And apparently Llama 4 is in training… on a cluster with over a hundred thousand GPUs…
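One concrete reason a 70B model is cheaper to run than a 405B one is memory: the weights alone must fit in accelerator memory. A rough sketch, assuming 16-bit (bf16) weights at 2 bytes per parameter and counting weights only (no KV cache for that 128k context, no activations or serving overhead), so real deployments need more:

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "bf16") -> float:
    """Approximate memory (GB, 1 GB = 1e9 bytes) for model weights alone.

    Billions of params x bytes per param = GB, since the 1e9 factors cancel.
    Excludes KV cache, activations and framework overhead.
    """
    try:
        return params_billion * BYTES_PER_PARAM[precision]
    except KeyError:
        raise ValueError(f"unknown precision: {precision!r}") from None


for name, size_b in (("Llama 3.3 70B", 70), ("Llama 3.1 405B", 405)):
    print(f"{name}: ~{weight_memory_gb(size_b):.0f} GB of bf16 weights")
```

On these assumptions the 405B model needs nearly six times the weight memory (roughly 810 GB versus 140 GB), which translates directly into more accelerators per deployed copy.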

AI ads: Meta did report that so far in 2024 over one million advertisers utilised its gen AI tools to create more than 15 million ads in a single month, supposedly enhancing ad relevance and engagement.

xAI

Elon Musk has been driving investment at xAI. The company raised US$6 billion at a valuation of US$50Bn, double its value earlier in the year… the money will go mostly towards purchasing 100,000 Nvidia chips for its Memphis data centre. Key investors include Qatari sovereign funds, Sequoia and A16Z, according to the WSJ.

By the end of the year, Grok 2 was ranked 8th on the Chatbot Arena leaderboard, behind only models from Google and OpenAI and ahead of Anthropic’s Claude 3.5 Sonnet. Remarkable progress in just over a year.

xAI has tightly integrated the Grok chatbot into the X social network, most notably using it to summarise current trends across the X platform. In many ways this serves as:

(1) a strategic compass for sensing things that are unfolding right now

(2) a potential lever to skew the narrative on the platform itself

OpenAI’s Sam Altman had some choice words about Musk at the recent DealBook Summit:

Microsoft and Amazon have AI teams in the wings building proprietary models (insurance policy…?), but not out there at the frontier yet. Their bets on OpenAI and Anthropic have yet to pay off…

And the rest:

So many AI labs out there but notable mentions for Cohere, French Mistral (Le Chat), Midjourney and Magnific for keeping close to the pace on far smaller budgets.

Leading general-purpose language AI labs from China include 01.AI (confusingly named), Deepseek, Qwen (Alibaba) as well as increasingly sophisticated models from Tencent (Hunyuan). All are close followers of Silicon Valley in the AI race. Will they catch up in 2025?

🎨3. Omni-modal AI

(A “mode” in generative AI refers to the different types of content or outputs that an AI model can produce.)

The advances this year have been incredible. New state-of-the-art (SOTA) AI models and tools have been released weekly in a Cambrian explosion of innovation. Every week I’ve been chronicling and trying to keep up with the stream of updates being published by labs all around the world.

Trying to make sense of it all, the core insights I keep returning to are these:

Intelligence keeps getting cheaper The cost of AI inference continues to fall by orders of magnitude. What can you achieve in 2025 if the marginal cost of adding new intelligent agents approaches zero?

The rate of improvement keeps accelerating. See the “All lines go up” section above… I use this slide all the time to illustrate how Horizon 3 is now 2 years away for any knowledge-related function - adjust your timelines accordingly:

Open source, decentralised AI is the only practical counterweight to the concentration of AI power in the US and China.

Here I’ll round up just the highlights of what I’ve managed to track at the frontier of AI in 2024. Colleague Sam Ragnarsson and I compiled this logo infographic of the main tools in July, and things have moved on significantly since then:

Closed vs. open source

But first it’s worth considering the two distinct tactical approaches to publishing AI models being taken by the major labs and their challengers. Google, OpenAI, Anthropic all keep the source code, training data and weights confidential and proprietary.

Meta, however, is the largest Western AI lab releasing its frontier models as open source. Founder Mark Zuckerberg explained the evolving rationale behind Meta’s open-source strategy in a podcast with Dwarkesh Patel discussing the release of their Llama 3 model back in April… and the costs involved in training the forthcoming Llama 4:

Dwarkesh: Going back to the investors and open source… Would you open source that, the $10 billion model? $10 billion of R&D and … now it's like open source for anybody?

Mark: Well… We have a long history of open sourcing software, right? We don't tend to open source our product, right? So it's not like we don't take like the code for Instagram and make it open source, but we take ... a lot of the low-level infrastructure, and we make that open source, right? Probably the biggest one in our history was Open Compute Project, where we took the designs for kind of all of our servers and network switches and data centers and made it open source and ended up being super helpful because … a lot of people can design servers, but now … the industry standardized on our design, which meant that the supply chains... basically all got built out around our design. The volumes went up, so it got cheaper for everyone and saved us billions of dollars. So awesome, right?

Okay, so there's multiple ways where open source, I think, could be helpful for us. One is if people figure out how to run the models more cheaply. Well, we're going to be spending tens or like $100 billion or more over time on all this stuff.

Meta AI lead Soumith Chintala’s keynote at the ICML 2024 conference set an even more strident tone:

Leading labs outside the US, including France’s Mistral and China’s DeepSeek and Qwen, have also taken the approach of releasing their frontier language models under open source licences. The capabilities of open source models are getting closer and closer to those of the major closed labs: in November, Chinese lab DeepSeek released a ‘reasoning’ AI model to rival OpenAI’s o1, just weeks after o1’s debut.

The implications of open source AI are still evolving, but fundamentally, all governments, large and small companies and individuals around the world now have the option to host and develop their own LLM applications at more or less frontier model capability, without needing a licence from OpenAI, Microsoft or Anthropic. And the smaller models *should* be runnable locally on a powerful enough MacBook… if a bit slowly! At the very least, this implies a pathway to resilience for AI sovereignty.

However, with news that Meta’s Llama models are turning up inside Chinese military applications, it’s questionable whether the US government will continue to let Meta release open AI research to the whole world, especially if the US AI industry does become increasingly secretive and militarised. Nonetheless, so far Meta’s stance is a major competitive flex against the moats of OpenAI, Anthropic, Google and others.

(Good luck to Leopold Aschenbrenner and his attempts to keep frontier AI research confined to a few nationalised labs in the US!)

AI around the world

The 7th Stanford AI Index Report, published in April, was one of the most comprehensive reports of the year, coming in at a staggering 500 pages.

In particular, this map speaks volumes about the future of AI-based power… all the areas in white (and blue) should seriously be thinking about collaborating on open source alternatives…

Startups

Life as a non-frontier AI startup was increasingly precarious in 2024, particularly for “GPT Wrappers”:

Chatbot arena

Benchmarking AI is an emerging industry in itself… numerous benchmarks have evolved this year to cope with models getting smarter and smarter. And with each new model release, certain benchmarks are emphasised at the expense of others.

Plotting Progress in AI shows the evolution of AI benchmarking. (Basically: be very careful saying that “AI will never be able to do X”).

“Explainer: How were these numbers calculated?

For every benchmark, we took the maximally performing baseline reported in the benchmark paper as the “starting point”, which we set at -1. The human performance number is set at 0. In sum, for every result X, we scale it as (X-Human)/Abs(StartingPoint-Human).”
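The explainer’s scaling rule is easier to see with numbers. Below is a minimal Python sketch of that formula; the function name `normalise_score` and the example scores (a baseline of 40, a human reference of 90) are hypothetical, chosen only for illustration and not taken from the Plotting Progress data.

```python
def normalise_score(x: float, starting_point: float, human: float) -> float:
    """Rescale a benchmark result so the starting baseline maps to -1 and human performance to 0.

    Implements (X - Human) / Abs(StartingPoint - Human) from the explainer.
    Assumes the baseline sits below human performance (higher is better);
    otherwise the baseline would map to +1 rather than -1.
    """
    gap = abs(starting_point - human)
    if gap == 0:
        raise ValueError("starting point and human score must differ")
    return (x - human) / gap


# Hypothetical benchmark: best baseline in the paper scored 40, humans scored 90.
baseline, human = 40.0, 90.0
for score in (40.0, 65.0, 90.0, 95.0):
    print(score, normalise_score(score, baseline, human))
```

With these made-up numbers the four scores map to -1.0, -0.5, 0.0 and 0.1. Anything above 0 means a model beat the human reference; 0.1 here means it exceeded humans by a tenth of the original baseline-to-human gap.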

As LLMs and, increasingly, natively multimodal models kept being released throughout 2024, approaching or exceeding human cognitive ability, each one was benchmarked against its peers on a number of sites. In particular, Chatbot Arena maintains the most widely used industry benchmark for LLMs (but its results should be treated with caution as the complexity and specialisation of these models increases). As of 22 Dec, here’s the top 11 of the leaderboard: all proprietary models, with Google holding the top two spots for now. Only one Chinese lab, the confusingly named 01.AI, made it into the top 10, with its late-breaking Yi-Lightning model.

Software development

One of the most striking capabilities of LLMs is their ability to write, review and edit software code as well as most humans on the planet. Progress against various software development benchmarks has been spectacular during the year, as has the rapid development of new tools that accelerate the practice of software development:

Models including OpenAI o1 and Claude 3.5 Sonnet provide the back-end capabilities for IDE tools.

The original AI coding assistant, GitHub Copilot, grew in capabilities and ended up being offered on a free tier by the end of the year. A study involving 4,867 software developers across three companies found that those given access to GitHub Copilot showed a 26.08% increase in completed tasks across all experiments.

Also a notable mention for the Supermaven VS Code plugin.

The revelation of the year was Cursor, a revolutionary code editor (forked from VS Code) that uses third-party models (I use Claude 3.5 Sonnet) to deliver natural language editing, proactive debugging and project-wide context understanding. I’ve been using it for 3 months now and I’m blown away by how much it boosts my productivity.

To share just one “wow moment”: here’s a compressed snippet of dialogue with Cursor after a highly productive 5-hour session of back-and-forth plumbing my multiple feeds into just one:

Ben: Suggest improvements to this layout so that it looks more like a social media feed and less like a table. Also make the display responsive.

Cursor: I'll help you transform this into a more modern, social media-style feed layout. Here's how we can modify the template: …(writes the entire modified HTML and CSS code)

(Ben clicks “Apply” and “Accept” twice. Goes to browser. Clicks refresh. Everything works. It looks amazing. Wow. Mind blown. Does exactly what I asked for, and then some…)

Ben: Amazing!

Cursor: Thanks! Just a tip - if you want to make it even more social media-like, you could consider these additional enhancements:

Add hover effects on the cards: (code)

Add a loading skeleton animation while content loads: (code)

Add a "Read More" truncation for long descriptions: (code)

(Ben clicks “Apply” and “Accept” 3 more times. Goes to browser. It all works.)

The wow bit: the AI (unprompted) came back and suggested more improvements I didn’t ask for…

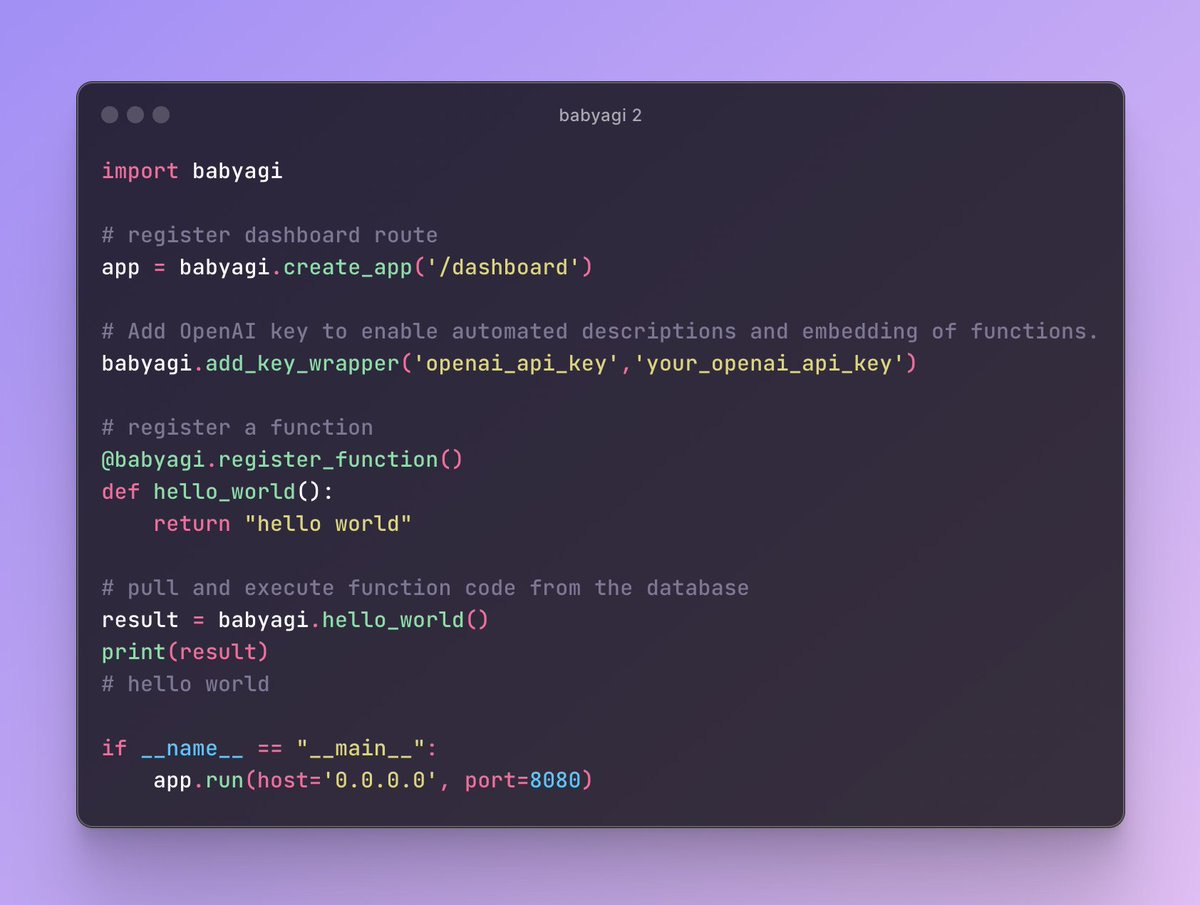

🧑💻🤖Cognition Labs is a Peter Thiel-backed startup that has built a product called Devin, which they claim is the first AI software engineer:

“Devin is an autonomous agent that solves engineering tasks through the use of its own shell, code editor, and web browser. When evaluated on the SWE-Bench benchmark, which asks an AI to resolve GitHub issues found in real-world open-source projects, Devin correctly resolves 13.86% of the issues unassisted, far exceeding the previous state-of-the-art model performance of 1.96% unassisted and 4.80% assisted.”

By the end of the year, Devin was released commercially at a US$500/month price tag.

Image

Image models had a relatively slow year, but there were still significant advances.

Midjourney 6.1 was released in July and is still the SOTA. Midjourney also continues to pump out features, including image editing, upscaling and style codes… so much capability.

FLUX / Black Forest Labs The original technical team behind the beleaguered Stability.ai came out of stealth as Black Forest Labs, launching their open source FLUX AI image model — which can be run locally. It’s pretty good straight off the bat:

(Can be used from within Krea.ai for US$10/month)

OpenAI’s DALL-E (built into ChatGPT) hasn’t seen much improvement all year… although, given Sora’s impressive video capabilities, an upgrade must surely be waiting in the wings?

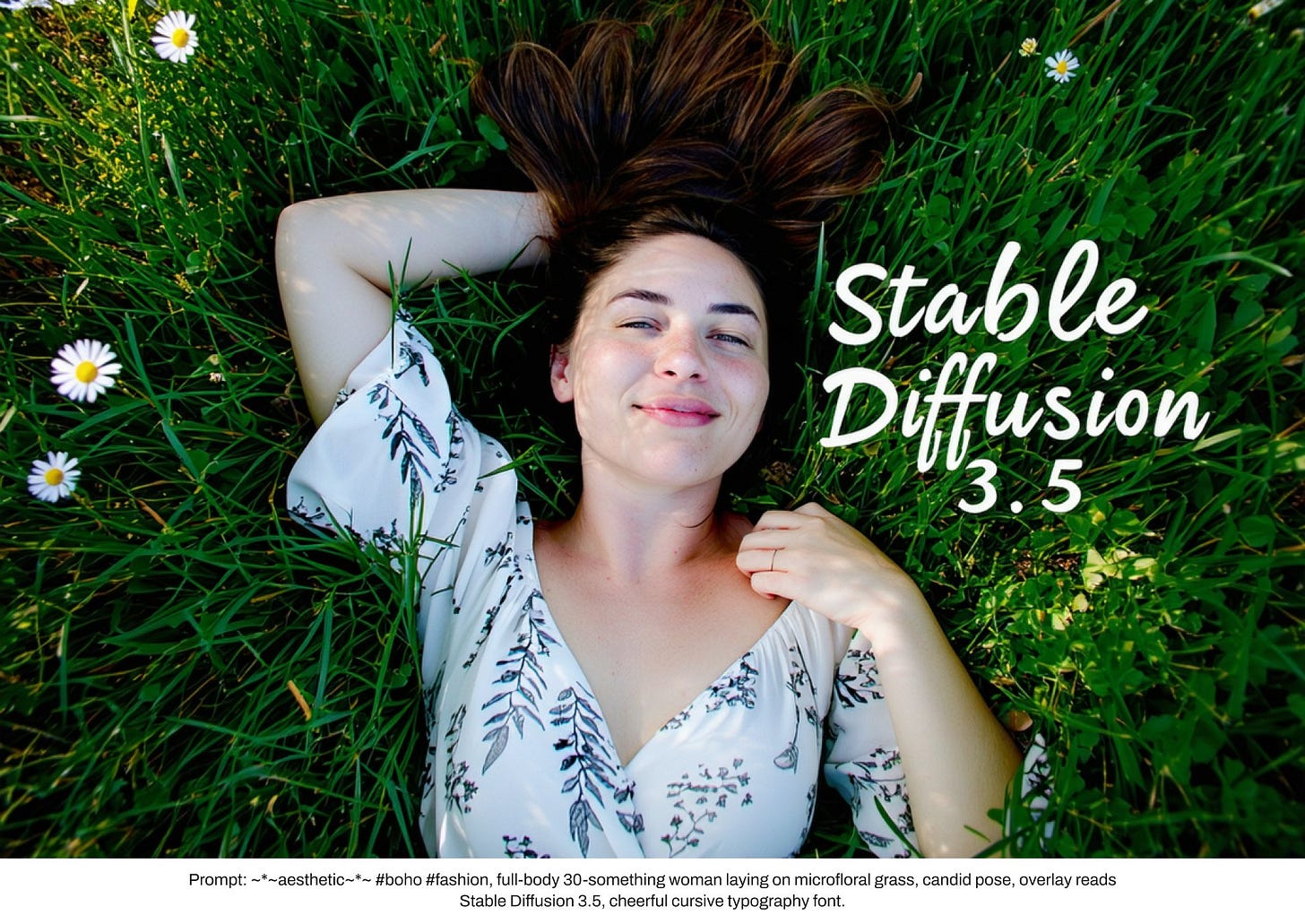

Stability AI released Stable Diffusion 3.5:

An “open release”, free for both commercial and non-commercial use under the Stability AI Community License (commercial use is free for organisations with under US$1M in annual revenue), which can run on consumer hardware. Impressive image quality, and it solves the text rendering problem:

🖼️And finally, at the end of the year, came Google’s Imagen 3, an upgraded version of its text-to-image model that gets fingers right! (And presumably fixes the woke history bugs in the last version.) Use it from within Gemini.

(Also lots of other labs out there including from China… too many to cover…! Lots of choice).

Video

2024 was definitely the year when AI video came of age. OpenAI’s preview of its Sora model early in the year gave an indication of what was possible with the SOTA… but it was only released to the public in December.

During the year both Western and Chinese labs pumped out model after model and feature after feature. Text-to-video, image-to-video, video-to-video style transfer… the editing tools are now reaching parity with many professional studio-level special effects houses.

By the end of the year, the leaderboard looked like this:

Just a few notable demo reels from this year:

Runway continued to be a leader on features. Gen-3 Video to Video now extends to 20 seconds. Here’s an example of what you can do with it (via @Uncanny_Harry, who doesn’t look like this in real life!)

🎥🪄KLING While the world continued to wait for OpenAI to just drop Sora, a Chinese competitor arrived on the scene suddenly in June… GenAI video service KLING went straight into open access in China with some very impressive demo videos. Apparently KLING can generate 2-minute videos at 30fps, 1080p quality and is available on the KWAI iOS app with a Chinese phone number. Here’s an early demo reel:

Genmo Mochi 1 Yet another model from yet another lab… but this one is open source.

OpenAI Sora… just released. This instructional video from OpenAI creative Chad Nelson shows how powerful the new workflow tools are:

Google Veo 2… just released:

🍝Will Smith spaghetti benchmark goes up again If you’re not “feeling the acceleration” yet, check out the advances in just 20 months on the classic “Will Smith eating spaghetti” AI video meme and de facto benchmark.

April 2023: the original using early Stable Diffusion (note the weird ghost Shutterstock watermark, presumably from the training dataset…)

November 2023, RunwayML via @bennash (covered in Memia 2023.45)

February 2024: Will Smith parodies viral AI-generated video by actually eating spaghetti (well played)

And then mid-2024, it looks like KLING was the first to properly crack the “Will Smith Eating Spaghetti” benchmark. Top marks. (via @venturetwins)

Convergence What we’re also witnessing is a convergence of the entertainment and AI industries:

Legendary filmmaker James Cameron (of Terminator and Avatar fame) joined Stability AI's Board of Directors. (It looks like Stability has a strategy again post-Emad.)

A partnership between Lionsgate Studios and Runway to train a custom AI model on Lionsgate’s movies and shows.

By the end of 2025, perhaps every legacy film studio will have licensed its back catalogue to AI companies… or, perhaps even more likely, they will be engaged in futile copyright cases over “AI training”…

Either way, would someone please hurry up and make the fanfic version of the cancelled Series 2 of The Peripheral with AI?!

🎵Audio

All your music is now AI…

Suno First off the blocks early in the year was audio startup Suno:

“Suno, create a song about New Zealand in the future in the style of 90s British Britpop” (it’s awful, but strangely compelling…):

Later in the year this had advanced to Suno AI Covers: just sing a line and get a full music track with your song arranged for you.

Suno 4.0, out now, promises far greater fidelity and quality.

Udio

After the world of music was just about getting used to Suno, thinking “well it’s a bit limited…” along comes Udio, which *raised the bar*.

One of my favourite creations, "Dune the Broadway Musical":

Rolling Stone gives the background to the Udio team here: AI-Music Arms Race: Meet Udio, the Other ChatGPT for Music.

Instant background soundtracks for all your projects now… for cents on the dollar (if not free). Amazing. As I said in an interview with RNZ’s Jesse Mulligan back in April 2023, we’re heading towards a future where all music is AI-generated.

🗣️Voice

🤖Still hiring humans? Bland.ai came out with a slick new sales demo. Voice latency is now down under 1 second… it’s very nearly impossible to tell who or what is on the other end of the call. TCS (Tata Consultancy Services) CEO K. Krithivasan sees where this is heading: AI could significantly replace the need for call centres within 1 year.

ElevenLabs is also one of the leading voice AI labs:

Midyear they released their new Reader app, which will narrate any text content (articles, PDFs, ePubs, newsletters) in any voice you choose from their “Iconic Voice Collection” library. Upload your content and listen on the go. So far voices include Judy Garland, James Dean, Burt Reynolds and Sir Laurence Olivier (with royalties accruing to their estates, one assumes… strangely, no Scarlett Johansson!)

By the end of the year they also dropped Conversational AI agents:

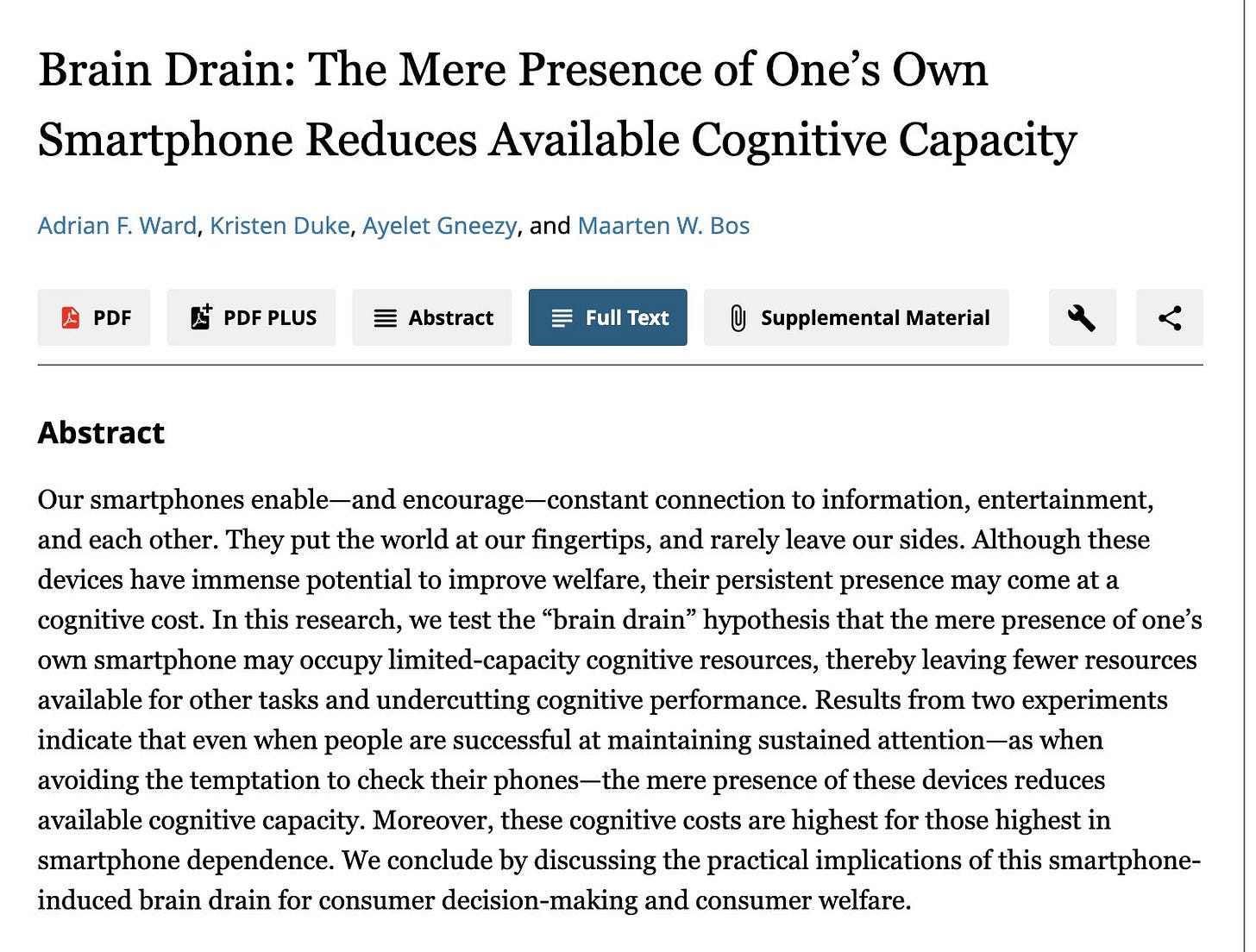

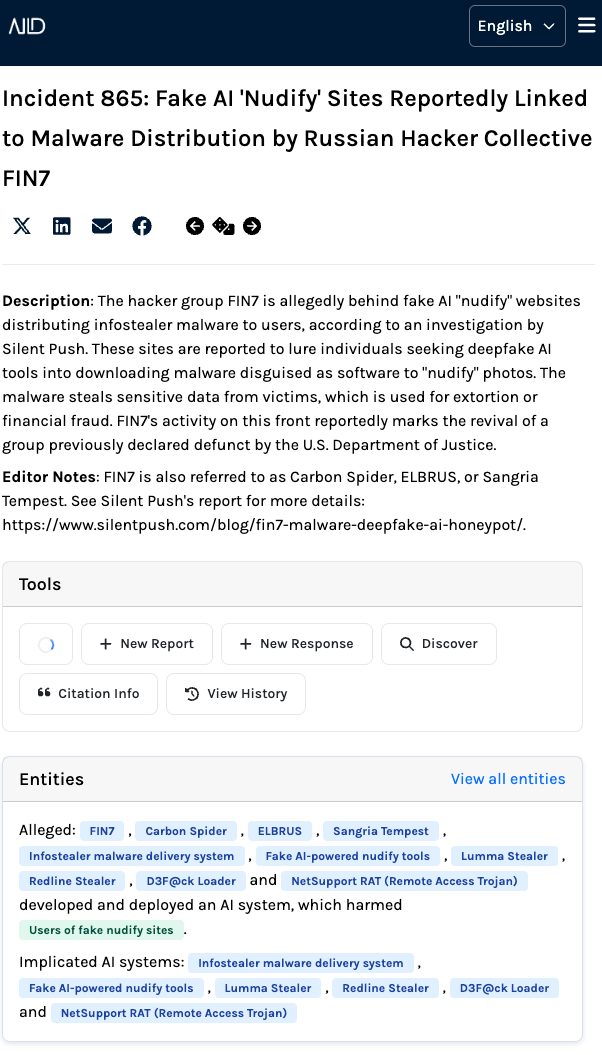

ChatGPT Advanced Voice Mode (AVM), announced in May but only rolled out to most subscribers in October, is the most sophisticated voice companion so far. I’ve taken to chatting to it in my car… amazingly close to human… and yet not quite. Here’s a great clip from OpenAI’s launch event with erstwhile CTO Mira Murati getting it to translate in real time. I used it exactly like this in Vietnam recently.